Attribution Challenges in Generative AI ROI: How to Isolate AI’s Real Impact from Other Changes

Jan 13, 2026

Companies have spent billions on generative AI ($30 to $40 billion since 2023), but 95% of them can’t prove it’s making money. Not because the tech doesn’t work. Because they’re measuring it wrong.

Why Your AI ROI Numbers Are Lies

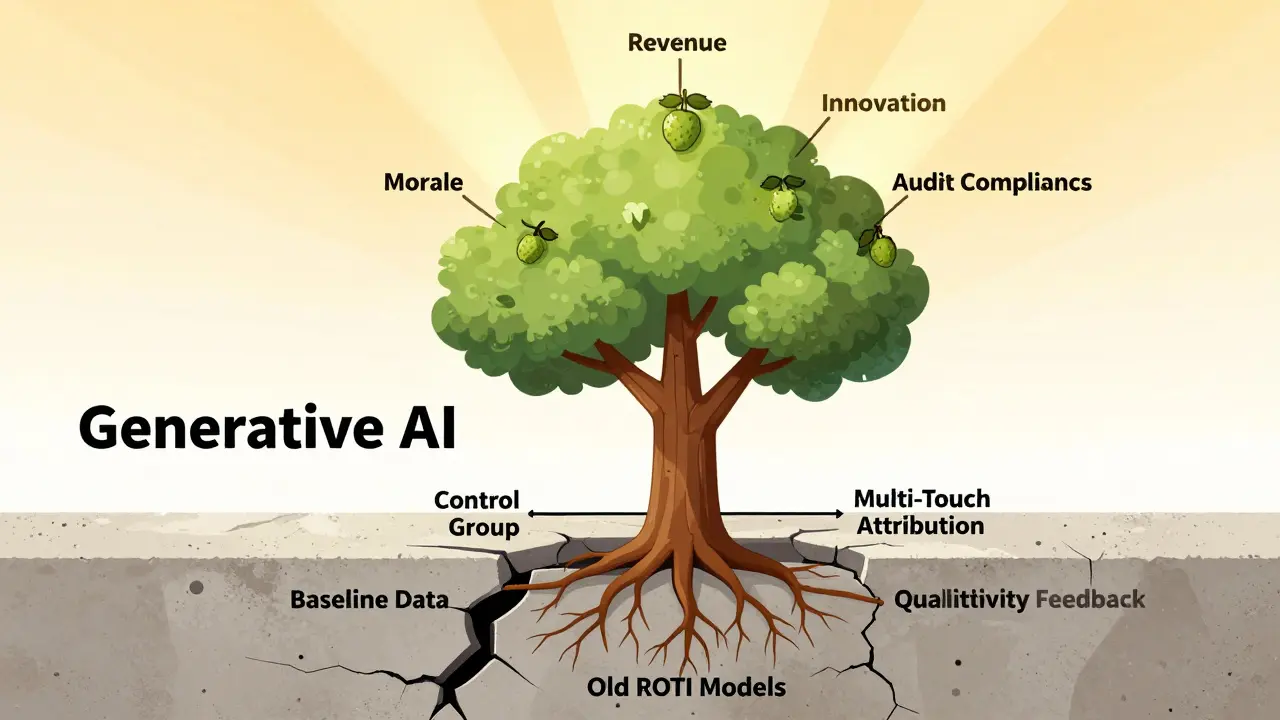

You launched a generative AI chatbot. Sales went up 18%. Your team celebrates. CFO asks: "How much of that was AI?" You freeze. Because you don’t know.

That’s not an anomaly. It’s the norm. According to Gartner’s 2024 survey of 1,200 enterprise leaders, 74% say attribution is their biggest roadblock to proving AI value. The problem isn’t that AI doesn’t help. It’s that you can’t untangle its effect from everything else changing at the same time. Was the sales lift from the chatbot? Or the new website design? The price drop? The marketing campaign that launched the same week? The fact that your customer service team started using AI templates to respond faster? All of it? None of it?

Traditional ROI models were built for machines that do one thing: a CNC machine cuts parts faster. A new ERP system replaces a legacy database. You measure before and after. Simple.

Generative AI doesn’t work like that. It’s not a tool. It’s a catalyst. It changes how people work, how teams collaborate, how decisions get made. It doesn’t replace a process; it reshapes it across departments. That’s why measuring it like a lightbulb replacement fails.

The 5 Biggest Measurement Mistakes

Most companies make the same five mistakes when trying to measure AI’s impact:

- Measuring too soon. 67% of organizations assess ROI within six months. But generative AI needs 12 to 18 months to mature. Employees need time to learn, workflows need time to adapt, and the system needs time to improve through feedback. Measuring at month three is like judging a marathon runner after the first mile.

- Using single metrics. 63% rely on one number, like reduced support tickets or faster report generation. That’s like judging a car’s value by how loud the engine sounds. You’re missing the whole picture: employee satisfaction, innovation speed, risk reduction, customer loyalty.

- Ignoring confounding variables. 78% don’t account for concurrent changes. A process reengineering, a new hire, a policy update: all of these move the needle. If you don’t isolate them, you’re attributing their impact to AI.

- No baseline. No control. Only 18% of failing programs established a baseline before launch. And only 22% use proper control groups. Without knowing what normal looked like, you’re flying blind.

- Trusting vendor calculators. AI vendors sell ROI tools that assume all gains come from their product. One healthcare director told G2: "Our vendor’s calculator said AI drove 100% of productivity gains. Reality? Process changes contributed 40%. The tool was useless."

What Works: The 4 Proven Methods

The 26% of companies that do prove AI’s ROI don’t guess. They use structured, technical methods. Here’s what they do:

1. Build a Pre-Launch Baseline

Before you deploy AI, measure everything. How long does it take to generate a report? How many customer complaints per week? How many hours do engineers spend on documentation? Track it daily for at least 30 days. Store it. Use it as your "before" number.

2. Use Counterfactual Analysis

This is the gold standard. Ask: "What would have happened if we didn’t deploy AI?" Siemens did this with their AI design assistant. They ran two parallel teams: one using AI, one not. Both worked on similar projects. After six months, the AI team completed designs 27% faster, with 95% statistical confidence that the gain came from AI, not experience or better tools. You don’t need a lab. You can use historical data from similar teams or departments. If your sales team in Chicago used AI and your team in Atlanta didn’t, compare their performance trends over time.

3. Apply Multi-Touch Attribution

AI doesn’t act alone. It’s part of a chain. At American Express, a generative AI tool helped agents draft responses. But the 22% drop in handling time wasn’t just the AI. It was the AI + new training + updated knowledge base + revised escalation rules. They mapped each intervention and assigned weight. AI accounted for 40%. Training, 30%. Knowledge base, 20%. Process change, 10%. That’s how you get real numbers.

4. Track Soft ROI with Qualitative Data

Financial metrics don’t capture everything. Generative AI boosts morale, reduces burnout, and sparks innovation. IBM tracked developer productivity using 360-degree feedback. Engineers said AI helped them focus on complex problems instead of boilerplate code. That led to faster feature releases and fewer bugs. That’s ROI too, even if it doesn’t show up on a P&L.
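The weighting step in the multi-touch example above is simple arithmetic once the weights exist. The sketch below only shows that last step, using the 40/30/20/10 split and the 22% drop quoted for American Express. How those weights were derived is not described here, so they are treated as given inputs, and the function and variable names are illustrative assumptions.

```python
# Weights quoted in the American Express example; how they were derived
# is not stated in the article, so they are plain inputs here.
WEIGHTS = {
    "generative_ai": 0.40,
    "training": 0.30,
    "knowledge_base": 0.20,
    "process_change": 0.10,
}


def apportion(total_effect, weights):
    """Split a measured total effect across interventions by weight."""
    total_weight = sum(weights.values())
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError(f"weights must sum to 1.0, got {total_weight}")
    return {name: total_effect * weight for name, weight in weights.items()}


# 22.0 = the 22% drop in handling time, expressed in percentage points.
shares = apportion(22.0, WEIGHTS)
for name, points in shares.items():
    print(f"{name}: {points:.1f} points of the 22% drop")
```

The check that the weights sum to 1.0 is the useful part: it forces every point of the improvement to be assigned to some intervention, which is exactly what a vendor calculator that credits AI with 100% skips.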

The Infrastructure You Need

You can’t measure what you can’t see. Most companies lack the data foundation to isolate AI’s impact. You need:

- Granular usage tracking: Capture 15 to 20 data points per AI interaction: what prompt was used, how long it took to approve the output, which team used it, what task it replaced.

- Integrated data pipelines: Connect your AI tool to your CRM, ERP, HR systems, and support tickets. Without this, you’re working with siloed snapshots.

- Real-time dashboards: Leaders don’t want quarterly reports. They want to see AI’s impact on revenue or cost savings as it happens. Leading companies now use dashboards that link AI usage directly to financial KPIs.

- AI observability tools: Platforms like Censius and WhyLabs help track model drift, usage patterns, and output quality. These aren’t just for engineers. They’re for analysts measuring impact.
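As a rough illustration of granular usage tracking, the sketch below logs one AI interaction as a structured record that a data pipeline could later join to CRM or ticketing data. Every field name is a hypothetical choice made for this example, and it covers only a handful of the 15 to 20 data points mentioned above.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AIInteraction:
    """One logged AI interaction. All field names are illustrative."""
    timestamp: str             # ISO 8601, UTC
    team: str                  # which team used the tool
    user_id: str               # pseudonymous user identifier
    task_replaced: str         # the manual task the AI output stood in for
    prompt_template: str       # which prompt or template was used
    seconds_to_approve: float  # time from AI output to human approval
    output_accepted: bool      # whether the output was used at all


def to_log_line(event):
    """Serialize an event as one JSON line for an append-only log."""
    return json.dumps(asdict(event), sort_keys=True)


event = AIInteraction(
    timestamp=datetime(2026, 1, 13, 9, 30, tzinfo=timezone.utc).isoformat(),
    team="support",
    user_id="u-1042",
    task_replaced="draft_customer_reply",
    prompt_template="refund_request_v2",
    seconds_to_approve=48.0,
    output_accepted=True,
)
print(to_log_line(event))
```

One JSON line per interaction keeps the log easy to append to and easy to load into whatever warehouse or spreadsheet the analysis ends up in.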

Who’s Winning? Who’s Losing?

The "GenAI Divide" isn’t about tech. It’s about measurement maturity.

Manufacturers and financial firms lead. Why? They’ve been measuring operational efficiency for decades. They know how to track time, cost, quality. They adapted those frameworks. Now, 41% of manufacturers and 38% of financial firms can prove AI ROI.

Retail and healthcare lag. Why? They’re focused on customer experience, not internal metrics. They track satisfaction, not productivity. They’re trying to measure a transformation with the wrong ruler.

The difference? The winners spent six months setting up measurement before deploying AI. The losers deployed AI first, then asked: "Where’s the ROI?"

The New Rules for AI Investment

CFOs aren’t asking for hype anymore. They’re asking for proof. A Fortune 500 CIO said it bluntly: "Our board approved our initial AI budget based on industry hype. Next year, they want proof, not promises."

That’s the new reality. And it’s not going away. The EU AI Act now requires impact assessments, including ROI, for high-risk AI. The SEC requires public companies to explain how AI affects financial performance. Investors are watching. Boards are asking. Auditors are checking. If you can’t isolate AI’s impact, you won’t get more budget. You won’t get a promotion. You might get replaced.

What to Do Now

If you’re stuck in pilot purgatory, here’s your action plan:

- Stop measuring ROI until you have a baseline. Collect 30 days of pre-AI data. Don’t skip this.

- Identify your top three use cases. Then start with the one or two where AI replaces repetitive work: report generation, drafting emails, code comments, customer replies.

- Build a control group. Use an existing team or department that doesn’t use AI. Compare their output over time.

- Track both hard and soft metrics. Time saved. Errors reduced. Employee feedback. Innovation ideas generated.

- Document everything. Create an attribution playbook. What did you measure? How? What did you learn? Share it. Repeat it.
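The baseline and control-group steps in the plan above can be combined into a difference-in-differences estimate: the change in the AI team minus the change in the control team over the same period. The sketch below uses made-up weekly output numbers and hypothetical names. It also assumes both teams would have followed the same trend without AI, which is something to check rather than take for granted.

```python
from statistics import mean


def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Made-up data: reports completed per person per week.
ai_team_before = [10.0, 11.0, 9.0, 10.0]
ai_team_after = [14.0, 15.0, 13.0, 14.0]
control_before = [10.0, 10.0, 11.0, 9.0]
control_after = [12.0, 11.0, 12.0, 13.0]

naive_lift = mean(ai_team_after) - mean(ai_team_before)
effect = diff_in_diff(ai_team_before, ai_team_after, control_before, control_after)

print(f"Naive before/after lift: {naive_lift:.1f} reports per week")
print(f"Lift after subtracting the control trend: {effect:.1f} reports per week")
```

In this toy data the control team also improved, so half of the naive lift disappears once its trend is subtracted. That gap is the kind of concurrent change a simple before/after comparison would credit to AI.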

Final Thought

Dr. Erik Brynjolfsson of Stanford put it best: "We’re trying to measure the value of electricity by counting how many candles it replaces." Generative AI isn’t replacing a tool. It’s changing how work gets done. You can’t measure that with old math. The companies that win aren’t the ones with the fanciest models. They’re the ones who figured out how to measure what matters. If you don’t solve attribution, your AI investment won’t survive the next budget cycle.

Why can’t I just use the ROI calculator from my AI vendor?

Vendor ROI calculators assume all improvements come from their tool. They ignore concurrent changes like process updates, new hires, marketing campaigns, or pricing shifts. One healthcare provider found their vendor’s tool claimed 100% of productivity gains came from AI, when in reality process changes accounted for 40%. These tools are marketing material, not measurement tools.

How long should I wait before measuring AI’s ROI?

Wait 12 to 18 months. Generative AI doesn’t deliver instant results. Teams need time to adapt. Workflows need to stabilize. Feedback loops need to improve the model. Measuring at six months misses 73% of the value, according to Berkeley Executive Education. Early gains are often noise. Real impact emerges after adoption matures.

What’s the difference between generative AI and agentic AI ROI measurement?

Generative AI (like chatbots or document summarizers) boosts efficiency and productivity. Measure it with time-motion studies: how much faster do reports get made? How many fewer hours are spent drafting emails? Agentic AI (like autonomous workflow bots) redesigns processes. Measure it with cost savings: how many full-time roles were eliminated? How much did manual processing drop? They require different metrics and timeframes.

Can I use A/B testing to measure AI impact?

Yes, and it’s one of the most reliable methods. Split teams or workflows: one group uses AI, the other doesn’t. Track identical KPIs over time. American Express used this to isolate a 22% reduction in customer service handling time. The key is keeping everything else equal (same team size, same tasks, same tools) except the AI.
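Once the two groups have tracked the same KPI, a permutation test is one simple way to ask whether the gap between them is bigger than chance alone would produce. The sketch below is an assumption-laden illustration, not a description of how American Express ran its analysis: the handling times are made up, and the function name and parameters are hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(control, treated, n_permutations=5000, seed=0):
    """One-sided test: is the treated group's mean lower than chance allows?"""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = mean(control) - mean(treated)
    pooled = list(control) + list(treated)
    n_control = len(control)
    hits = 0
    for _ in range(n_permutations):
        # Reassign group labels at random and recompute the gap.
        rng.shuffle(pooled)
        gap = mean(pooled[:n_control]) - mean(pooled[n_control:])
        if gap >= observed:
            hits += 1
    # The +1 terms keep the estimate away from an impossible p-value of zero.
    return (hits + 1) / (n_permutations + 1)


# Made-up average handling times in minutes, one value per agent.
without_ai = [12.1, 11.8, 12.5, 13.0, 12.2, 11.9, 12.7, 12.4]
with_ai = [9.8, 10.1, 9.5, 10.4, 9.9, 10.2, 9.6, 10.0]

p_value = permutation_p_value(without_ai, with_ai)
reduction = (mean(without_ai) - mean(with_ai)) / mean(without_ai) * 100
print(f"Observed reduction: {reduction:.1f}%")
print(f"One-sided p-value: {p_value:.4f}")
```

A small p-value only says the gap is unlikely to be noise. It says nothing about why the gap exists, so the "everything else equal" condition still has to hold for the difference to be credited to AI.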

What if my company doesn’t have the data infrastructure for this?

Start small. Pick one use case. Manually track the top three metrics before and after AI rollout for 30 days. Use spreadsheets if needed. Focus on time saved, error rates, and employee feedback. You don’t need a fancy platform to prove value. You need discipline. Once you show results, you’ll get budget for better tools.
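A spreadsheet export is enough to get started. The sketch below reads a small CSV, with made-up rows and hypothetical column names, and summarizes one metric before and after rollout. Only four days per phase are shown to keep it short, where the advice above calls for 30. A plain before/after comparison like this still needs a control group before the change can be credited to AI.

```python
import csv
import io
from statistics import mean, stdev

# Stand-in for a spreadsheet exported to CSV; values are invented.
CSV_EXPORT = """day,phase,minutes_per_report
1,before,42
2,before,45
3,before,40
4,before,43
5,after,35
6,after,33
7,after,36
8,after,34
"""


def summarize(csv_text, metric):
    """Return {phase: (mean, standard deviation)} for one metric column."""
    by_phase = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        by_phase.setdefault(row["phase"], []).append(float(row[metric]))
    return {phase: (mean(vals), stdev(vals)) for phase, vals in by_phase.items()}


summary = summarize(CSV_EXPORT, "minutes_per_report")
before_mean, before_sd = summary["before"]
after_mean, after_sd = summary["after"]
pct_change = (after_mean - before_mean) / before_mean * 100

print(f"Before: {before_mean:.1f} min (sd {before_sd:.1f})")
print(f"After:  {after_mean:.1f} min (sd {after_sd:.1f})")
print(f"Change: {pct_change:.1f}%")
```

Reporting the standard deviation next to each mean gives a quick sense of whether the change is larger than the normal day-to-day swing.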

Is there a standard framework I can follow?

Yes. In Q2 2025, the AI Measurement Consortium (47 Fortune 500 companies) released Version 2.1 of the Generative AI ROI Framework. It includes standardized methods for counterfactual analysis, multi-touch attribution, and time-series decomposition. While adoption is still low, it’s the closest thing to an industry standard. Start with their guidelines, even if you adapt them.

saravana kumar

January 13, 2026 AT 11:49

Let’s be real - if your CFO is asking for ROI proof after 6 months, you already lost. The vendor’s ROI calculator? A glorified PowerPoint slide with fake numbers. I’ve seen this 12 times in Bangalore alone. Companies deploy AI like it’s a new coffee machine, then panic when the beans don’t magically brew profit. Baseline data? Control groups? Please. Most teams can’t even track their own TPS reports. You want attribution? Start by writing down what the hell you were doing before you turned on the bot. No magic. Just discipline. And yes, it’s boring. That’s why 95% fail.

Tamil selvan

January 13, 2026 AT 23:34

Thank you for this comprehensive breakdown - it’s refreshing to see such a well-structured analysis. I particularly appreciate the emphasis on counterfactual analysis and the distinction between generative and agentic AI. Many organizations overlook the fact that AI is not a standalone tool but an ecosystem integrator. The suggestion to track soft metrics like morale and burnout is especially critical; these are often the first indicators of sustainable change. I would add that establishing an internal AI governance committee, even if small, can ensure accountability and consistency in measurement practices. Thank you again for highlighting the real, actionable steps - not just hype.

Mark Brantner

January 15, 2026 AT 02:39

sooo… you’re telling me the reason my boss won’t give me a raise is because i didn’t track how many times the AI changed the font size in my quarterly report?? 😭 i just wanted to automate my emails, not become a data scientist. also, who the heck has time to set up a control group? my team’s down to 3 people and we’re all running on espresso and regret. also - vendor calculators are literally just AI whispering ‘you’re awesome’ into a microphone. i’m just here for the free coffee.

Kate Tran

January 16, 2026 AT 08:11

Honestly? I’m shocked anyone still believes vendor ROI calculators. I worked at a health tech startup last year - their ‘AI solution’ claimed to cut patient intake time by 50%. Turned out, they just hired 4 new admins and called it ‘automation’. The tool was barely used. We tracked actual usage logs, compared teams, and found the real gain was in training, not AI. It’s not about the tech. It’s about whether you’re willing to do the boring, messy work of measuring. And no - spreadsheets are fine. You don’t need a $200k platform to prove value. Just honesty.

amber hopman

January 17, 2026 AT 02:01

I’ve been on both sides of this - the ‘we launched AI and now everything’s perfect’ team and the ‘why is nothing working’ team. The difference? The ones who succeeded didn’t start with the AI. They started with the problem. They asked: ‘What task is draining people?’ Then they picked one. Then they measured before. Then they added AI. Then they measured again. No vendor tool. No dashboards. Just a shared spreadsheet and a team that actually talked to each other. The real ROI? People stopped hating their jobs. That’s the metric that matters. And yes, it’s slower. But it’s real.