Benchmarking Transformer Variants on Real-World Large Language Model Workloads

Feb 12, 2026

When you're building a real-world AI application, choosing the right transformer model isn't about which one is newest. It's about which one actually works for your specific job. Too many teams pick GPT-4 because it's popular, only to find that it's too slow, too expensive, or a poor fit for their data. The truth is, different transformer variants excel at different things. Some are lightning-fast. Others understand code better. A few can read a 300-page contract and still answer questions accurately. This isn't theory. These are real trade-offs you'll face in production.

What transformer variants actually do in practice

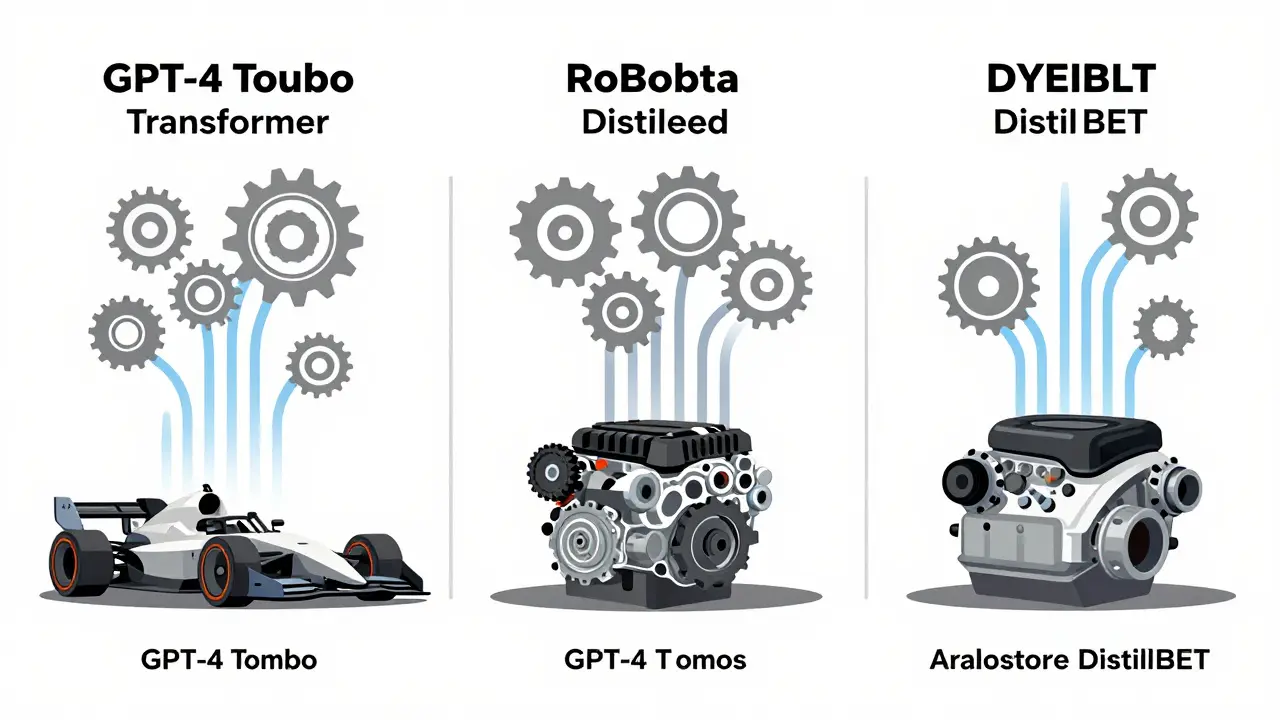

Transformers aren't one-size-fits-all. The original transformer from 2017 was a breakthrough, but today's models are specialized tools. Think of them like different types of engines: a race car engine for speed, a diesel engine for torque, and a hybrid for efficiency. You don't put a race engine in a delivery van. Same logic applies here.

GPT-4 Turbo is the go-to for high-stakes tasks. It handles 128,000 tokens (over 300 pages of text) in a single input. A company that switched its customer service chatbot from GPT-3.5 to GPT-4 Turbo saw customer satisfaction jump 12 points. But that came at a cost: four times the price per request. If you're processing thousands of queries a day, that adds up fast. And you can't tweak it. You can't see the weights. You're locked into OpenAI's API, with rate limits and unpredictable downtime during peak hours.
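To sanity-check the "over 300 pages" figure, here is a rough back-of-the-envelope conversion. Both ratios are rule-of-thumb assumptions (about 0.75 English words per token and about 300 words per page), not measured values, so treat the result as an estimate.

```python
# Rough conversion between a token budget and printed pages.
# Both ratios below are assumptions, not measurements.
WORDS_PER_TOKEN = 0.75  # assumed average for English text
WORDS_PER_PAGE = 300    # assumed words on a printed page


def tokens_to_pages(tokens: int) -> float:
    """Estimate how many pages of text fit in a token budget."""
    return tokens * WORDS_PER_TOKEN / WORDS_PER_PAGE


def pages_to_tokens(pages: float) -> int:
    """Estimate the token budget needed for a document of this length."""
    return round(pages * WORDS_PER_PAGE / WORDS_PER_TOKEN)


print(tokens_to_pages(128_000))  # 320.0
print(pages_to_tokens(300))      # 120000
```

Dense legal or technical text usually tokenizes less efficiently than plain prose, so leave yourself some margin.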

On the other end, BERT-family models like RoBERTa and DistilBERT are open. You download them. You run them on your own server. RoBERTa-Large scores 7.8 out of 10 on accuracy and 8 on speed, but what really matters is price efficiency: a perfect 10. If you're a small team without a big cloud budget, these models are your best bet. DistilBERT cuts model size by 40% and keeps 97% of BERT's accuracy. That means you can run it on a single GPU, even a consumer-grade one, without losing much performance.

Long context isn't just a feature, it's a requirement

Many older models max out at 512 or 1,024 tokens. That's fine for short questions or tweets. But if you're analyzing legal contracts, medical records, or engineering specs, you need more. Transformer-XL breaks the mold here. With a context window over 3,000 tokens, it can process entire chapters of a book in one go. It's not as accurate as GPT-4 on reasoning tasks, but it holds up well for document classification or scientific paper summarization. The catch? It needs custom CUDA kernels to run efficiently. Most cloud providers don't support it out of the box. You'll need engineers who know how to optimize GPU memory. If you don't have that, it's not worth the hassle.

That's where GPT-4 Turbo and Gemini 2.5 Flash come in. Both support 128,000+ tokens. Gemini 2.5 Flash is the dark horse. It's not as strong on deep reasoning, but it's three times faster than GPT-4 and handles images and text together. If you're building a tool that scans blueprints and answers questions about them, Gemini 2.5 Flash is the natural choice. One construction firm used it to automate permit reviews. They cut processing time from 3 days to 12 hours. The savings? Over $200,000 a year.

Open-source alternatives are now competitive

A few years ago, open-source models were behind. Not anymore. Falcon 40B and Nemotron-4 70B are now in the same league as GPT-4 on benchmarks. Falcon 2 even handles multimodal inputs (text and images) without needing separate systems. Nvidia's Nemotron line, some of which builds on Llama 3, is Nvidia's answer to the black-box problem. You can run Nemotron-4 15B on a single RTX 4090, provided you quantize the weights to fit in its 24 GB of memory. That's right: a consumer graphics card. No cloud subscription. No API calls. Just local inference.
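Whether a model fits on a consumer card comes down to simple arithmetic on the weights. The sketch below estimates weight memory only (it ignores activations and the KV cache), and the 10% headroom factor is an assumption, so read the result as a lower bound rather than a guarantee.

```python
GIB = 1024 ** 3  # bytes in one GiB


def weight_memory_gib(params_billions: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in GiB."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / GIB


def fits(params_billions: float, bits_per_param: int,
         vram_gib: float, headroom: float = 0.9) -> bool:
    """True if the weights fit while leaving some VRAM free (assumed 10%)."""
    return weight_memory_gib(params_billions, bits_per_param) <= vram_gib * headroom


# A 15B-parameter model on a 24 GB card at three precisions.
for bits in (16, 8, 4):
    size = weight_memory_gib(15, bits)
    print(f"{bits}-bit: {size:.1f} GiB, fits: {fits(15, bits, 24)}")
```

At 16-bit precision the weights alone come to roughly 28 GiB, which is why a 15B model needs 8-bit or 4-bit quantization to run on a 24 GB card.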

Why does this matter? Control. If you're in healthcare, finance, or government, you can't send sensitive data to OpenAI. You need to own the model. Falcon and Nemotron give you that. You can audit the weights. You can fine-tune them on your own data. You can deploy them behind your firewall. That's not a nice-to-have. It's a compliance requirement for many industries.

When transformers fail: the hidden vulnerability

Transformers look powerful, but they're brittle. A 2026 study tested models under real-world data shifts, such as a sudden change in customer behavior or new regulations that alter document formats. In these cases, transformer models dropped performance by up to 70%. A model that scored 0.693 on clean data fell to 0.118 when the data shifted. Meanwhile, a simple MLP (multilayer perceptron) held steady at 0.089.

This isn't a bug. It's a feature of how transformers learn. They memorize patterns from training data. If the real world changes, they don't adapt. They break. That's why you can't just train once and deploy. You need monitoring. You need fallbacks. You need to know when your model is underperforming. One fintech startup learned this the hard way. Their transformer model flagged 90% of transactions as fraudulent during a holiday sales spike. Turns out, the training data didn't include Black Friday patterns. They switched to a hybrid system: transformer for normal cases, rule-based logic for outliers. Fraud detection accuracy went back up to 94%.
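A hybrid setup like the one the fintech team landed on can be sketched in a few lines. Everything here is hypothetical: the `Transaction` fields, the z-score check on daily volume, and the amount threshold are illustrative stand-ins, not the startup's actual system.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Transaction:
    amount: float
    daily_volume: int  # hypothetical feature: transactions seen so far today


def is_outlier(tx: Transaction, train_mean: float, train_std: float,
               z_limit: float = 3.0) -> bool:
    """Flag inputs far from what the model saw in training (simple z-score)."""
    return abs(tx.daily_volume - train_mean) / train_std > z_limit


def rule_based_fraud(tx: Transaction, amount_limit: float = 10_000.0) -> bool:
    """Deliberately simple fallback rule; the threshold is made up."""
    return tx.amount > amount_limit


def classify(tx: Transaction, model: Callable[[Transaction], bool],
             train_mean: float, train_std: float) -> Tuple[bool, str]:
    """Use the model for normal traffic and rules for out-of-range traffic."""
    if is_outlier(tx, train_mean, train_std):
        return rule_based_fraud(tx), "rules"
    return model(tx), "model"


def over_flagging_model(tx: Transaction) -> bool:
    """Stand-in for a transformer that flags everything; swap in a real one."""
    return True


print(classify(Transaction(50.0, 1_000), over_flagging_model, 1_000, 100))
# (True, 'model')
print(classify(Transaction(50.0, 5_000), over_flagging_model, 1_000, 100))
# (False, 'rules')
```

The second call simulates a holiday spike: volume is far outside the training range, so the decision goes to the rule instead of the model.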

How to choose the right variant

Here’s how to cut through the noise:

- What’s your task? If it’s reasoning-heavy (legal analysis, coding help, research synthesis), go with GPT-4 Turbo or Claude 4. If it’s classification or summarization, try RoBERTa or Falcon 40B.

- How long is your input? Over 10,000 tokens? You need GPT-4 Turbo, Gemini 2.5, or Transformer XL. Below 1,000? BERT-family models work fine.

- What’s your budget? If you’re paying per API call and processing millions, open-source models like DistilBERT or Nemotron-4 15B will save you six figures a year.

- Do you need control? If compliance, security, or customization matters, skip GPT-4. Use Falcon or Nemotron. You can’t fine-tune GPT-4. You can’t audit it. You’re at the mercy of a vendor.

- Do you need speed? Gemini 2.5 Flash is the fastest. If your app needs sub-200ms responses, it’s your best option.

There’s no universal best model. The best model is the one that solves your problem without breaking your budget, timeline, or compliance rules.
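If it helps to see the checklist as code, here is one way to encode it. The `Requirements` fields and the `suggestions` function are illustrative names, and the thresholds are copied straight from the questions above. It returns suggestions per question rather than a single winner, since the final call is still yours.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Requirements:
    reasoning_heavy: bool    # legal analysis, coding help, research synthesis
    input_tokens: int        # typical input length
    high_volume: bool        # paying per call at millions of requests
    needs_control: bool      # compliance, security, or customization
    needs_low_latency: bool  # e.g. sub-200ms responses


def suggestions(req: Requirements) -> Dict[str, List[str]]:
    """Map each checklist question to the models the article suggests."""
    out: Dict[str, List[str]] = {}
    out["task"] = (["GPT-4 Turbo", "Claude 4"] if req.reasoning_heavy
                   else ["RoBERTa", "Falcon 40B"])
    if req.input_tokens > 10_000:
        out["context"] = ["GPT-4 Turbo", "Gemini 2.5", "Transformer XL"]
    elif req.input_tokens < 1_000:
        out["context"] = ["BERT-family"]
    # Between 1,000 and 10,000 tokens the checklist gives no guidance.
    if req.high_volume:
        out["budget"] = ["DistilBERT", "Nemotron-4 15B"]
    if req.needs_control:
        out["control"] = ["Falcon", "Nemotron"]
    if req.needs_low_latency:
        out["speed"] = ["Gemini 2.5 Flash"]
    return out


req = Requirements(reasoning_heavy=False, input_tokens=400,
                   high_volume=True, needs_control=True,
                   needs_low_latency=False)
for question, models in suggestions(req).items():
    print(f"{question}: {', '.join(models)}")
```

When two answers pull in different directions (say, reasoning-heavy work that also needs full control), that conflict is the real decision you have to make.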

What’s next? Beyond transformers

Even as we benchmark these models, the field is shifting. Mamba, a newer architecture built on state-space models, is starting to outperform transformers on long sequences with 10x less compute. Early tests show it can handle 1 million-token contexts without slowing down. It’s not ready for prime time yet, but by late 2026, it might be. That’s why you shouldn’t lock into one model forever. Build systems that can swap components. Use APIs where possible. Keep your data pipeline clean. The next breakthrough won’t be another transformer. It’ll be something entirely different.
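"Build systems that can swap components" mostly means putting a thin interface between your application and the model. Here is a minimal sketch using Python's `typing.Protocol`. The `TextModel` interface and both backends are hypothetical stand-ins; a real backend would wrap an API client or a local model.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only thing the application is allowed to know about a model."""

    def generate(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in backend; a real one would call a hosted API."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutBackend:
    """A second stand-in, to show that swapping needs no app changes."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


class Summarizer:
    """Application code depends on the interface, not on a vendor."""

    def __init__(self, model: TextModel) -> None:
        self.model = model

    def summarize(self, text: str) -> str:
        return self.model.generate(f"Summarize: {text}")


print(Summarizer(EchoBackend()).summarize("quarterly report"))
# echo: Summarize: quarterly report
print(Summarizer(ShoutBackend()).summarize("quarterly report"))
# SUMMARIZE: QUARTERLY REPORT
```

Swapping in a new architecture later then means writing one new backend class, not rewriting the application.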

Which transformer variant is best for code generation?

GPT-4 Turbo and Claude 4 lead in code generation. They understand complex logic, generate clean syntax, and handle multi-file projects. For open-source, Falcon 40B and Nemotron-4 70B are strong alternatives. If you need speed over perfection, Gemini 2.5 Flash also performs well. For lightweight local use, try CodeLlama 7B, a Llama 2-based model fine-tuned specifically for code.

Can I run GPT-4 on my own server?

No. GPT-4 is a closed-source model owned by OpenAI. You can only access it via their API. You can’t download the weights, fine-tune it, or deploy it locally. If you need local control, use open-source models like Falcon, Nemotron, or Llama 3 variants. They’re designed for on-premise deployment.

Is BERT still relevant in 2026?

Absolutely. While newer models outperform BERT on complex reasoning, BERT-family models like RoBERTa and DistilBERT are still the gold standard for understanding language structure. They’re faster, cheaper, and fully transparent. If you’re doing sentiment analysis, intent classification, or question answering on structured data, they’re often better than heavier models. Many companies still use them because they’re reliable and easy to maintain.

How do I know if my data is too different from training data?

Monitor performance drops. If your model’s accuracy suddenly falls by 15% or more, your data has likely shifted. Set up alerts for prediction confidence scores: if the model starts saying "I’m not sure" often, that’s a red flag. Use simple baselines like logistic regression or MLPs as a control. If the transformer crashes but the baseline holds steady, you’ve got a distribution shift. Re-train with newer data or add guardrails.
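These three checks are easy to wire together. The sketch below is one possible shape, with several assumptions baked in: the 15% figure is read as a relative drop, and the confidence cutoff (0.6) and the share of low-confidence predictions that triggers an alert (30%) are placeholder values you would tune on your own data.

```python
from typing import Dict, Sequence


def relative_drop(reference: float, current: float) -> float:
    """Fraction of the reference accuracy that has been lost."""
    return (reference - current) / reference


def drift_signals(model_ref: float, model_now: float,
                  baseline_ref: float, baseline_now: float,
                  confidences: Sequence[float],
                  drop_limit: float = 0.15,      # 15% drop, read as relative
                  low_conf: float = 0.6,         # assumed "not sure" cutoff
                  low_conf_share: float = 0.3    # assumed alert threshold
                  ) -> Dict[str, bool]:
    """Return the three red flags described above as booleans."""
    model_dropped = relative_drop(model_ref, model_now) >= drop_limit
    baseline_held = relative_drop(baseline_ref, baseline_now) < drop_limit
    unsure = sum(c < low_conf for c in confidences)
    share = unsure / len(confidences) if confidences else 0.0
    return {
        "accuracy_drop": model_dropped,
        "low_confidence": share > low_conf_share,
        # Transformer fell while the simple control held: likely a shift.
        "shift_suspected": model_dropped and baseline_held,
    }


print(drift_signals(0.90, 0.60, 0.80, 0.78, [0.9, 0.4, 0.5, 0.95]))
# {'accuracy_drop': True, 'low_confidence': True, 'shift_suspected': True}
```

In practice you would feed this from a rolling window of labeled samples, since accuracy can only be measured where ground truth eventually arrives.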

What’s the cheapest way to deploy a transformer today?

Run DistilBERT or Nemotron-4 15B on a single consumer GPU like an RTX 4090. No cloud fees. No API limits. You can get under $0.001 per inference. For higher volume, use a cloud provider’s spot instances with Falcon 7B. Total cost? Often under $50/month for thousands of requests. Compare that to GPT-4 Turbo, which can cost $500/month for the same volume.
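"No cloud fees" doesn't mean free: you still pay for the card and the electricity. The sketch below shows how to estimate a local per-inference cost. Every input in the example (GPU price, amortization period, power draw, hours, electricity rate, request volume) is a hypothetical placeholder, so plug in your own numbers.

```python
def monthly_local_cost(gpu_price: float, amortize_months: int,
                       watts: float, hours_per_month: float,
                       price_per_kwh: float) -> float:
    """Amortized hardware cost plus electricity for one month."""
    hardware = gpu_price / amortize_months
    electricity = watts / 1000 * hours_per_month * price_per_kwh
    return hardware + electricity


def cost_per_inference(monthly_cost: float, requests: int) -> float:
    """Average cost of one request at a given monthly volume."""
    return monthly_cost / requests


# Hypothetical inputs: a $1,800 card written off over 36 months,
# drawing 450 W for 100 hours a month at $0.15 per kWh.
local = monthly_local_cost(1800, 36, 450, 100, 0.15)
print(f"monthly: ${local:.2f}")                                  # monthly: $56.75
print(f"per inference: ${cost_per_inference(local, 100_000):.6f}")
```

The per-inference figure depends heavily on volume: the fixed hardware cost gets spread over however many requests you actually serve.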
