Cloud Cost Optimization for Generative AI: Scheduling, Autoscaling, and Spot

Jan, 29 2026

Generative AI is eating through cloud budgets faster than most teams can keep up. In 2025, companies are spending more on AI than ever, but too much of it is wasted. A single misconfigured model can burn through $50,000 in a month. The problem isn’t the technology. It’s how we’re running it. The answer isn’t to use less AI. It’s to run it smarter. That means mastering three core strategies: scheduling, autoscaling, and spot instances.

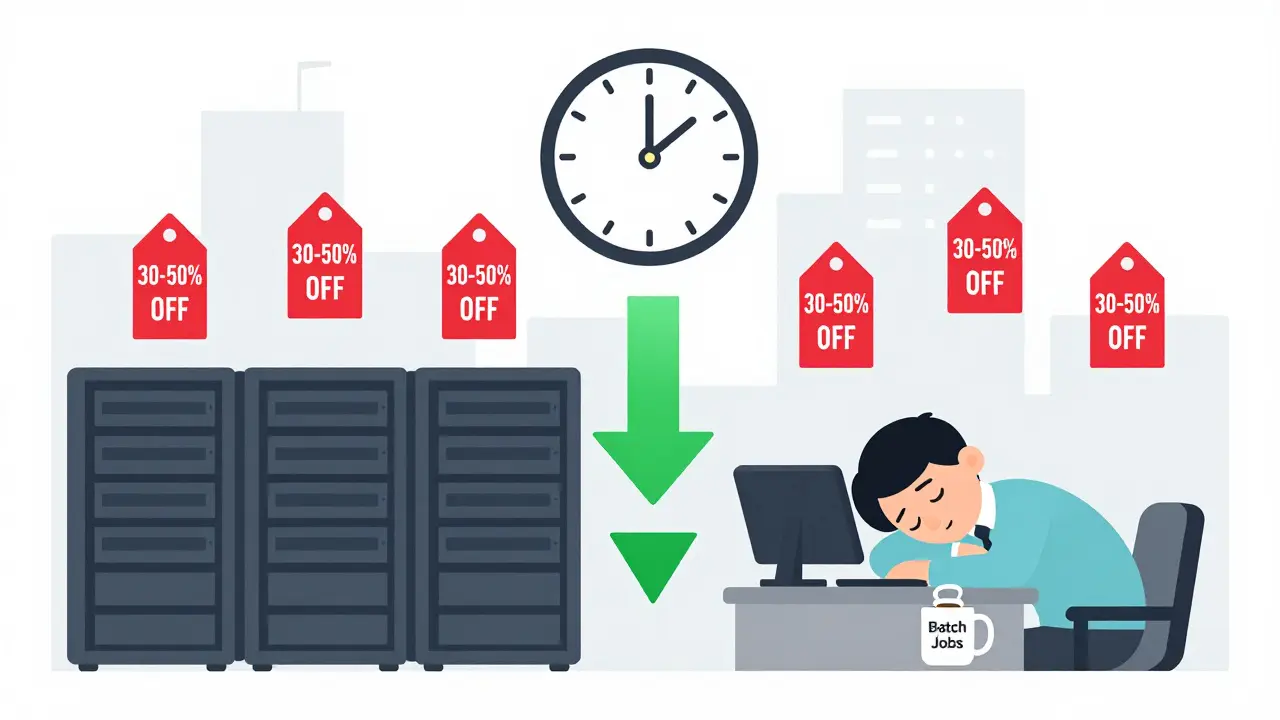

Scheduling: Run AI When It’s Cheap

Most teams treat AI workloads like always-on services. That’s a mistake. Training models, processing batch data, and generating reports don’t need to happen at 3 p.m. on a Tuesday. They can wait until 2 a.m. when cloud prices drop by 30-50%.

In 2025, intelligent scheduling isn’t optional. It’s standard. Hospitals using AI to analyze X-rays and MRIs now run their batch jobs overnight. They save 30-50% on compute without slowing down diagnosis. Why? Because electricity rates are lower, and cloud providers charge less during off-peak hours.

AWS’s cost sentry mechanism for Amazon Bedrock, launched in October 2025, lets you set time-based token limits. If your model tries to generate more than 10,000 tokens between 9 a.m. and 5 p.m., it gets blocked. No alerts. No bills. Just prevention.

You don’t need fancy tools to start. Use cron jobs or cloud-native schedulers to push non-urgent work to low-demand windows. Track usage patterns for a week. Find the quiet hours. Then shift your batch jobs there. The savings add up fast. Teams using this method report 15-20% lower monthly bills just by moving workloads to off-peak times.

Autoscaling: Scale by What Matters, Not by CPU

Traditional autoscaling watches CPU or memory. For AI, that’s useless. A model can sit idle with 5% CPU usage and still be burning through tokens. The real metric? Tokens per second. Latency spikes. Model invocation rates.

Netflix figured this out early. Instead of running every recommendation query through their most expensive model, they built a tiered system. Simple requests, like suggesting a movie based on genre, go to a lightweight model. Complex ones, like analyzing a user’s emotional tone from watch history, use the high-end model. Result? 40% cost reduction with no drop in user satisfaction.

Semantic caching is another game-changer. If 500 users ask the same question (“What’s the capital of Canada?”), you don’t run the model 500 times. You cache the answer. Pelanor’s 2025 case studies show companies cutting AI costs by 35-40% just by caching frequent outputs. That’s not optimization. That’s common sense.

Modern tools like CloudKeeper and nOps now monitor AI-specific signals. They auto-scale up when latency rises or token usage spikes. They auto-scale down when requests drop. And they integrate into your CI/CD pipeline. Every new model you deploy gets a budget cap. No more “oops, I deployed a model that costs $10k/hour” moments.
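To make the "scale on tokens and latency, not CPU" idea concrete, here is a minimal sketch of a scaling decision. It is not how CloudKeeper or nOps work internally. The names `ScalingSignal` and `desired_replicas`, the per-replica capacity of 400 tokens per second, and the 1,500 ms latency target are all illustrative assumptions you would replace with your own measurements.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingSignal:
    tokens_per_second: float  # observed aggregate token throughput
    p95_latency_ms: float     # observed 95th-percentile response latency


def desired_replicas(signal, current, tokens_per_replica=400.0,
                     latency_slo_ms=1500.0, min_replicas=1, max_replicas=20):
    """Pick a replica count from AI-specific signals (all thresholds are assumptions)."""
    # Replicas needed to serve the observed token throughput.
    by_throughput = math.ceil(signal.tokens_per_second / tokens_per_replica)
    target = max(by_throughput, min_replicas)
    # If latency breaches the target, add at least one replica over the current count.
    if signal.p95_latency_ms > latency_slo_ms:
        target = max(target, current + 1)
    # Never exceed the hard ceiling, which doubles as a crude cost cap.
    return min(target, max_replicas)
```

The `max_replicas` ceiling matters as much as the scaling rule: it is the simplest guard against an autoscaler that keeps adding expensive capacity during a traffic spike.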

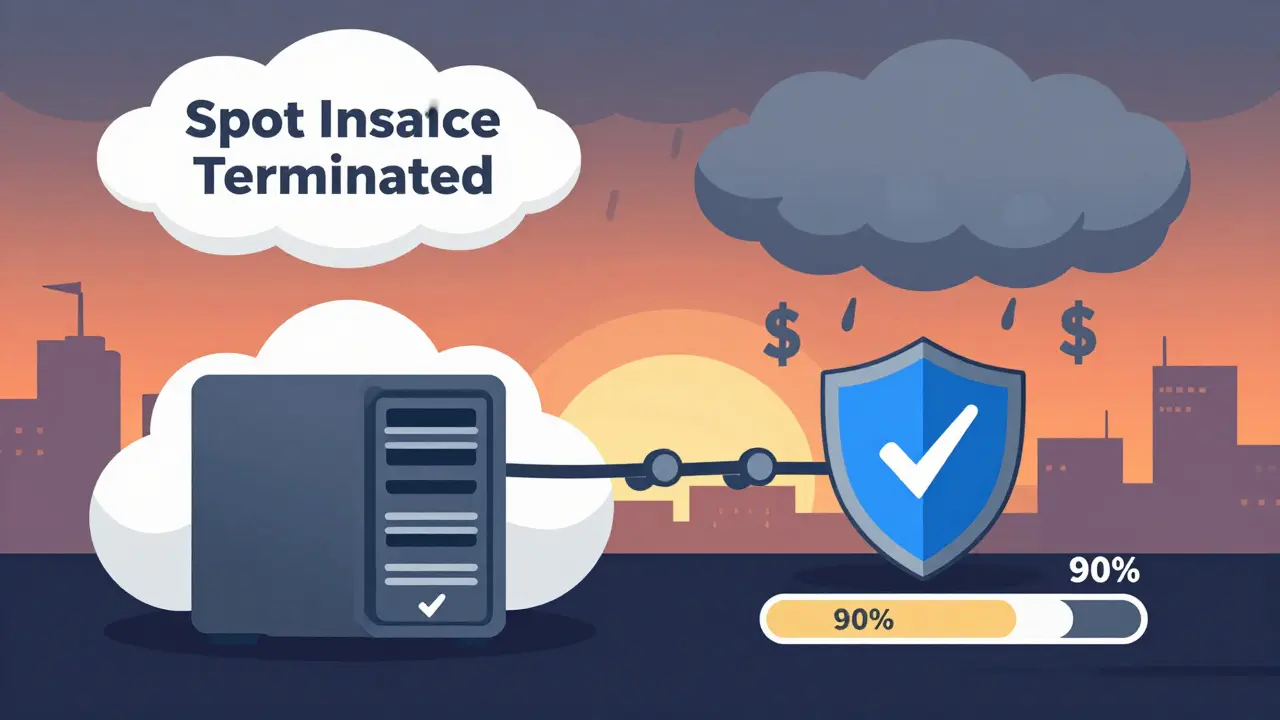

Spot Instances: The Hidden 90% Savings

Spot instances are the secret weapon of smart AI teams. They’re unused cloud capacity sold at 60-90% off. But they can be taken away at any moment. That’s why most people avoid them. And that’s why they’re so powerful.

The key is knowing what workloads can survive interruptions. Training models? Perfect. Batch image processing? Ideal. Real-time chatbots? Not so much.

Successful teams use spot instances with fallback mechanisms. If a spot instance gets reclaimed, the workload automatically moves to a reserved or on-demand instance. No downtime. No lost progress. Just smarter resource use.

But there’s a catch: checkpointing. You must save your model’s state every 15-30 minutes. Otherwise, if the instance vanishes, you lose hours of training. One Reddit user saved $18,500 a month by setting up spot fallbacks, but it took three weeks to get checkpointing right.

Google Cloud and AWS now offer built-in spot management for AI workloads. You tell them: “Run this training job on spot, but if it’s interrupted, pause and resume on a stable instance.” That’s the future. And it’s here now.

Putting It All Together

The best teams don’t use just one of these tactics. They combine them. Here’s how a real company in finance does it:

- They schedule overnight model retraining on spot instances.

- During the day, they use autoscaling to route simple queries to cheaper models and cache frequent responses.

- They enforce sandbox budgets: each data scientist gets $200/month to experiment. When it’s gone, the system shuts down the model automatically.

- Every AI call is tagged so costs are traced to the team, project, or model that created them.
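The last two points, sandbox budgets and per-call tagging, fit together naturally: once every call carries tags, enforcing a cap is a lookup. The sketch below is one possible shape, not the finance team's actual system. `BudgetLedger` and its tag names are hypothetical, and the $200 default simply mirrors the figure above.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class BudgetLedger:
    monthly_cap_usd: float = 200.0  # sandbox budget per person (figure from the text)
    spend: dict = field(default_factory=lambda: defaultdict(float))

    def record(self, owner, project, model, cost_usd):
        """Record one tagged call. Returns False once the owner is over the cap."""
        # Reject untagged calls so every dollar can be traced back to someone.
        if not (owner and project and model):
            raise ValueError("every call must carry owner, project and model tags")
        self.spend[(owner, project, model)] += cost_usd
        return self.total_for(owner) <= self.monthly_cap_usd

    def total_for(self, owner):
        # Sum spend across all of this owner's projects and models.
        return sum(cost for (o, _, _), cost in self.spend.items() if o == owner)
```

A caller would treat a `False` return as the signal to shut the experiment down; how that shutdown happens depends on your serving stack and is left out here.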

Common Pitfalls (And How to Avoid Them)

Not everything goes smoothly. Here’s what trips people up:

- Tagging chaos: If you don’t tag every AI call, you can’t tell who’s spending what. Finout’s December 2025 report says 100% tagging is non-negotiable.

- Model routing errors: One Hacker News user saw accuracy drop 12% after setting up tiered models. Why? They didn’t calibrate the rules. Test your routing. Measure the impact.

- Over-relying on tools: CloudZero, nOps, and CloudKeeper are great, but they won’t fix bad workflows. Start with simple scheduling before buying software.

- Resisting budget limits: Data scientists hate feeling constrained. Give them sandbox budgets. Let them experiment safely. Then show them the savings.
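The routing pitfall above is easiest to catch with a small offline check before rollout. The following is a rough sketch under stated assumptions: you have a held-out set where each example records whether the small and the large model answered correctly, and `router` is whatever rule you plan to deploy. The word-count rule shown is a deliberately naive placeholder, not a recommended heuristic.

```python
def word_count_router(prompt, max_simple_words=12):
    """Naive placeholder rule: short prompts go to the small model."""
    return "small" if len(prompt.split()) <= max_simple_words else "large"


def evaluate_routing(examples, router):
    """Compare routed accuracy with an all-large baseline.

    examples: list of (prompt, small_model_correct, large_model_correct) tuples.
    """
    n = len(examples)
    baseline_hits = sum(large_ok for _, _, large_ok in examples)
    routed_hits = 0
    sent_to_small = 0
    for prompt, small_ok, large_ok in examples:
        if router(prompt) == "small":
            sent_to_small += 1
            routed_hits += small_ok
        else:
            routed_hits += large_ok
    return {
        "baseline_accuracy": baseline_hits / n,
        "routed_accuracy": routed_hits / n,
        "share_to_small": sent_to_small / n,
    }
```

If `routed_accuracy` falls well below `baseline_accuracy`, the rule is sending hard prompts to the cheap model and needs recalibrating before the savings in `share_to_small` are worth taking.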

What’s Next?

By Q3 2026, Gartner predicts 85% of enterprise AI deployments will include automated cost optimization. Right now, it’s 45%. That’s a massive gap. The companies falling behind aren’t the ones with bad models. They’re the ones still treating AI like a black box.

Cost optimization isn’t about cutting corners. It’s about building sustainable AI. The teams that treat cost as a core part of their AI strategy, not an afterthought, see 3.1x higher ROI, according to CloudZero. They’re not just saving money. They’re accelerating innovation.

Start small. Schedule one job. Enable caching. Try spot instances on a training run. Track the results. Then scale. You don’t need a team of engineers. You just need to stop treating AI like it’s free.

Can I use spot instances for real-time generative AI like chatbots?

No, not reliably. Spot instances can be terminated with as little as two minutes’ notice. Real-time applications like chatbots, live translation, or customer support tools need consistent uptime. Use on-demand or reserved instances for these. Save spot instances for batch processing, training, and non-urgent tasks.
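For the batch and training jobs that do belong on spot, the piece that makes interruptions survivable is checkpoint-and-resume. Here is a minimal, framework-free sketch. The JSON state, the `train` loop, and the `stop_after` parameter (which only simulates a reclaim) are illustrative assumptions. A real job would save model weights and optimizer state with its framework's own tools, and the 20-minute default is just one value inside the 15-30 minute range mentioned earlier.

```python
import json
import os
import time


def save_checkpoint(path, state):
    # Write to a temp file, then rename, so a reclaim mid-write cannot corrupt the checkpoint.
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    # Resume from the last saved state, or start fresh if none exists.
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def train(path, total_steps, checkpoint_every_s=20 * 60, stop_after=None):
    state = load_checkpoint(path)
    last_save = time.monotonic()
    steps_this_run = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_this_run >= stop_after:
            return state  # simulated spot reclaim: exit without a final save
        state["step"] += 1
        state["loss"] = 1.0 / state["step"]  # placeholder for a real training step
        steps_this_run += 1
        if time.monotonic() - last_save >= checkpoint_every_s:
            save_checkpoint(path, state)
            last_save = time.monotonic()
    save_checkpoint(path, state)  # final save once training completes
    return state
```

Running `train` a second time with the same `path` picks up from the last saved step, which is the behavior a fallback instance needs after a reclaim.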

How much can I realistically save with these methods?

Teams that combine scheduling, autoscaling, and spot instances typically save 50-75% on their generative AI cloud bills. Some report up to 90% savings on training workloads alone. The key is using all three together. Using just one method might save 15-30%. Layering them multiplies the impact.

Do I need to buy expensive AI cost tools?

Not at first. You can start with native cloud tools like AWS Cost Explorer, Azure Cost Management, or Google Cloud’s billing reports. Tag your resources, set up simple schedules, and enable caching manually. Only invest in tools like nOps or CloudKeeper once you’re running multiple models and need automated budget enforcement and per-model dashboards.
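As an example of "enable caching manually", the sketch below wraps a model call in an exact-match cache keyed on a normalized prompt. This is a deliberately simpler stand-in for semantic caching, which would match on embedding similarity rather than on identical text. `PromptCache` and `model_fn` are hypothetical names, and the in-memory dict could be swapped for a shared store.

```python
import hashlib


class PromptCache:
    def __init__(self, model_fn):
        self._model_fn = model_fn  # any callable that takes a prompt and returns an answer
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt):
        # Normalize case and whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt):
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: pay for one model call and remember the answer.
        self.misses += 1
        answer = self._model_fn(prompt)
        self._store[key] = answer
        return answer
```

Tracking `hits` and `misses` gives a hit rate you can check before trusting any savings estimate; a cache that rarely hits adds overhead without cutting model calls.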

What’s the biggest mistake teams make with AI cost optimization?

Treating cost as a separate concern instead of part of the AI development process. Many teams build models first, then try to fix the bill later. That’s backwards. The best teams bake cost checks into their MLOps pipeline. Every new model gets a budget. Every deployment gets a cost estimate. Every experiment has a timer. Cost isn’t a post-mortem. It’s a design constraint.

How long does it take to implement these strategies?

If you already have cloud tagging and basic DevOps in place, you can set up scheduling and caching in 2-4 weeks. Spot instance fallback and autoscaling based on AI metrics take 6-12 weeks. Teams starting from scratch (no tagging, no monitoring, no MLOps) need 12-16 weeks. The faster you tag and measure, the faster you save.

Is AI cost optimization only for big companies?

No. A startup with a single AI model can save thousands a month just by scheduling overnight training on spot instances and enabling caching. The tools are getting cheaper. AWS and Google offer free cost monitoring. The barrier isn’t size. It’s awareness. Even small teams can implement these tactics without spending a dime on software.
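Scheduling is the tactic that needs the least tooling: a usage export and cron are enough. Below is a minimal sketch of the "track usage for a week, find the quiet hours" step. The input format (pairs of hour-of-day and request count), the four-hour window, and the `retrain.py` command in the usage note are assumptions for illustration.

```python
from collections import defaultdict


def quietest_window(samples, window_hours=4):
    """Return the start hour (0-23) of the least-loaded contiguous window.

    samples: iterable of (hour_of_day, request_count) pairs, e.g. a week of hourly data.
    The window may wrap past midnight.
    """
    totals = defaultdict(int)
    for hour, count in samples:
        totals[hour % 24] += count

    def load(start):
        # Total requests seen across the window beginning at `start`.
        return sum(totals[(start + i) % 24] for i in range(window_hours))

    return min(range(24), key=load)


def cron_line(start_hour, command):
    # Standard five-field crontab entry: run daily at minute 0 of start_hour.
    return f"0 {start_hour} * * * {command}"
```

For instance, `cron_line(quietest_window(samples), "python retrain.py")` produces a crontab entry that starts the job at the top of the quietest window. Whether that window is also the cheapest depends on your provider's pricing, so check the bill after the first week.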

sumraa hussain

January 29, 2026 AT 07:28

Just tried scheduling my model training for 2 a.m. after reading this. Saved $1,200 last month. No fancy tools. Just cron. Sometimes the dumbest moves are the smartest.

Also, spot instances are basically free money if you're not running live chatbots. I wish I'd known this six months ago.

Raji viji

January 29, 2026 AT 15:12

LMAO you people are still using cron? That’s like using a horse cart to deliver a Tesla. CloudKeeper auto-scales based on token throughput, not some ancient time-based garbage. And if you’re not tagging every damn API call, you’re just throwing cash into a black hole with a ‘free AI’ sticker on it.

Also, ‘spot instances for training’? Congrats, you just lost 14 hours of work because AWS decided to ‘optimize’ your life. No checkpointing? You’re not smart, you’re reckless.

Rajashree Iyer

January 31, 2026 AT 07:17

There’s a quiet truth here, buried under all this engineering jargon: we’re not optimizing AI costs; we’re outsourcing our moral responsibility to the cloud.

Every token we save, every instance we shut off at 2 a.m.: it’s not just economics. It’s a ritual. A silent apology to the planet for burning electricity like it’s infinite. We call it ‘optimization.’ But really, we’re just learning to beg the machine for mercy.

And yet… we still feed it.

Parth Haz

February 1, 2026 AT 15:29

This is an excellent and well-structured overview of a critical issue in modern AI operations. I particularly appreciate the emphasis on integrating cost controls into the MLOps pipeline rather than treating them as an afterthought.

For teams just starting out, I recommend beginning with native cloud billing tools and tagging policies before investing in third-party platforms. The ROI on foundational practices like these is often underappreciated.

Thank you for highlighting that cost optimization enables innovation rather than restricting it. That mindset shift is essential.

Vishal Bharadwaj

February 3, 2026 AT 09:15

75% savings? Bro you’re lying. I ran a similar setup last month and my bill went UP because the autoscaler kept spinning up expensive instances when the cache missed. Also, ‘semantic caching’? You mean copy-pasting ‘Ottawa’? That’s not AI, that’s a glorified if-statement.

And spot instances? Lol. I lost 3 days of training because AWS ‘reclaimed’ my instance. Now I pay for on-demand because I’m tired of crying over lost weights.

Also, tagging? Who has time for that? I got 17 models to deploy before lunch.

anoushka singh

February 5, 2026 AT 00:10

Okay but can we talk about how exhausting it is to be a data scientist who just wants to build cool stuff but now has to be a finance manager too?

I miss the days when I could just train a model and not worry about who’s spending what. Now I’ve got budget alerts, tagging rules, and someone asking me why my ‘experimental’ model cost $800. I didn’t even know I ran it for 12 hours!

Can we just… not?

Jitendra Singh

February 6, 2026 AT 19:18

I’ve been using spot instances with checkpointing for 8 months now. It’s not perfect, but it’s the cheapest way to train large models without going broke.

One tip: set your checkpoint interval to 20 minutes. Anything less adds overhead, anything more risks too much loss.

Also, use AWS’s new built-in spot resume feature; it’s way easier than scripting your own fallback. Took me 2 days to set up. Saved me $15k this quarter.

Madhuri Pujari

February 8, 2026 AT 15:00

Oh wow. You’re all acting like you invented this. Newsflash: this has been standard practice since 2023. You’re reading a 2025 article like it’s the first time anyone ever thought of saving money.

And you think caching is ‘common sense’? I’ve been doing it since 2021. You’re not optimizing, you’re catching up.

Also, ‘sandbox budgets’? Cute. That’s what we called it when we were kids. Real teams use automated budget enforcement with hard kill switches. Not ‘$200/month’; that’s a child’s allowance.

Stop patting yourselves on the back. You’re late to the party.

Sandeepan Gupta

February 10, 2026 AT 07:49

For anyone feeling overwhelmed: start with one thing. Just one.

Find your most expensive model. Look at its usage logs. Pick one off-peak hour. Schedule it. That’s it.

Then enable caching for the top 5 most common prompts. Use a simple Redis instance if you don’t have anything else.

Then try one spot instance job. Add checkpointing. Use AWS’s built-in resume.

You don’t need CloudKeeper. You don’t need a team. You just need to stop doing everything at once. Progress > perfection.

And if you’re still not tagging? Do it today. It takes 10 minutes. Your future self will cry happy tears.