Compliance Controls for Vibe-Coded Systems: SOC 2, ISO 27001, and More

Jan 19, 2026


When your developers start typing prompts instead of code, your compliance playbook breaks. That’s the reality for companies using vibe coding: AI tools like GitHub Copilot and Cursor that generate code from natural language. It’s faster. It’s productive. But it’s also a compliance nightmare if you’re still relying on old-school controls designed for human-written code.

Why Traditional Compliance Frameworks Fail with Vibe Coding

SOC 2 and ISO 27001 were built for a world where every line of code was written by a person. Auditors expected to see commit logs, code reviews, and documented change requests. With vibe coding, code appears out of nowhere: it is generated by an AI model trained on public repositories, sometimes with no clear human authorship. The audit trail? Gone.

Take SOC 2’s Processing Integrity criterion. It requires that systems produce accurate, complete, and timely results. But what if an AI generates code that passes all unit tests but silently leaks API keys? Or introduces a logic flaw that only triggers under load? Traditional tools often miss these problems. A 2024 benchmark from Contrast Security showed that its AI-specific monitoring caught 89% of vulnerabilities in AI-generated code. Legacy SAST tools? Only 62%.

ISO 27001 demands documented controls and traceability. But if your developer types “Write a function to fetch user data,” and the AI returns code that connects directly to the database with hardcoded credentials, who’s responsible? The developer? The AI? The prompt engineer? Without a system that logs every prompt, every generated snippet, and every modification, you can’t answer that question during an audit.

What Vibe Coding Compliance Actually Requires

Compliant vibe coding isn’t about adding another tool. It’s about rebuilding your software development lifecycle from the inside out. Here’s what it looks like in practice:

- IDE-level enforcement: Controls must activate as code is being written, not after it’s committed. Tools like Knostic Kirin block vulnerable dependencies in real time, stopping 97.3% of risky packages before they ever reach your repo.

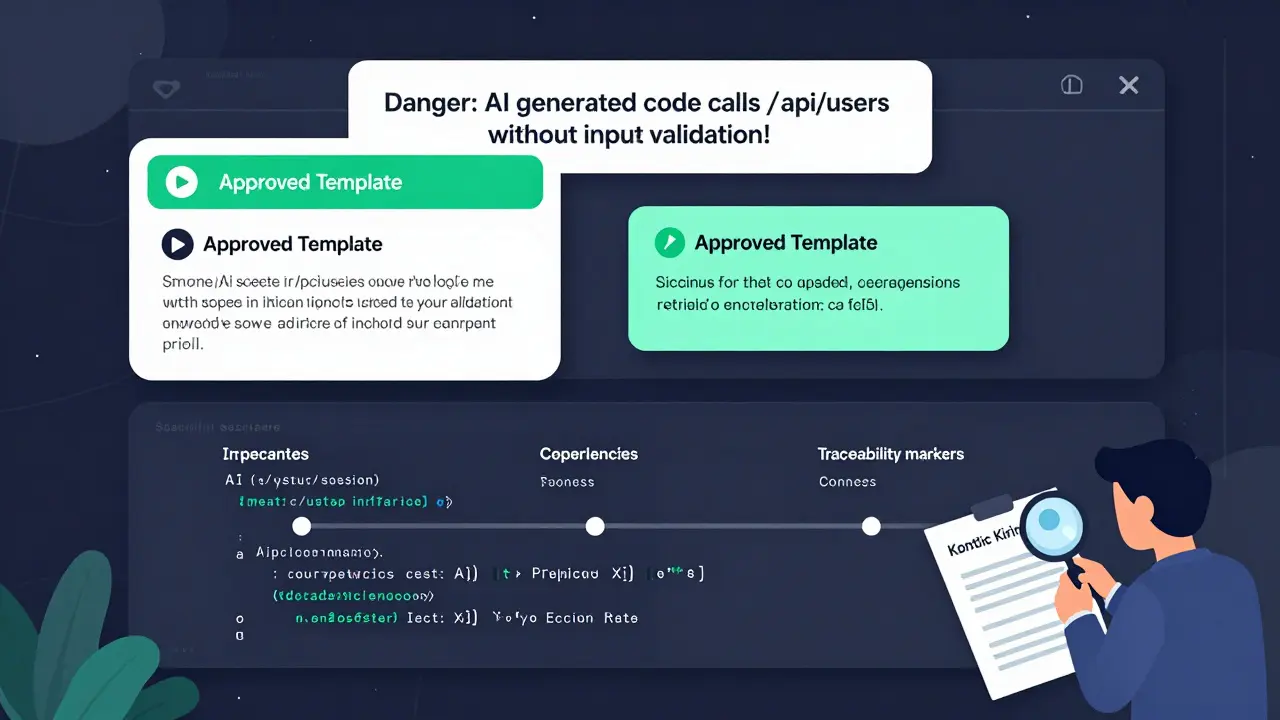

- Full audit trails: Every AI-generated line must be tied to a specific prompt, user, timestamp, and reason. Systems like Kirin capture 275+ data points per change, automatically mapping them to SOC 2 trust principles.

- Secrets scanning in development: AWS secrets, API keys, and passwords must be scanned at the IDE level. Legit Security’s framework requires 100% credential detection before code leaves the developer’s machine.

- Prompt governance: 68% of compliance failures come from poorly written prompts. Teams now use validated templates-like “Generate a secure API endpoint that validates input and uses environment variables for credentials”-to reduce risky outputs by 63%.
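The secrets-scanning requirement can be made concrete with a small check that runs in an editor hook or pre-commit step before generated code leaves the developer’s machine. This is a minimal sketch, not Legit Security’s framework or any vendor’s rule set. The two regular expressions, the `scan_snippet` and `gate` names, and the policy of blocking on any match are illustrative assumptions. Production scanners typically rely on far larger, tuned rule sets.

```python
import re
from dataclasses import dataclass
from typing import List

# Illustrative rules only (assumption); real scanners ship much larger rule sets.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Quoted literals assigned to credential-like names, e.g. password="..."
    "hardcoded_credential": re.compile(
        r"(?i)\b(password|passwd|api[_-]?key|secret)\b\s*[:=]\s*['\"][^'\"]{4,}['\"]"
    ),
}


@dataclass
class Finding:
    line_no: int  # 1-based line number within the snippet
    rule: str     # which pattern matched (the secret itself is not stored)


def scan_snippet(code: str) -> List[Finding]:
    """Check each line of an AI-generated snippet against every secret pattern."""
    findings = []
    for line_no, line in enumerate(code.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append(Finding(line_no, rule))
    return findings


def gate(code: str) -> bool:
    """Return True only if no pattern matched, i.e. the snippet may leave the machine."""
    return not scan_snippet(code)
```

Running `scan_snippet` on generated code such as `connect(password="hunter2!")` reports a `hardcoded_credential` finding for that line. `gate` then turns any finding into a block. Findings deliberately omit the matched text so the scanner’s own output does not leak the secret.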

How SOC 2 and ISO 27001 Adapt to AI-Generated Code

Neither SOC 2 nor ISO 27001 officially mentions AI-generated code. But that doesn’t mean they don’t apply. Regulators and auditors are interpreting existing controls through a new lens.

For SOC 2, the key is Processing Integrity. If your AI-generated code introduces defects that impact system behavior, you’re non-compliant. The solution? Automated evidence mapping. Knostic’s Kirin 3.0 (beta in early 2025) auto-maps AI code changes to SOC 2 criteria with 95% accuracy. Instead of spending weeks manually correlating prompts to code, auditors get a clean report showing exactly which AI-generated functions meet each control.

ISO 27001’s Annex A controls around access control, asset management, and incident response are now being applied to AI artifacts as “digital assets.” That means:

- AI-generated code must be inventoried like any other software component.

- Access to AI coding tools must follow the same approval workflows as database access.

- Incident response plans must include steps to trace and remediate AI-generated vulnerabilities.
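One way to act on these requirements is to keep an asset register for AI output. This is a sketch built on a hypothetical schema (`AIAssetRecord`, `register`, `unreviewed`, `export_jsonl` are invented names). It ties each generated snippet to its prompt, user, tool, timestamp, and reason, fingerprints the code with SHA-256, and tags it with control references. The IDs in `DEFAULT_CONTROLS` are illustrative: SOC 2 CC8.1 covers change management, and ISO/IEC 27001:2022 A.5.9 and A.8.32 cover asset inventory and change management. Confirm them against your own audit scope.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Illustrative control tags (assumption); map to your own control set.
DEFAULT_CONTROLS = ["SOC2 CC8.1", "ISO27001 A.5.9", "ISO27001 A.8.32"]


@dataclass
class AIAssetRecord:
    """One inventory entry for an AI-generated change (hypothetical schema)."""
    file_path: str
    code_sha256: str  # fingerprint of the generated snippet
    prompt: str
    user: str
    tool: str
    reason: str
    reviewed_by: Optional[str] = None  # named human reviewer, if any
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    controls: List[str] = field(default_factory=lambda: list(DEFAULT_CONTROLS))


def register(inventory: List[AIAssetRecord], file_path: str, code: str,
             prompt: str, user: str, tool: str, reason: str) -> AIAssetRecord:
    """Fingerprint the generated code and append it to the asset inventory."""
    digest = hashlib.sha256(code.encode("utf-8")).hexdigest()
    record = AIAssetRecord(file_path, digest, prompt, user, tool, reason)
    inventory.append(record)
    return record


def unreviewed(inventory: List[AIAssetRecord]) -> List[AIAssetRecord]:
    """Entries with no named reviewer are open evidence gaps."""
    return [r for r in inventory if r.reviewed_by is None]


def export_jsonl(inventory: List[AIAssetRecord]) -> str:
    """Serialize the inventory as JSON Lines for an evidence store or SIEM."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in inventory)
```

Listing `unreviewed` entries shows evidence gaps before an auditor finds them. JSON Lines is one simple, append-friendly export format, not a requirement of either framework.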

Real-World Failures and Wins

A healthcare startup in Seattle failed its HIPAA audit in late 2024 because an AI-generated function accidentally logged patient names in debug logs. The CTO told auditors, “The developer didn’t write that.” But they couldn’t prove whether the AI or the prompt caused it. No audit trail. No answer. They lost their certification.

Meanwhile, Capital One’s engineering team switched to Knostic Kirin. Before: 20 days to collect SOC 2 evidence. After: 3 days. Why? Because every AI-generated change was automatically logged, tagged, and mapped to compliance controls. Their auditor didn’t ask for a single manual file.

Teams using standard compliance tools saw 43% more audit findings in development controls than those with vibe coding-specific controls. The difference? Shift-left security: blocking vulnerabilities while the code is being typed, not after it’s merged into main.

Implementation Roadmap: 4 Phases to Get There

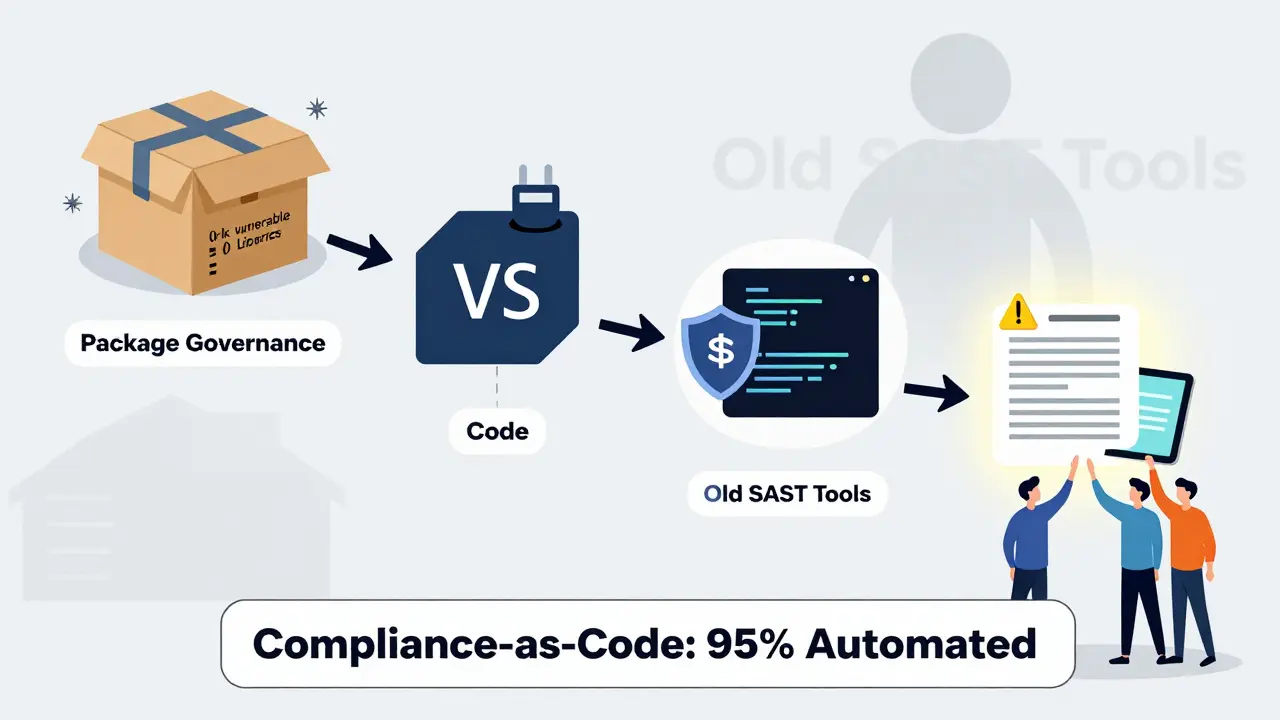

You don’t flip a switch. You build this over time.

- Package governance (2-4 weeks): Scan dependencies in real time. Block known vulnerable libraries before they’re added. Use NVD feeds updated daily.

- Plugin control (1-3 weeks): Deploy IDE plugins for VS Code and JetBrains. Enforce secrets scanning and prompt templates. Require approval for AI-generated code in sensitive modules.

- In-IDE guardrails (3-5 weeks): Add contextual warnings. Example: “You’re generating code that calls /api/users. Are you validating input? Are credentials stored securely?”

- Audit automation (4-6 weeks): Integrate with your SIEM. Auto-generate compliance reports tied to SOC 2 and ISO 27001 controls. Eliminate manual evidence collection.
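Package governance can start by checking pinned dependencies against a local advisory snapshot before they are added. This sketch assumes a requirements-style file and a hypothetical `ADVISORIES` table of exact vulnerable versions (the `examplelib` entry is made up). A real deployment would refresh that table from a feed such as NVD and handle version ranges, which this sketch does not.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical advisory snapshot: normalized package name -> known-vulnerable versions.
# A real pipeline would rebuild this regularly from a vulnerability feed such as NVD.
ADVISORIES: Dict[str, Set[str]] = {
    "examplelib": {"1.0.0", "1.0.1"},
}

# Matches "name==version" or "name[extras]==version"; anything else counts as unpinned.
PINNED = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)\s*(?:\[[^\]]*\])?\s*==\s*([^\s;#]+)")


def normalize(name: str) -> str:
    """PEP 503-style normalization so 'Example_Lib' and 'example-lib' compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def check_requirements(text: str) -> Tuple[List[str], List[str]]:
    """Return (blocked, unpinned) entries from a requirements-style file."""
    blocked: List[str] = []
    unpinned: List[str] = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("-"):
            continue  # blank lines and pip options such as "-r base.txt"
        match = PINNED.match(line)
        if match is None:
            unpinned.append(line)  # ranges can't be checked against exact versions
            continue
        name, version = normalize(match.group(1)), match.group(2)
        if version in ADVISORIES.get(name, set()):
            blocked.append(f"{name}=={version}")
    return blocked, unpinned
```

Unpinned requirements are reported separately rather than silently passed. A range like `>=2.0` cannot be checked against a list of exact versions.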

Who’s Leading the Way

The market is splitting into two camps: traditional AppSec vendors trying to retrofit their tools, and specialized players built for vibe coding.

- Knostic Kirin: 18% market share. Best for financial services. Auto-maps AI code to SOC 2 and ISO 27001.

- Contrast Security AVM: 15% market share. Strong runtime monitoring. Detects vulnerabilities in live AI-generated apps.

- ReversingLabs: 12% market share. Focuses on code provenance-proving what AI model generated what code.

Expert Warnings and the Future

Dr. Emily Chen from NIST says: “AI-generated code requires enhanced verification that goes beyond human code reviews.” She’s right. You can’t just slap a code review process on AI output. You need structured validation workflows.

Gartner predicts that by 2026, 70% of enterprises will require specialized compliance controls for AI-assisted development, up from 15% in 2024. The EU’s AI Act, effective February 2026, will require full documentation of AI development processes. If you’re not ready, you’ll be fined.

The biggest risk? Over-reliance. Teams that disable controls because they’re “too slow” are setting themselves up for failure. One developer on Reddit said: “We turned off the prompts filter to ship faster. We got breached two weeks later.”

The future? Compliance-as-code: security policies written in YAML or JSON that automatically translate into IDE guardrails. Forrester predicts 85% of vibe coding compliance will be automated by 2027. But even then, human oversight remains non-negotiable. As Contrast Security’s CTO puts it: “The most critical element is developer accountability.”

Frequently Asked Questions

Is vibe coding compliant with SOC 2?

Yes, but only if you have specialized controls in place. Standard SOC 2 audits assume human-written code. Without AI-specific audit trails, prompt logs, and real-time scanning, your AI-generated code will trigger audit findings. Platforms like Knostic Kirin auto-map AI outputs to SOC 2 trust principles, making compliance possible.

Does ISO 27001 cover AI-generated code?

ISO 27001 doesn’t mention AI, but its controls still apply. AI-generated code is now treated as a digital asset. You must inventory it, control access to the tools that generate it, and document how it’s reviewed. NIST SP 800-218’s 2025 update explicitly requires traceability from prompt to production code, which auditors now expect under ISO 27001.

Can I use GitHub Copilot and stay compliant?

You can, but only with guardrails. GitHub Copilot alone doesn’t provide audit trails, secrets scanning, or prompt logging. You need a platform like Knostic, Contrast Security, or Legit Security that integrates with Copilot to enforce policies, scan for vulnerabilities, and log every AI-generated line. Without those, you’re at risk.

What’s the biggest mistake companies make with vibe coding compliance?

Turning off controls because they slow down development. Teams that disable prompt filters or skip code reviews for AI-generated output end up with breaches, failed audits, and regulatory fines. The slowdown is temporary. The risk is permanent. The best teams use prompt templates and automated validation to reduce friction-not eliminate checks.
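Compliance-as-code with automated validation can be small. This is a minimal sketch that turns a policy document into automated checks on both the prompt and the generated code. It assumes a hypothetical JSON policy format; the field names `sensitive_paths`, `required_prompt_phrases`, and `forbidden_code_patterns` are invented for illustration and do not follow any vendor’s schema. JSON keeps the example within the standard library; the same structure could live in YAML.

```python
import json
import re
from typing import List

# Hypothetical policy document; field names are illustrative, not a vendor schema.
POLICY_JSON = r"""
{
  "sensitive_paths": ["auth/", "payments/"],
  "required_prompt_phrases": ["validates input", "environment variables"],
  "forbidden_code_patterns": ["verify\\s*=\\s*False", "\\beval\\("]
}
"""

POLICY = json.loads(POLICY_JSON)


def evaluate(policy: dict, path: str, prompt: str, code: str) -> List[str]:
    """Return human-readable violations; an empty list means the change may proceed."""
    violations = []
    # Prompt-template checks apply only to sensitive modules.
    if any(path.startswith(p) for p in policy["sensitive_paths"]):
        lowered = prompt.lower()
        for phrase in policy["required_prompt_phrases"]:
            if phrase not in lowered:
                violations.append(f"prompt for {path} should mention '{phrase}'")
    # Code-pattern checks apply to every generated change.
    for pattern in policy["forbidden_code_patterns"]:
        if re.search(pattern, code):
            violations.append(f"generated code matches forbidden pattern {pattern!r}")
    return violations
```

With this policy, the validated template from the prompt-governance section (“Generate a secure API endpoint that validates input and uses environment variables for credentials”) passes for `auth/` files. A bare prompt such as “make it work” produces violations. The forbidden-pattern check applies to every path.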

Do I need to train my developers on vibe coding risks?

Absolutely. Black Duck’s security framework shows teams that complete AI-specific training reduce compliance failures by 52%. Developers need to understand how AI models work, what prompts generate insecure code, and why human review still matters. Training isn’t optional; it’s part of your control environment.

Next Steps

If you’re using vibe coding today, start here:

- Check whether your current AppSec tools can link AI-generated code to the prompts that produced it.

- Run a test: Generate a function that handles user data. Can you trace it back to the exact prompt used?

- Ask your auditor: “How would you verify the origin of AI-generated code during a SOC 2 audit?”

- Start with one high-risk module-like authentication or payment processing-and apply IDE guardrails there first.
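The “can you trace it back?” test can be automated with a content fingerprint. This sketch uses a hypothetical append-only `PromptLog` and a normalization rule chosen for illustration: trailing whitespace and blank lines are ignored. Any other edit to the code changes the fingerprint. That is why a real audit trail also has to record each modification, not just the original generation.

```python
import hashlib
from typing import Dict, List, Optional


def fingerprint(code: str) -> str:
    """Hash code after light normalization (assumption: trailing spaces and blank lines don't matter)."""
    lines = [ln.rstrip() for ln in code.splitlines() if ln.strip()]
    return hashlib.sha256("\n".join(lines).encode("utf-8")).hexdigest()


class PromptLog:
    """Hypothetical append-only log linking prompts to the code they produced."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, str]] = []

    def record(self, prompt: str, user: str, timestamp: str, code: str) -> None:
        self._entries.append(
            {"prompt": prompt, "user": user, "timestamp": timestamp,
             "sha256": fingerprint(code)}
        )

    def trace(self, code: str) -> Optional[Dict[str, str]]:
        """Return the most recent entry whose fingerprint matches, or None."""
        target = fingerprint(code)
        for entry in reversed(self._entries):
            if entry["sha256"] == target:
                return entry
        return None
```

If `trace` returns `None` for a function that is in production, you have found the audit gap this test is meant to expose.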

Rubina Jadhav

January 19, 2026 AT 23:21

This is actually really important. I work with AI tools every day and never thought about how audits would handle it. If the code just appears, who gets blamed when something breaks? I think companies need to start tracking prompts like they track commits. Simple, but needed.

sumraa hussain

January 20, 2026 AT 09:06

OMG YES. I just had my team’s entire CI/CD pipeline blow up because Copilot generated a function that hardcoded an AWS key. We had NO IDEA where it came from. No logs. No trace. Just… code. Suddenly. Like magic. Except it wasn’t magic, it was negligence. And now we’re in audit hell. I’m screaming into the void here but someone PLEASE make IDEs enforce this before it’s too late!!!

Rajashree Iyer

January 22, 2026 AT 04:12

Think about it: this isn’t just about compliance. It’s about the erosion of authorship. When code is no longer written by a human, but whispered into existence by a machine trained on billions of lines… who owns it? Who is responsible? The developer who typed the prompt? The AI that interpreted it? The dataset that taught it? We’re not just breaking SOC 2; we’re breaking the very idea of accountability in software. The soul of engineering is vanishing, replaced by a ghost in the machine. And we’re applauding it because it’s fast.

Parth Haz

January 22, 2026 AT 12:51

While the urgency is clear, I’d caution against overestimating the risk. Many of the vulnerabilities mentioned can already be mitigated with existing tooling if properly configured. The real issue isn’t AI-generated code; it’s the lack of policy enforcement. Teams that implement structured prompt templates, mandatory scanning, and IDE-level guardrails are already compliant. The problem isn’t technology; it’s discipline. And that’s fixable.

Vishal Bharadwaj

January 23, 2026 AT 01:57

lol so now we need to audit ai prompts? next they’ll want us to log our coffee intake before writing code. you know what’s worse than ai generated code? teams that spend 6 months writing a 50 page compliance doc for something that could’ve been fixed by a 2 line regex. also knostic kirin? sounds like a bad fantasy novel. and 2.3 fte? who’s the 0.3? the intern who cries when the linter fails?

anoushka singh

January 24, 2026 AT 18:17

Wait so you’re saying I can’t just tell AI to ‘make it work’ anymore? 😱 I mean, I kinda liked that part. Also, why do I need to care about audit trails? My boss just wants it done. Can’t we just… not get caught? 😅

Jitendra Singh

January 25, 2026 AT 05:38

I’ve seen both sides. We tried disabling the guardrails to speed things up: big mistake. Got a critical leak in production. Now we use the templates and the warnings. It’s not perfect, but it’s way better than before. The key is making it part of the flow, not a barrier. Small steps. Consistency. That’s what matters.

Madhuri Pujari

January 26, 2026 AT 02:46

Ohhh so THIS is why your ‘cutting-edge’ team got breached last month? You thought ‘AI writes code, we write excuses’? Pathetic. You didn’t fail because of the tech; you failed because you treated compliance like a suggestion. And now you’re pretending this is some revolutionary insight? Newsflash: NIST didn’t invent common sense. You just ignored it until someone got fired. And yes, I’m still mad you used ‘vibe coding’ in a corporate doc. It’s not a vibe. It’s a liability.

Sandeepan Gupta

January 27, 2026 AT 00:16

Let me break this down simply: If you're using AI to generate code, you need three things: 1) A log of every prompt used, 2) A scanner that checks every line before it leaves the IDE, and 3) A policy that says ‘no exceptions.’ No, you can’t skip scanning because ‘it’s just a small change.’ No, you can’t disable the guardrail because ‘it’s slowing me down.’ This isn’t about being paranoid; it’s about being professional. Start with one module. Get it right. Then expand. And yes, training your team matters. Not because it’s trendy; it’s because if you don’t, someone else will pay the price. You’re not just writing code. You’re writing trust.