Differential Privacy in Large Language Model Training: Benefits and Tradeoffs

Jan 24, 2026

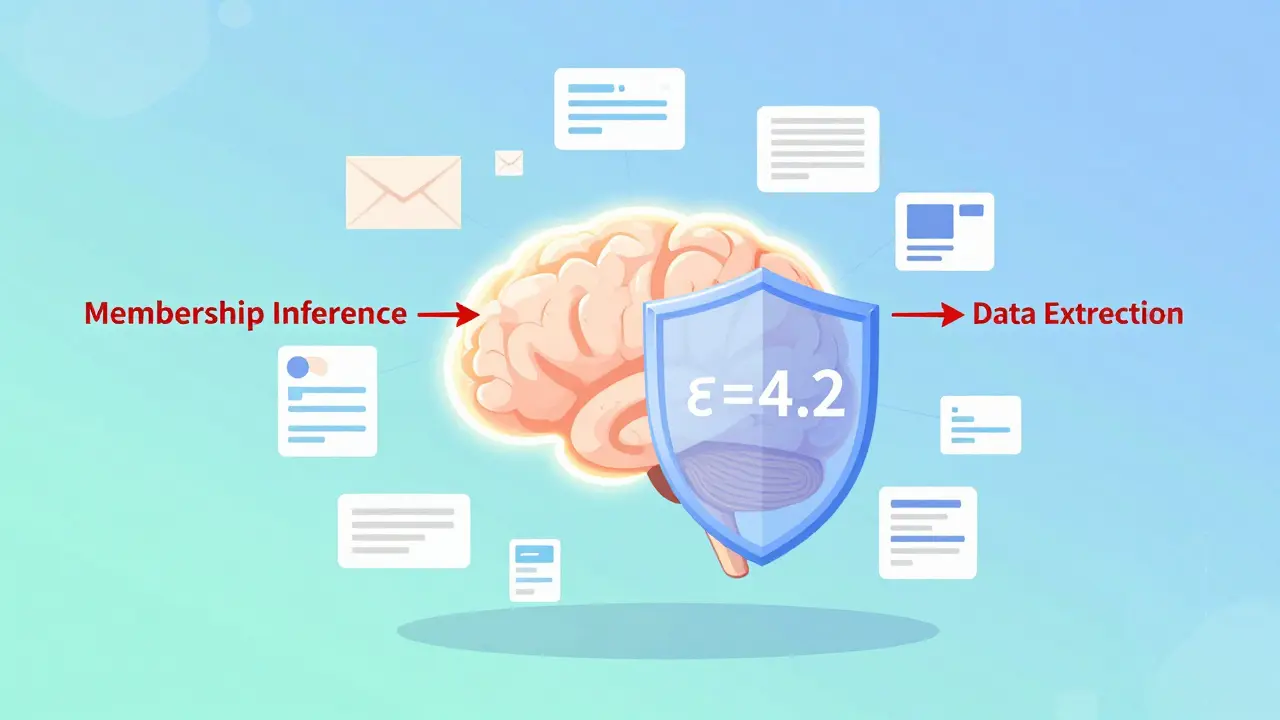

When you train a large language model on real human text (medical records, chat logs, emails, or social media posts), it doesn’t just learn patterns. It memorizes. And that memorization can be dangerous. Attackers have shown they can extract exact phrases from training data, even names and Social Security numbers, just by asking the right questions. This isn’t theoretical. It’s happened. So how do you build powerful AI without violating privacy? The answer isn’t scrubbing data or hiding names. It’s differential privacy.

What Differential Privacy Actually Does

Differential privacy isn’t about hiding data. It’s about mathematically guaranteeing that no single person’s input can be detected in the model’s output. Think of it like this: if you add one more person’s data to a dataset and the model’s behavior barely changes, then you can’t tell whether that person was included. That’s the core idea. It’s not guesswork. It’s provable.

The math behind it uses two numbers: epsilon (ε) and delta (δ). Epsilon is your privacy budget. A low ε, like 1 or 2, means strong privacy. A high ε, like 8 or 10, means weaker privacy but better model performance. Delta is the small probability that the ε guarantee fails to hold. Most systems aim for δ close to zero, like 1 in a billion. Together, they give you a concrete number you can report to auditors, regulators, or customers. No vague promises. Just numbers.

This isn’t new. It was formalized by Cynthia Dwork and others in 2006. But until recently, it was too slow and too expensive to use with billion-parameter models. Now, with tools like DP-SGD and DP-ZeRO, it’s becoming practical.

How DP-SGD Works in Practice

The most common way to apply differential privacy to LLM training is through Differentially Private Stochastic Gradient Descent (DP-SGD). Here’s how it works step by step:

- Instead of computing one gradient for the whole batch, DP-SGD calculates a gradient for each individual training example.

- Each gradient is clipped to a maximum L2 norm, usually between 0.1 and 1.0, to prevent any single example from dominating the update.

- The clipped gradients are summed, and random Gaussian noise, scaled to the clipping norm and calibrated to the target ε and δ, is added to the sum before it’s averaged and applied to the model.

- This noise makes it statistically hard to tell whether a specific text snippet was in the training data.
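The steps above can be sketched in a few lines of Python. This is a minimal illustration on a toy least-squares model, not how any particular library implements DP-SGD. The names `clip_to_norm` and `dp_sgd_step`, the toy loss, and the default values are all assumptions chosen for the example.

```python
import math
import random


def clip_to_norm(grad, clip_norm):
    """Scale a gradient down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / max(norm, 1e-12))
    return [g * scale for g in grad]


def dp_sgd_step(weights, examples, lr=0.1, clip_norm=1.0,
                noise_multiplier=1.0, rng=None):
    """One DP-SGD update for the toy loss 0.5 * (w . x - y)^2.

    `examples` is a list of (x, y) pairs, where x is a list of floats.
    """
    if rng is None:
        rng = random.Random()
    summed = [0.0] * len(weights)
    for x, y in examples:
        # Step 1: gradient for this single example only.
        residual = sum(w * xi for w, xi in zip(weights, x)) - y
        grad = [residual * xi for xi in x]
        # Step 2: clip it so no example can dominate the update.
        clipped = clip_to_norm(grad, clip_norm)
        summed = [s + c for s, c in zip(summed, clipped)]
    # Step 3: add Gaussian noise to the SUM of clipped gradients.
    # The noise standard deviation is noise_multiplier * clip_norm.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # Step 4: average over the batch and take an ordinary gradient step.
    batch_size = len(examples)
    return [w - lr * n / batch_size for w, n in zip(weights, noisy)]
```

Setting `noise_multiplier=0.0` turns the noise off, which is useful for checking the clipping logic in isolation but provides no privacy guarantee at all.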

The Real Cost: Accuracy, Speed, and Memory

You can’t get privacy for free. There are hard tradeoffs.

First, accuracy drops. At ε=3, most LLMs lose 5-15% performance on standard benchmarks like GLUE or SuperGLUE. At ε=8, the drop shrinks to 2-3%. That’s acceptable for many uses, but not for tasks that need precise recall of rare facts, like legal document analysis or medical diagnosis.

Second, training takes longer. DP-SGD typically slows training by 30-50%. Why? Because gradients can’t be batched as efficiently anymore: each example’s gradient must be computed and clipped individually. Some setups fare far worse. On an 8xA100 cluster, one team reported training time jumping from 2 days to 5 days, a 2.5x slowdown, just to hit ε=5.

Third, memory use spikes. DP-SGD needs to store per-sample gradients, not just batch gradients. Memory requirements go up by 20-40%. That means you need more GPUs, or bigger ones, to train the same model.

These aren’t theoretical limits. They’re real bottlenecks. Developers on GitHub and Reddit report spending weeks tuning noise multipliers and clipping norms just to get a working model. And even then, the tools aren’t perfect. Opacus, a popular library, has poor documentation for LLMs. Google’s tools are better, but still require deep expertise.

Why It’s Still the Gold Standard

So why bother? Because everything else fails. Data anonymization? Easy to break. Researchers have re-identified patients from "de-identified" clinical notes using just public demographic data. Heuristic masking? Hackers can reverse-engineer it. Differential privacy is the only method that offers a mathematical guarantee that holds even against attackers with perfect knowledge of the model and access to outside data.

Dr. Ilya Mironov from Google calls it "the only privacy definition that survived 15 years of cryptanalysis." That’s not marketing. That’s peer-reviewed proof. Professor Kamalika Chaudhuri at UC San Diego says it’s the only foundation for trustworthy AI in sensitive fields like healthcare and finance.

It’s not perfect. Dr. Nicolas Papernot warns it can create false confidence if misapplied. And yes, membership inference attacks, where an attacker guesses if your data was used, can still work. But differential privacy makes those attacks harder, slower, and less reliable. It raises the bar so high that most attackers give up.

Who’s Using It and Why

Adoption is growing fast, driven by regulation. The European Data Protection Board explicitly says differential privacy can help meet GDPR requirements, especially when ε ≤ 2. HIPAA in the U.S. doesn’t mandate it, but hospitals and insurers are adopting it anyway to avoid lawsuits.

Healthcare leads adoption at 42% of implementations. Financial services follow at 28%. Why? Because both deal with highly sensitive data, and both face heavy fines for breaches. A startup in Boston used differential privacy to train a clinical note summarization model with ε=4.2. It met HIPAA standards and performed nearly as well as the non-private version.

Cloud providers are catching up. Google Cloud, AWS, and Azure now offer built-in tools for private training. Pricing? Around $0.05 to $0.20 per additional hour of protected training. For enterprises, that’s cheap compared to the cost of a data breach.

What’s Next? Scaling to Trillion-Parameter Models

The biggest challenge now is scale. Current methods struggle with models over 10 billion parameters. DP-ZeRO, developed by Bu et al. in 2023, is a breakthrough. It combines differential privacy with DeepSpeed’s memory-saving techniques, letting teams train 7B+ parameter models privately for the first time.

Google’s 2023 research showed you can generate synthetic training data using differential privacy, then fine-tune models on that data. This cuts down on real data use and reduces privacy risk. It’s not perfect, but it’s a step forward.

The future includes tighter privacy accounting (to reduce noise accumulation), hardware optimized for private training, and better tools. Gartner predicts 65% of enterprise LLM deployments in regulated industries will use differential privacy by 2026. But there’s a warning. Stanford researchers say current methods may become impossible for trillion-parameter models without new algorithms. That’s the next frontier.

Getting Started: Practical Advice

If you’re thinking about implementing differential privacy:

- Start with ε between 8 and 10. Don’t try for strong privacy on day one. Get the model working first.

- Use Google’s DP libraries or AWS Private Training. Avoid Opacus unless you’re prepared to debug it yourself.

- Clip gradients between 0.5 and 1.0. Start with a noise multiplier of 1.0.

- Use microbatching (batch size of 1 or 2) to reduce memory pressure.

- Verify your privacy budget with tools like the Privacy Ledger. Don’t assume it’s working. Prove it.

- Expect to spend 40-60 hours learning the system before you train your first model.
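To make the advice about verifying your privacy budget more concrete, here is a rough sketch of the kind of calculation a privacy accountant performs. It composes the Gaussian mechanism over many steps using Rényi differential privacy and converts the result to an (ε, δ) statement. The function name `gaussian_rdp_epsilon` and the grid of orders are assumptions made for this example. The sketch also deliberately ignores the privacy amplification that comes from sampling each batch at random, so it overestimates ε compared with the accountants shipped in real libraries. Treat it as a way to build intuition, not as a replacement for them.

```python
import math


def gaussian_rdp_epsilon(noise_multiplier, steps, delta, orders=None):
    """Conservative epsilon bound for `steps` noisy updates.

    Each update is treated as a Gaussian mechanism with sensitivity 1
    and noise standard deviation `noise_multiplier`. Its Renyi DP cost
    at order alpha is alpha / (2 * noise_multiplier**2), and costs add
    up across steps. Batch subsampling is ignored (an assumption that
    makes the bound looser than a production accountant).
    """
    if orders is None:
        # Hypothetical search grid over Renyi orders alpha > 1.
        orders = [1.0 + k / 10.0 for k in range(1, 1000)]
    best = math.inf
    for alpha in orders:
        rdp = steps * alpha / (2.0 * noise_multiplier ** 2)
        # Standard conversion from Renyi DP to (epsilon, delta)-DP.
        eps = rdp + math.log(1.0 / delta) / (alpha - 1.0)
        best = min(best, eps)
    return best


if __name__ == "__main__":
    for steps in (1, 10, 100):
        eps = gaussian_rdp_epsilon(noise_multiplier=1.0, steps=steps, delta=1e-9)
        print(f"steps={steps:4d}  epsilon <= {eps:.2f}")
```

Under this simplified bound, ε grows quickly with the number of steps. Accounting for the fact that each step only sees a random sample of the data is what keeps the reported budget far lower in practice.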

Is It Worth It?

Yes, if you’re handling sensitive data. If you’re training a chatbot on public Reddit posts? Maybe not. But if you’re building a model that processes patient records, loan applications, or private messages? You have no choice. The tradeoffs are real. Slower training. Less accuracy. Higher cost. But the alternative, exposing personal data, is worse. Differential privacy doesn’t promise perfection. It promises accountability. It gives you a number you can defend. And in a world of data breaches and lawsuits, that’s priceless.

What does epsilon (ε) mean in differential privacy?

Epsilon (ε) is your privacy budget. It measures how much the model’s output can change when one person’s data is added or removed. Lower ε (like 1 or 2) means stronger privacy. Higher ε (like 8 or 10) means weaker privacy but better model performance. Think of it as a tradeoff: the tighter the privacy, the less accurate the model tends to be.
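For readers who want the formal statement, the standard definition can be written as follows. The symbols M (the randomized training procedure), D and D′ (two datasets that differ in one person’s data), and S (any set of possible outputs) are notation introduced here for illustration. A procedure M is (ε, δ)-differentially private if, for every such pair of datasets and every S:

$$
\Pr[\,M(D) \in S\,] \;\le\; e^{\varepsilon} \cdot \Pr[\,M(D') \in S\,] + \delta
$$

With ε = 0 and δ = 0 the two output distributions would have to be identical, so one person’s data could have no effect at all. Larger values of ε allow the distributions to differ more, which is why a higher ε means weaker privacy.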

Can differential privacy prevent all privacy leaks?

No. It bounds how much the model can reveal about any one individual in the training data, but it doesn’t stop all risks. Membership inference attacks, where an attacker guesses whether your data was used, can still succeed to a degree, especially at high ε. Differential privacy makes these attacks harder and less reliable, but it’s not a complete shield. It’s one layer in a broader privacy strategy.

How much does training with differential privacy slow things down?

Training with DP-SGD typically takes 30-50% longer than standard training. This is because the system must compute and clip a gradient for each individual training example, not just one per batch. Memory use also increases by 20-40%. Some setups see a much larger slowdown: on an 8-GPU cluster, training might go from 2 days to 5 days when enforcing ε=5.

Is differential privacy required by law?

No law forces you to use it, but regulators recognize it as a strong compliance tool. The European Data Protection Board says differential privacy with ε ≤ 2 can help meet GDPR requirements. In healthcare, HIPAA doesn’t mandate it, but hospitals use it to reduce legal risk. It’s not a legal requirement, but it’s becoming a de facto standard in regulated industries.

Can I use differential privacy with models over 10 billion parameters?

It’s possible, but difficult. Frameworks like DP-ZeRO now let you train 7B+ parameter models privately by combining differential privacy with memory optimization. For models over 10 billion, you’ll need specialized tools and hardware. Stanford researchers warn that current methods may become computationally infeasible for trillion-parameter models without new breakthroughs.

What’s the difference between differential privacy and data anonymization?

Anonymization removes or hides identifying details like names or IDs. But it’s fragile: researchers have re-identified people from "anonymized" medical records using public data. Differential privacy adds calibrated random noise to the training process itself, making it provably hard to tell if any single person’s data was used. It’s not about hiding data. It’s about making each person’s data nearly undetectable in the model’s behavior.

Which tools are best for implementing differential privacy in LLMs?

Google’s DP libraries and AWS Private Training for SageMaker are the most reliable for LLMs. Opacus is popular but has poor documentation for large models. IBM’s Diffprivlib is also viable. Start with Google’s tools if you’re new, since they have better examples and community support. Avoid experimenting with untested libraries unless you have deep expertise.

Meghan O'Connor

January 26, 2026 AT 03:49

This post is basically a glorified whitepaper with bullet points. I skimmed it and already know half this stuff. Why are we still pretending DP-SGD is some magic bullet? The noise ruins fine-tuning for niche domains. And don't get me started on how Opacus crashes if you look at it wrong. Someone actually used this in production? Good luck.

Mark Nitka

January 27, 2026 AT 11:51

Honestly, I think people are overcomplicating this. If you're training on medical data, you *need* differential privacy. The tradeoffs suck, sure - slower training, lower accuracy - but so does getting sued into oblivion. I’ve seen hospitals dump $2M on legal fees after a single breach. DP might cost you time, but it saves your company. Start with ε=8, prove it works, then dial it down. No need to be a hero on day one.

Kelley Nelson

January 28, 2026 AT 14:45

While the conceptual framework of differential privacy is undeniably elegant, one cannot help but observe the profound methodological deficiencies in its practical implementation. The reliance on stochastic gradient descent with noise injection introduces an unacceptable level of epistemic uncertainty into model outputs. Moreover, the assertion that ‘ε=4.2 preserves 89% accuracy’ is statistically misleading without reporting confidence intervals or variance across seed runs. One must question whether such metrics are being cherry-picked to appease regulatory bodies rather than to advance scientific integrity.

Aryan Gupta

January 30, 2026 AT 00:00

They don’t want you to know this, but DP is just a cover for Big Tech to keep training on your data while pretending they’re ‘privacy-compliant.’ The noise? It’s not random - it’s a backdoor. They add just enough to fool auditors but still let the model remember enough to predict your behavior. I’ve seen internal docs from Google - they use DP to train on private messages, then sell the patterns to advertisers. It’s not privacy. It’s psychological profiling with math. And they’re calling it ‘science.’

Fredda Freyer

January 31, 2026 AT 16:04

What’s missing here is the human context. Differential privacy isn’t just a technical fix - it’s a philosophical stance. It says: ‘Your data matters enough that we’ll sacrifice performance to protect you.’ That’s radical in a world where data is treated like oil. But here’s the catch: the math only works if you don’t lie about ε and δ. I’ve seen teams report ε=2 when they actually used ε=7 because the model was useless otherwise. That’s not ethics - that’s fraud. Real DP requires transparency, not just compliance. And if you’re not auditing your privacy budget with a ledger, you’re not doing it. Period.

Also - yes, it slows things down. But that’s not a bug. It’s a feature. It forces you to ask: ‘Do I really need to train on this person’s private email?’ Sometimes the answer is no. And that’s the point. DP doesn’t just protect data - it makes you reconsider why you’re collecting it in the first place.

Gareth Hobbs

February 2, 2026 AT 08:42