Evaluating Internal Deliberation Costs in Reasoning Large Language Models

Aug 31, 2025

Most people think of large language models as fast text generators. You ask a question, they spit out an answer. Simple. But behind the scenes, a new generation of models - called reasoning large language models (LRMs) - is doing something very different. They don’t just answer. They think. They pause. They backtrack. They evaluate options. And all of that thinking comes at a price.

If you’re considering using these models for business, you need to understand one thing: the cost of internal deliberation isn’t just a technical detail. It’s a budget breaker.

What Exactly Is Internal Deliberation?

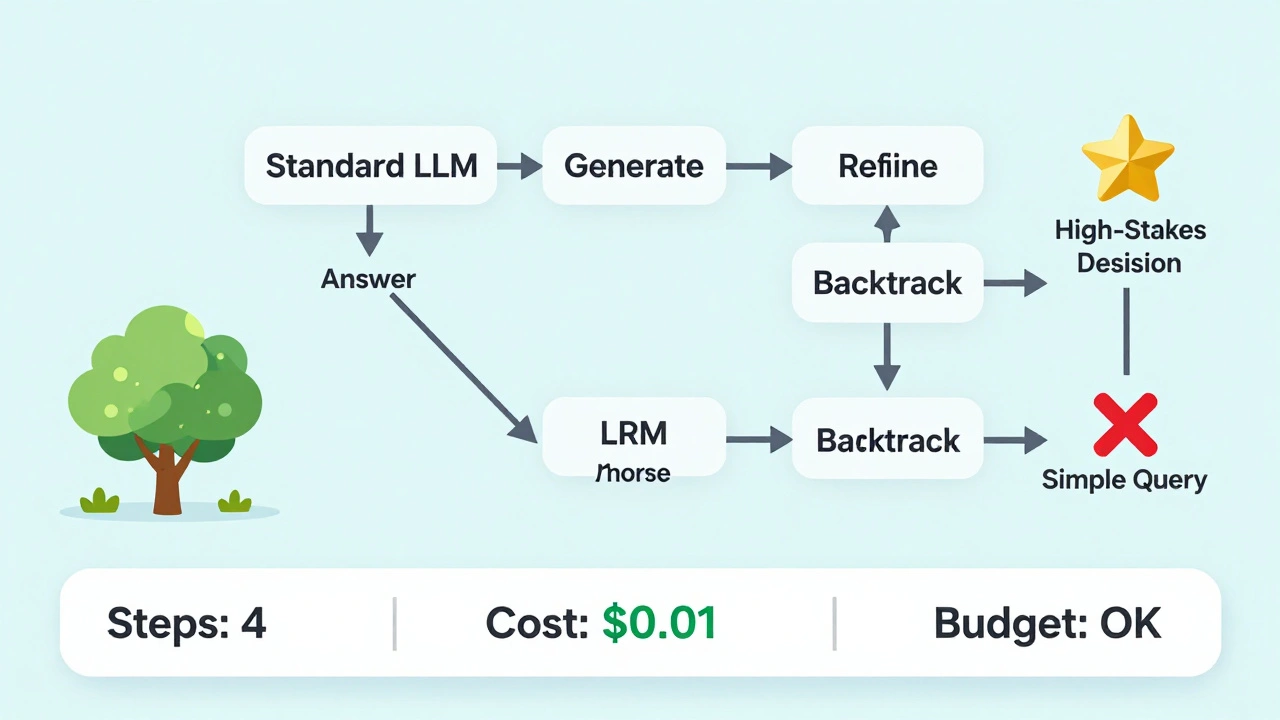

Standard LLMs like GPT-4 or Claude 3 generate responses in one pass. They predict the next word, then the next, until the answer is done. No planning. No revising. Just generation.

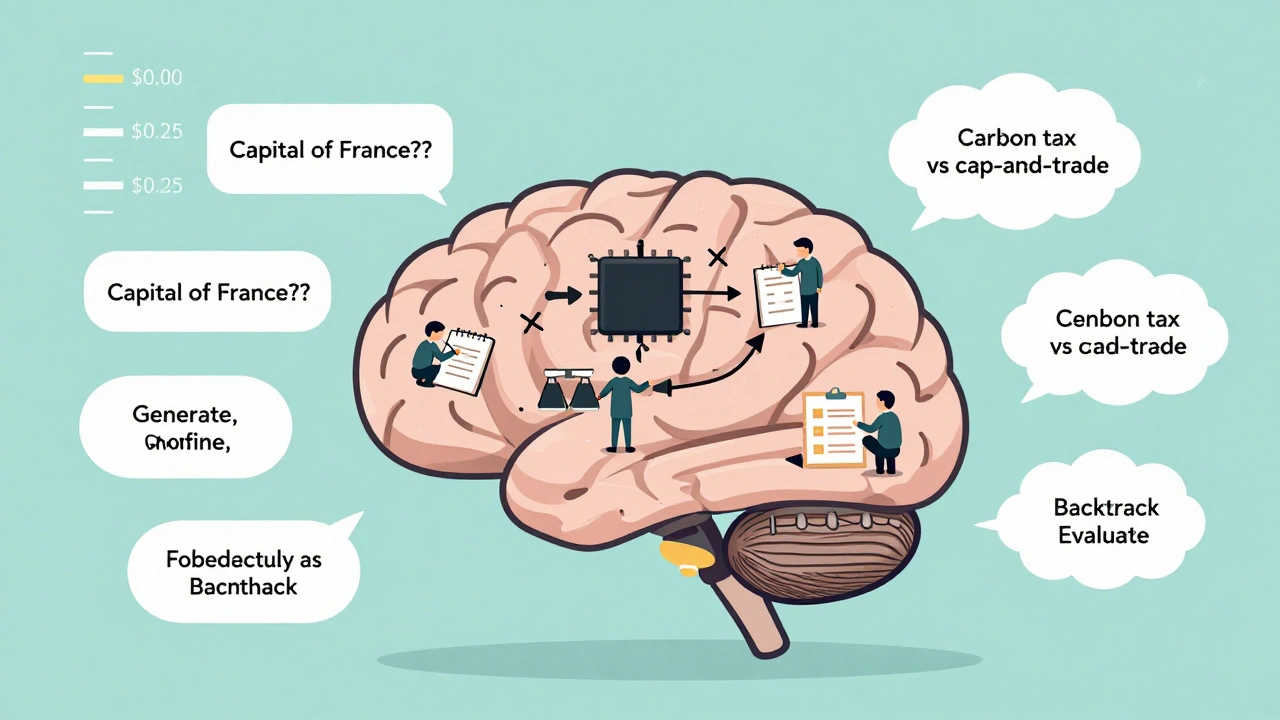

LRMs are different. They break tasks into steps. They might first generate a hypothesis. Then refine it. Then check for contradictions. Then compare alternatives. Then pick the best one. Each of these steps - called deliberation actions - requires extra tokens, extra memory, and extra compute time.

Researchers at Anthropic and Google DeepMind call these steps operators: Generate, Refine, Aggregate, Select, Backtrack, Evaluate. Think of them like a human working through a complex problem on paper. You don’t just write the final answer. You scribble notes, cross out ideas, re-read the question, try again. That’s what LRMs do - and it’s expensive.

The Real Cost of Thinking

Let’s say you ask a standard LLM: “What’s the capital of France?” It uses about 20 tokens. At current pricing, that costs $0.000002.

Now ask an LRM the same question - but it’s configured to “reason deeply.” It might generate 100 tokens just to confirm it’s not missing context. Maybe it checks if “France” refers to the country or a company. It evaluates possible ambiguities. It backtracks because it’s unsure about the spelling. Now you’re at 100 tokens. That’s 5x more. $0.000010. And you got the same answer: Paris.
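The arithmetic behind this comparison can be sketched in a few lines. The per-token price below is not a quoted provider rate; it is simply the rate implied by the example above ($0.000002 for 20 tokens), and `query_cost` is a hypothetical helper.

```python
# Price implied by the example above: $0.000002 for 20 tokens.
# This is an assumption derived from the article, not a published rate.
PRICE_PER_TOKEN = 0.000002 / 20

def query_cost(tokens: int, price_per_token: float = PRICE_PER_TOKEN) -> float:
    """Return the dollar cost of a response with the given token count."""
    return tokens * price_per_token

standard = query_cost(20)    # one-pass answer
reasoning = query_cost(100)  # same answer after deliberation

print(f"standard:  ${standard:.6f}")
print(f"reasoning: ${reasoning:.6f}")
print(f"ratio:     {reasoning / standard:.1f}x")
```

The ratio depends only on the token counts, so the 5x overhead holds at any per-token price.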

That’s the problem. For simple queries, LRMs add cost without adding value. Emergent Mind’s 2025 data shows 63% of routine business questions processed through LRMs cost 4.7x more than they should.

But here’s where it gets interesting.

Ask an LRM: “Compare the economic impacts of carbon tax versus cap-and-trade systems over 20 years, factoring in inflation, job displacement, and international competitiveness.” A standard LLM might give you a shallow, inaccurate summary. An LRM? It breaks it down. It pulls data points. It models scenarios. It checks for logical gaps. It refines its conclusion twice. It might use 2,500 tokens. That’s $0.25 - way more than a simple answer.

But here’s the kicker: that $0.25 answer is 35% more accurate than the standard model’s. And if you’re making a billion-dollar policy decision, that accuracy is worth it.

Costs Add Up Fast - And Not Linearly

Each reasoning step doesn’t just add cost. It multiplies it.

One step? 1.8x the cost of a standard response. Two steps? 3.1x. Five steps? 4.2x. Ten steps? 12.7x. Why? Because every step requires the model to hold more state in memory. It’s like keeping ten open tabs in your browser - each one uses more RAM, slows things down, and drains your battery faster.
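To estimate the multiplier for step counts the figures above don't cover, one rough option is to interpolate between the quoted points. The sketch below assumes linear interpolation, which the article does not claim; `estimated_multiplier` and `base_cost` are hypothetical names.

```python
from bisect import bisect_left

# (steps, cost multiplier) pairs quoted in the text above.
QUOTED = [(1, 1.8), (2, 3.1), (5, 4.2), (10, 12.7)]

def estimated_multiplier(steps: int) -> float:
    """Linearly interpolate between the quoted points.

    Assumption: the article gives no formula, so this is only a rough
    estimate, and step counts outside 1-10 are rejected.
    """
    xs = [s for s, _ in QUOTED]
    if not xs[0] <= steps <= xs[-1]:
        raise ValueError("only 1-10 steps are covered by the quoted figures")
    i = bisect_left(xs, steps)
    if xs[i] == steps:
        return QUOTED[i][1]  # exact quoted value
    (x0, y0), (x1, y1) = QUOTED[i - 1], QUOTED[i]
    return y0 + (y1 - y0) * (steps - x0) / (x1 - x0)

base_cost = 0.01  # hypothetical cost of a standard response, in dollars
for steps in (1, 3, 7, 10):
    print(steps, round(base_cost * estimated_multiplier(steps), 4))
```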

A March 2025 University of Pennsylvania study found that LRMs need 40-60% more GPU memory than standard models just to maintain reasoning state. SandGarden’s August 2025 analysis showed deep reasoning queries use 2.3-4.7x more electricity. A single complex task can consume 0.85 watt-hours - enough to power a phone for 15 minutes.

And it gets worse. If the model gets stuck in a loop - say, it keeps refining the same point without progress - costs can spike 500-2,000%. One Reddit user reported a single query costing $17.30 in compute. That’s more than the cost of 1,000 simple queries.

Who’s Paying the Bill?

Cloud providers have taken notice. OpenAI charges $0.012 per 1,000 tokens for reasoning mode - four times the standard rate. Anthropic’s April 2025 pricing puts token costs at $0.0001 per 1,000 tokens for reasoning tasks. That’s not just a little more. That’s a big jump.

On-premise? Even steeper. A single-node LRM server with 8 NVIDIA RTX 5090-32G GPUs costs $128,000 upfront. Monthly electricity? $1,850 - assuming it runs 160 hours a month. That’s not a lab experiment. That’s a production system.
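For a rough sense of what those on-premise figures mean per hour of use, the upfront price can be spread over the hardware's lifetime. The 36-month lifetime below is an assumption, not a figure from the article, and the sketch ignores staffing, cooling, and maintenance.

```python
HARDWARE_COST = 128_000      # upfront cost in dollars, from the text
MONTHLY_ELECTRICITY = 1_850  # dollars per month, from the text
HOURS_PER_MONTH = 160        # usage assumed in the text
AMORTIZATION_MONTHS = 36     # assumption: three-year hardware lifetime

def monthly_cost(amortization_months: int = AMORTIZATION_MONTHS) -> float:
    """Amortized hardware cost plus electricity, per month."""
    return HARDWARE_COST / amortization_months + MONTHLY_ELECTRICITY

def hourly_cost(amortization_months: int = AMORTIZATION_MONTHS) -> float:
    """Monthly cost divided by the hours the server actually runs."""
    return monthly_cost(amortization_months) / HOURS_PER_MONTH

print(f"monthly: ${monthly_cost():,.2f}")
print(f"hourly:  ${hourly_cost():,.2f}")
```

Changing `AMORTIZATION_MONTHS` shows how sensitive the hourly figure is to the lifetime assumption.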

And the numbers are real. A Fortune 500 financial firm was spending $287,000 a month on LRM reasoning before they capped the steps per query. After implementing budget limits, they dropped to $92,000 - keeping 92% of the accuracy. That’s a 68% cost cut.

Another company had an earnings analysis query cost $214 in compute. More than their entire monthly budget for that service line.

When Do LRMs Pay Off?

LRMs aren’t for everything. They’re not for customer service chatbots. Not for FAQ bots. Not for summarizing news.

They’re for tasks where the cost of being wrong is higher than the cost of thinking.

Healthcare diagnostics. Financial risk modeling. Legal contract analysis. Policy evaluation. Scientific hypothesis generation. These are the sweet spots.

Anthropic’s internal metrics show that for complex analytical tasks, LRMs deliver 28-42% higher accuracy per unit of cost - because they don’t need to retry. A standard LLM might fail three times before getting it right. An LRM gets it right the first time - even if it takes longer.
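The retry argument can be made concrete with a small expected-cost calculation. This is a sketch under stated assumptions: attempts are independent, failures are detected at no extra cost, and the prices and success rates are hypothetical values chosen to mirror "fails three times, then succeeds".

```python
def expected_cost(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend until the first success.

    Assumes independent attempts, so the expected number of attempts
    is 1 / success_rate (geometric distribution).
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate

# Hypothetical figures: the standard model is cheap but succeeds 1 time in 4;
# the LRM costs three times as much per attempt but succeeds on the first try.
standard = expected_cost(cost_per_attempt=0.01, success_rate=0.25)
lrm = expected_cost(cost_per_attempt=0.03, success_rate=1.0)

print(f"standard LLM: ${standard:.2f}, LRM: ${lrm:.2f}")
```

Under these assumptions the LRM is cheaper whenever its cost multiple is smaller than the number of attempts the standard model needs.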

The break-even point? Around 17-23 reasoning steps per task. Below that? Stick with standard LLMs. Above it? LRM wins.

How to Control the Costs

Most companies fail not because LRMs are too expensive - but because they use them everywhere.

The smart approach? Hybrid systems.

Use a lightweight LLM to classify the query first. Simple question? Route it to a cheap model. Complex analysis? Send it to the LRM.
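A minimal sketch of that routing step might look like the following. Keyword matching stands in for the lightweight classifier model here, and the marker list, model names, and word threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical markers of analytical work. A real system would use a small
# classifier model rather than keyword matching.
COMPLEX_MARKERS = ("compare", "evaluate", "forecast", "risk", "trade-off")

@dataclass
class Route:
    model: str   # placeholder model name, not a real product
    reason: str  # why the query was routed this way

def route_query(query: str, max_simple_words: int = 15) -> Route:
    """Send short queries without analytical markers to the cheap model."""
    text = query.lower()
    words = len(text.split())
    hits = [marker for marker in COMPLEX_MARKERS if marker in text]
    if hits or words > max_simple_words:
        return Route("reasoning-model", f"markers={hits}, words={words}")
    return Route("standard-model", "short query with no analytical markers")

print(route_query("What's the capital of France?").model)
print(route_query("Compare carbon tax and cap-and-trade over 20 years").model)
```

Logging the `reason` alongside each decision makes it easier to audit misrouted queries later.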

Then, set hard limits. Google’s December 2025 guide recommends:

- 3-5 steps for factual analysis

- 5-8 steps for strategic planning

- 8-12 steps for policy evaluation

Anything beyond that? Diminishing returns kick in. Stanford’s Professor David Kim found that beyond five steps, cost inefficiency hits 80%.
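The step limits listed above can be enforced with a simple lookup before a query is dispatched. The task-type keys and the fallback for unknown task types are assumptions made for this sketch.

```python
# (recommended minimum, recommended maximum) steps per task type,
# taken from the list above. The dictionary keys are hypothetical labels.
STEP_BUDGETS = {
    "factual_analysis": (3, 5),
    "strategic_planning": (5, 8),
    "policy_evaluation": (8, 12),
}

DEFAULT_MAX_STEPS = 5  # assumption: unknown task types get the tightest cap

def cap_steps(task_type: str, requested_steps: int) -> int:
    """Clamp a requested step count to the budget for its task type."""
    _, max_steps = STEP_BUDGETS.get(task_type, (0, DEFAULT_MAX_STEPS))
    return max(0, min(requested_steps, max_steps))

print(cap_steps("factual_analysis", 9))    # capped at the factual-analysis maximum
print(cap_steps("policy_evaluation", 10))  # within budget, left unchanged
```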

Use tools like Anthropic’s Reasoning Cost Dashboard or Microsoft’s Azure Reasoning Optimizer. They track token usage per step, flag runaway processes, and auto-shutdown loops.
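If no such tool is available, the same three behaviors (per-step token tracking, runaway flags, loop shutdown) can be approximated in application code. The class below is a hypothetical sketch and does not reflect the API of any vendor tool; it treats a step whose text repeats too often as a loop.

```python
from collections import Counter

class ReasoningMonitor:
    """Tracks tokens per step and flags runaway reasoning (illustrative only)."""

    def __init__(self, max_total_tokens: int, max_repeats: int = 2):
        self.max_total_tokens = max_total_tokens
        self.max_repeats = max_repeats
        self.tokens_per_step = []
        self.seen = Counter()

    def record(self, step_text: str, tokens: int) -> str:
        """Log one step and return 'continue', 'over_budget', or 'loop'."""
        self.tokens_per_step.append(tokens)
        key = " ".join(step_text.lower().split())  # normalize case and spacing
        self.seen[key] += 1
        if self.seen[key] > self.max_repeats:
            return "loop"
        if sum(self.tokens_per_step) > self.max_total_tokens:
            return "over_budget"
        return "continue"

monitor = ReasoningMonitor(max_total_tokens=1_000)
for text in ["Refine estimate", "Refine estimate", "Refine estimate"]:
    status = monitor.record(text, tokens=200)
    print(text, "->", status)
    if status != "continue":
        break  # the caller is responsible for stopping the model
```

Exact-text matching is a crude loop signal; a production system would likely compare steps by similarity rather than equality.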

And train your team. Prompt engineering for LRMs isn’t about asking better questions. It’s about constraining the thinking. “Think step by step, but limit yourself to four reasoning actions.” That’s the new prompt.

The Future: Cheaper Thinking

LRMs aren’t going away. But they’re evolving.

Meta’s Llama-Reason 3.0, released in December 2025, cuts deliberation costs by 40% without losing accuracy. It uses “reasoning compression” - like deleting unnecessary notes from your scratch paper.

Microsoft’s Azure Reasoning Optimizer reduces costs by 52% by dynamically choosing which operators to use - skipping steps that don’t add value.

And coming in Q1 2026: Adaptive Reasoning Budget. This system will monitor the cost of each step in real time and decide: “Is this next thought worth $0.03?” If not, it stops.
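The article gives no detail on how such a system would make that decision. One plausible stopping rule, sketched here purely as an illustration, is to continue only while the estimated value of the next step exceeds its cost. The gain estimates, `value_per_point`, and the function name are all hypothetical.

```python
def run_with_budget(step_gains, step_cost, value_per_point):
    """Take reasoning steps until the next one is not worth its cost.

    step_gains: estimated accuracy gain (percentage points) of each
        successive step; how to estimate these is outside this sketch.
    step_cost: dollars per step (the text's example uses $0.03).
    value_per_point: dollars one accuracy point is worth to the caller.
    Returns (steps_taken, dollars_spent).
    """
    steps_taken = 0
    spent = 0.0
    for gain in step_gains:
        if gain * value_per_point < step_cost:
            break  # the next thought is not worth its price
        steps_taken += 1
        spent += step_cost
    return steps_taken, spent

steps, spent = run_with_budget(
    step_gains=[5.0, 2.0, 0.5, 0.1],  # diminishing returns per step
    step_cost=0.03,
    value_per_point=0.02,
)
print(f"took {steps} steps, spent ${spent:.2f}")
```

Raising `value_per_point` for high-stakes queries lets the same rule permit deeper reasoning where accuracy matters more.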

Industry forecasts predict a 65-75% drop in deliberation costs over the next 18 months. But that doesn’t mean you can ignore cost now. The models are still expensive. The risk is still high.

Final Thought: Don’t Think More. Think Smarter

Reasoning large language models are powerful. But power without control is dangerous.

They’re not a replacement for standard LLMs. They’re a specialized tool - like a scalpel instead of a hammer.

Use them where the stakes are high. Where accuracy justifies cost. Where the alternative is a wrong decision with real consequences.

And always, always - set limits. Monitor usage. Audit your reasoning logs. Because the most expensive thing about LRMs isn’t the hardware. It’s the assumption that more thinking is always better.

It’s not. Sometimes, the best answer is the one you don’t overthink.

What exactly are internal deliberation costs in reasoning LLMs?

Internal deliberation costs refer to the extra computational resources - tokens, memory, time, and energy - required when a reasoning large language model (LRM) performs multi-step thinking. Unlike standard LLMs that generate answers in one pass, LRMs use operators like Generate, Refine, Backtrack, and Evaluate to simulate human-like reasoning. Each step consumes additional tokens and GPU memory, leading to significantly higher costs per query.

How much more expensive are reasoning LLMs compared to standard ones?

For simple queries, reasoning LLMs cost 3-5x more than standard LLMs - often without better results. For complex tasks requiring 5-10 reasoning steps, costs can be 4-12x higher. However, on high-stakes analytical tasks, LRMs can be 28-42% more cost-efficient per unit of accuracy because they reduce retries. OpenAI charges $0.012 per 1,000 tokens for reasoning mode, compared to $0.003 for standard responses.

When should I use a reasoning LLM instead of a standard one?

Use reasoning LLMs only for complex tasks where accuracy is critical and failure is costly - such as legal contract analysis, financial risk modeling, medical diagnosis support, or policy evaluation. Avoid them for simple questions like “What’s the capital of France?” or customer service FAQs. A hybrid approach - using standard LLMs for easy queries and LRMs only for tasks needing 3+ reasoning steps - saves money and improves performance.

What are the biggest risks of using reasoning LLMs in production?

The biggest risk is uncontrolled reasoning expansion - where the model gets stuck in infinite loops or generates unnecessary steps. This can cause single queries to cost $10-$20 instead of $0.01. Gartner reports 73% of enterprises without cost controls saw AI budgets spike 40-220% within six months. Other risks include high GPU memory demands, unpredictable electricity costs, and poor ROI on simple tasks.

How can I reduce the cost of reasoning in my LLM applications?

Implement reasoning budgeting: limit steps per query based on task type (e.g., 3-5 steps for factual analysis, 8-12 for policy evaluation). Use cost-monitoring tools like Anthropic’s Reasoning Cost Dashboard. Classify queries first - route simple ones to cheaper models. Adopt new techniques like Meta’s Llama-Reason 3.0, which compresses reasoning to cut costs by 40%. Train your team to write prompts that constrain thinking, not encourage it.

Is the cost of reasoning LLMs expected to go down?

Yes. Industry-wide optimizations are already cutting costs. Microsoft’s Azure Reasoning Optimizer reduces costs by 52%. Meta’s Llama-Reason 3.0 cuts deliberation costs by 40% while keeping 95% of accuracy. The upcoming Adaptive Reasoning Budget system (Q1 2026) will dynamically stop steps that aren’t worth the cost. Experts predict a 65-75% reduction in deliberation costs over the next 18 months due to better algorithms and specialized hardware.

NIKHIL TRIPATHI

December 13, 2025 AT 04:51

Been using LRMs for financial modeling at work and honestly? The cost spike is real. We had one query that ran $214 and nobody even noticed until the finance team screamed. Now we’ve got a rule: if it’s not a billion-dollar decision, it gets the cheap model. Simple.

Also, the 17-23 step break-even point? Spot on. We tested it. Below 15 steps? Standard LLM wins. Above? LRM saves us from costly mistakes. It’s not about thinking more - it’s about thinking *only when it matters*.

Shivani Vaidya

December 14, 2025 AT 15:57

The notion that more deliberation equals better outcomes is a dangerous illusion. In practice, most business queries do not require deep reasoning. The overuse of LRMs is akin to using a diamond-tipped drill to hang a picture frame.

Hybrid routing is not optional - it is essential. The cost inefficiency is not a bug; it is a feature of unregulated deployment. Discipline in prompt design and query classification is the only sustainable path forward.

Rubina Jadhav

December 14, 2025 AT 19:23

I just use the simple ones. They work fine for me.

sumraa hussain

December 15, 2025 AT 04:27

Bro. I ran a single LRM query for a 5-line summary and it cost me $17.30. I thought my GPU had gone rogue. I screamed. My cat ran away. My roommate asked if I was okay. I’m not okay. This isn’t AI. This is a financial horror movie and we’re all just extras.

And now they’re talking about ‘adaptive budgets’ like we’re not already drowning. I just want my $0.002 back.

Raji viji

December 16, 2025 AT 00:38

Anyone else notice how every ‘expert’ here is just rebranding lazy engineering as ‘reasoning’? You’re paying 10x for a model that still gets Paris wrong sometimes. And now you want us to ‘constrain thinking’? LOL. That’s not prompting - that’s giving up. If your model can’t handle a simple question without a 12-step ballet, it’s broken. Not ‘advanced’.

And don’t even get me started on those ‘cost dashboards.’ They’re just fancy spreadsheets with extra steps so you can feel like you’re in control while your cloud bill burns a hole in your wallet. Pathetic.

Rajashree Iyer

December 16, 2025 AT 21:10

Think about it - what is deliberation but the soul of the machine trying to become human? We project our own uncertainty onto these models, then punish them for mirroring our chaos.

Each token is a whispered doubt. Each backtrack, a sigh. Each evaluation, a silent prayer that this time, it’ll get it right.

And yet - we call it cost. We call it waste. We call it inefficiency.

But isn’t that the price of meaning? To think, even if no one asked you to?

Perhaps the real tragedy isn’t the $0.25 query. It’s that we’ve trained ourselves to measure wisdom in cents.

Parth Haz

December 17, 2025 AT 03:19

Great breakdown. I appreciate the data-driven approach and the clear thresholds for when to use LRMs. Many teams overlook the importance of query classification, and that’s where most cost overruns begin.

Also, the mention of Meta’s Llama-Reason 3.0 is timely. We’re testing it in our compliance pipeline and seeing 38% cost reduction with no drop in accuracy. Encouraging signs.

Keep pushing for responsible use. The future of AI isn’t just smarter models - it’s smarter deployment.

Vishal Bharadwaj

December 19, 2025 AT 00:00

Wait - so you’re saying if i ask ‘what’s 2+2’ and the model takes 10 steps to confirm it’s not a trick question, that’s ‘reasoning’? Bro. That’s not intelligence. That’s a glitch. And now you want to charge me $0.012 per token for this? I’ve seen toddlers solve problems faster and cheaper.

Also, ‘adaptive reasoning budget’? Sounds like a marketing term for ‘we gave up and added a timer.’ And don’t even get me started on the RTX 5090-32G price. That’s not hardware. That’s extortion with a GPU logo.

anoushka singh

December 19, 2025 AT 10:30

ok but like… what if i just dont care if the answer is 35% more accurate? i just want it fast and cheap. why do i need all this thinking? its not like im launching a rocket or diagnosing cancer. i just need to know if the client liked the email.

also why does everyone act like this is new? i’ve been using basic bots for 5 years and they never broke the bank. just sayin’.