How to Choose Between API and Open-Source LLMs in 2025

Oct, 7 2025

Oct, 7 2025

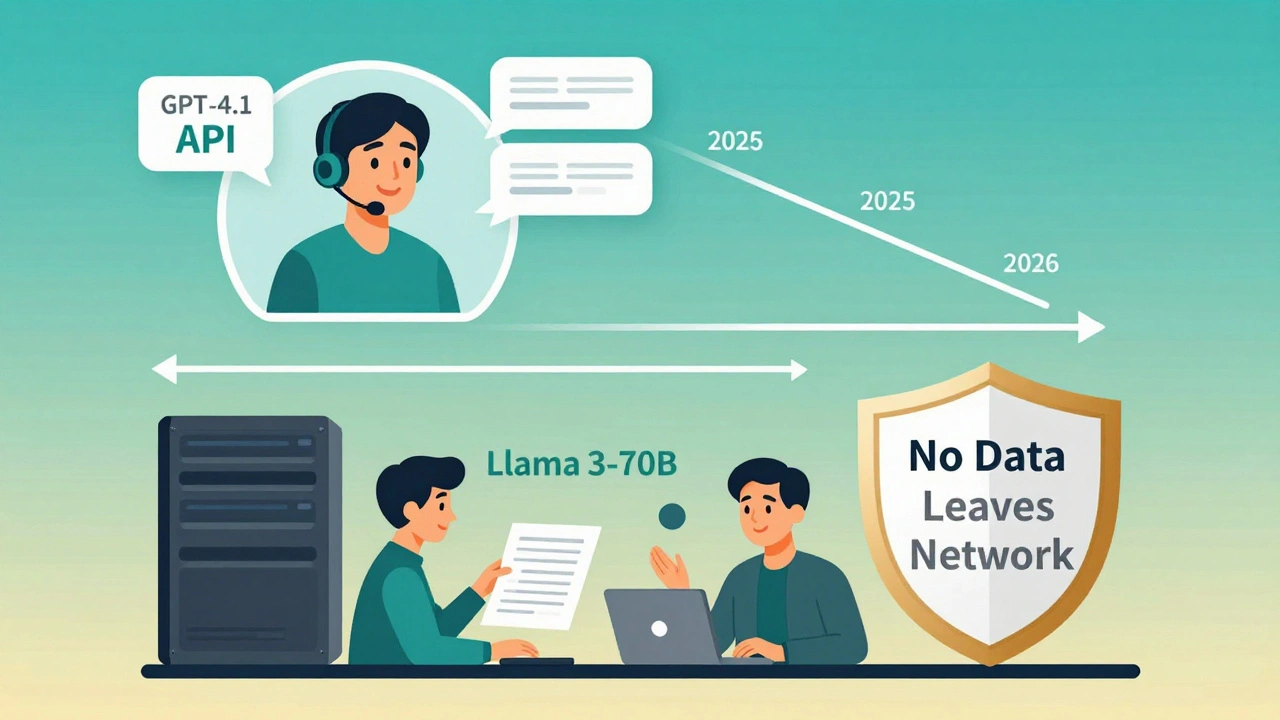

By late 2025, choosing between an API-based LLM like GPT-4.1 or an open-source model like Llama 3-70B isn’t about which one is better-it’s about which one fits your real-world needs. The performance gap has narrowed to just 3-5%, but the trade-offs in cost, control, and complexity are wider than ever. If you’re trying to decide, you’re not alone. Thousands of teams are wrestling with this right now. And the wrong choice can cost you thousands-or even derail your project.

Performance: Is the Gap Really Gone?

You’ve probably heard that open-source models are now almost as good as the big APIs. That’s mostly true. On benchmarks like MMLU (knowledge) and GPQA (reasoning), top open-source models like DeepSeek-V3 and Llama 3-70B score within 2-4 percentage points of GPT-4.1 and Claude Opus 4.1. For tasks like summarizing documents, answering FAQs, or generating marketing copy, you won’t notice the difference. But here’s the catch: that small gap matters where it counts. In medical diagnosis, legal document review, or complex coding tasks, proprietary models still outperform open-source ones by 4-6%. MIT CSAIL’s November 2025 report found that this translates to 15-22% more errors in real-world applications. If your use case involves high-stakes decisions, that margin isn’t worth risking for cost savings. Open-source models also struggle more with consistency. A GPT-4.1 response will be nearly identical across 100 runs. Llama 3 might give you five slightly different answers to the same prompt-even with the same temperature settings. That unpredictability can break workflows that rely on stable outputs.Cost: Upfront vs. Ongoing

Cost is where open-source models shine-but only if you’re ready for the setup. Proprietary APIs charge per token. GPT-4.1 costs $1.25 per million input tokens and $10.00 per million output tokens. For a customer support bot handling 250,000 queries a month, that’s $1,200 a month. Scale to a million queries? You’re looking at $5,000+. Anthropic’s Claude Opus 4.1? Even higher: $15 per million input, $75 per million output. Open-source models flip the script. You pay upfront for hardware: a single NVIDIA A100 GPU costs $10,000-$15,000. Monthly hosting? $20 for a basic CPU server, $700+ for a dedicated GPU instance. But once it’s running, your cost plateaus. Medium-sized companies report $300-$1,500/month for open-source, compared to $5,000-$20,000 for APIs at scale. That’s 86% savings, according to WhatLLM.org’s November 2025 analysis. The catch? You need engineers. A typical open-source deployment takes 2-4 weeks to set up. Most teams hire an ML engineer-salaries range from $120,000 to $180,000 a year-to manage Kubernetes, GPU memory, quantization, and model updates. If you don’t have that team, you’ll waste months troubleshooting CUDA errors or model crashes.Data Privacy and Compliance

If your data is sensitive-health records, financial transactions, legal contracts-this is the deciding factor. Proprietary APIs send your prompts and outputs to their servers. Even if they claim not to store data, you’re still trusting a third party. For companies under HIPAA, GDPR, or the EU AI Act, that’s a non-starter. InclusionCloud’s November 2025 survey found that 78% of healthcare and financial firms use open-source models specifically to avoid this risk. Self-hosting gives you full control. Your data never leaves your network. That’s why 68% of enterprises with over 1,000 employees use open-source models for internal data processing-even if they use GPT-4 for customer-facing chatbots. The EU AI Act, fully active in 2025, requires full transparency for high-risk AI systems. Proprietary models can’t meet that. You can’t inspect their weights, training data, or decision logic. Open-source? You can audit everything. That’s not just a technical advantage-it’s a legal one.

Speed and Throughput

Speed isn’t just about how fast a model answers. It’s about how many requests you can handle at once. GPT-4.1 delivers about 85 tokens per second via API. That’s fast enough for most real-time apps. But if you have 100 users hitting your system simultaneously, you’re hitting API rate limits. You might need to pay for higher tiers or add queuing systems. Self-hosted Llama 3-70B on an A100 hits 45-60 tokens per second. That’s slower, but you control the scaling. Add more GPUs? You can handle 10x the traffic without paying extra per request. No vendor lock-in. No surprise bills. For low-traffic apps-internal tools, small customer service bots-speed doesn’t matter much. For high-volume systems like call centers or real-time analytics, the difference becomes critical.Setup and Maintenance

This is where most teams get stuck. API integration? You write a few lines of code, paste your key, and go. Documentation for OpenAI’s API is rated 4.7/5 by over 1,200 developers. Support is 24/7 with a 99.9% uptime SLA. Open-source? You’re on your own. Llama 3’s documentation is community-run. GitHub discussions average 17.3 hours for a response to a critical issue. You need to know how to:- Quantize models to fit on smaller GPUs

- Optimize inference with vLLM or TensorRT

- Deploy with Docker and Kubernetes

- Monitor GPU memory leaks

- Update models without downtime

Market Trends and What’s Coming

The market is shifting fast. In Q3 2025, proprietary APIs still hold 68% of enterprise adoption. But open-source usage is growing at 142% year-over-year. Why? Because mid-sized companies are tired of vendor lock-in and unpredictable bills. New models are closing the gap even faster. Microsoft’s Phi-3.5, released in August 2025, hits 83.7% on MMLU-just 0.5% behind GPT-4.1. Mistral AI’s Mixtral 8x22B, launched in November 2024, rivals Claude Sonnet on reasoning tasks at 1/10th the cost. And the roadmap? OpenAI is launching GPT-5 mini for coding at $0.25 per million input tokens. Anthropic’s prompt caching cuts costs by 35-60% for repetitive queries. Meanwhile, Meta’s Llama 4 (expected Q1 2026) will cut inference costs by 40%. The future isn’t one or the other. It’s layered. Forward-thinking teams are using APIs for customer-facing tasks where performance matters most-and open-source for internal workflows where privacy and cost matter more.Which Should You Choose?

Here’s a simple decision tree:- Choose API LLMs if: You need the highest accuracy on complex tasks (medical, legal, research), have no ML team, want to launch in days, and can afford $1,000-$20,000/month.

- Choose open-source LLMs if: You handle sensitive data, need to comply with regulations, have a technical team (or budget to hire one), plan to scale beyond 500K queries/month, and want to lock in costs.

Frequently Asked Questions

Can open-source LLMs really match GPT-4’s performance?

For most everyday tasks-summarizing, answering questions, generating content-yes. Top open-source models like Llama 3-70B and DeepSeek-V3 score within 2-4% of GPT-4.1 on benchmarks. But for advanced reasoning, scientific analysis, or coding tasks, GPT-4.1 still leads by 4-6%. That gap can mean 15-22% more errors in real-world applications, according to MIT CSAIL’s November 2025 report.

Is open-source cheaper than using an API?

At scale, yes-by 86%. A company using GPT-4 for 1 million queries/month might pay $5,000-$20,000 monthly. Switching to Llama 3-70B on a dedicated GPU cuts that to $300-$1,500. But you pay upfront: a single A100 GPU costs $10,000-$15,000, and you need engineers to run it. If you don’t have the team, the hidden costs of delays and failures can outweigh the savings.

Do I need a data science team to use open-source LLMs?

You don’t need a PhD, but you do need someone who knows how to deploy models on GPUs, manage Kubernetes, and optimize inference. n8n Blog’s November 2025 survey found that 67% of organizations hiring open-source LLMs added at least one ML engineer. If your team only knows Python and APIs, you’ll struggle. Start small: test Llama 3 on Hugging Face’s free tier before committing to hardware.

What if I need both speed and privacy?

You don’t have to pick one. Many companies use a hybrid model: proprietary APIs for customer-facing chatbots (where performance matters) and open-source models for internal document processing (where privacy matters). For example, a bank might use GPT-4 for customer service and Llama 3 for analyzing loan applications internally. This approach balances cost, compliance, and quality.

Are there any legal risks with using open-source LLMs?

Open-source models reduce legal risk because you control the data. But they’re not risk-free. Some models were trained on copyrighted data. If you use them to generate content that resembles protected work, you could face liability. Always audit your model’s training data if you’re in regulated industries. The EU AI Act requires full transparency, which open-source models can provide-but only if you’re prepared to document everything.

When will open-source models be better than APIs?

They already are-for many use cases. By Q4 2026, Gartner predicts the performance gap will shrink to just 1-2%, while cost savings stay at 80-85%. But APIs will keep improving too-faster response times, better agent capabilities, cheaper pricing tiers. The real winner won’t be one model type-it’ll be the team that uses both strategically.

Bharat Patel

December 14, 2025 AT 08:11It's funny how we treat LLMs like they're gods or villains when really they're just tools. Like choosing between a hammer and a power drill-you don't ask which is better, you ask what you're trying to build. The real question isn't API vs open-source, it's whether your project needs polish or autonomy. And honestly? Most teams don't need either. They need clarity on what they're solving for. Stop chasing benchmarks. Start asking: does this make my users' lives easier? That's the only metric that matters.

Bhagyashri Zokarkar

December 15, 2025 AT 22:12omg i just spent 3 weeks trying to get llama 3 running on my laptop and it kept crashing like my ex’s promises lol i mean like i had the gpu and everything but then the quantization thingy and vllm and docker and i was just like why am i doing this i could’ve just paid 50 bucks a month and had coffee while it worked smh

Rakesh Dorwal

December 16, 2025 AT 15:00Open-source? Sure, if you want to trust some random guy on GitHub who trained his model on leaked EU parliament data. Meanwhile, GPT-4.1? Made in America, audited by top engineers, and not hiding behind open-source loopholes. Why are we letting foreign code run our internal systems? The EU AI Act is just a distraction-they want us to give up control. If you care about sovereignty, stick with APIs. They’re not perfect, but at least they’re accountable. And if you’re using Llama 3 for anything serious, you’re already a data leak waiting to happen.

Vishal Gaur

December 18, 2025 AT 06:00so like i read the whole thing and honestly i think the real problem is nobody wants to admit they’re just scared of learning something new. i mean sure open-source is a pain in the ass but so is paying 20k a month for something you dont own. i tried to deploy it once and spent 4 days fixing cuda errors and then gave up and went back to the api and now i just pretend i made a smart choice. also i think the author forgot to mention how much time you waste reading github issues that are 3 years old and still unanswered. why is everything so complicated now?

Nikhil Gavhane

December 19, 2025 AT 04:37This is one of the clearest breakdowns I’ve seen in a long time. I work with a small nonprofit and we were debating this exact thing-cost vs control. We went with Llama 3 on a modest cloud instance after testing for a month. The setup was rough, but now we handle 80% of our internal queries without worrying about data leaving our servers. It’s not perfect, but it’s ours. And honestly? The peace of mind is worth the initial headache. Keep sharing these practical guides-they help people like me who aren’t engineers but still need to make smart calls.

Rajat Patil

December 20, 2025 AT 12:56I appreciate the balanced perspective. It is important to recognize that both approaches have merit. The decision should be based on the specific context of the organization, its resources, and its responsibilities. For some, the simplicity and reliability of an API are essential. For others, the control and transparency of open-source are non-negotiable. There is no universal solution. Each team must evaluate their needs with care and humility. Thank you for presenting this without bias.

deepak srinivasa

December 22, 2025 AT 08:28What about hybrid approaches? Like using API for customer-facing stuff but open-source for internal QA or training data filtering? I’ve seen teams do this but nobody talks about it. Also, how do you handle model drift in open-source? Do you retrain weekly? Monthly? And who owns the fine-tuned version? Is it still open-source if you tweak it for your use case? Just wondering if anyone’s actually documented this workflow properly.

pk Pk

December 24, 2025 AT 05:41Hey everyone, I’ve been helping startups pick their LLM stack for the last year. If you’re reading this and feeling overwhelmed-stop. You don’t need to solve this today. Start small. Try Hugging Face’s free tier with Llama 3. Run 100 queries. Compare it side-by-side with GPT-4.1. Measure latency, cost per query, and output consistency. Then ask your team: which one feels less stressful to maintain? The answer isn’t in benchmarks. It’s in how you feel at 2 a.m. when the system breaks. Pick the one that lets you sleep. And if you’re still stuck? Hire a contractor for two weeks. It’s cheaper than a year of wasted effort.