Legal Counsel Playbook for Generative AI: Priorities, Checklists, and Training

Sep 7, 2025

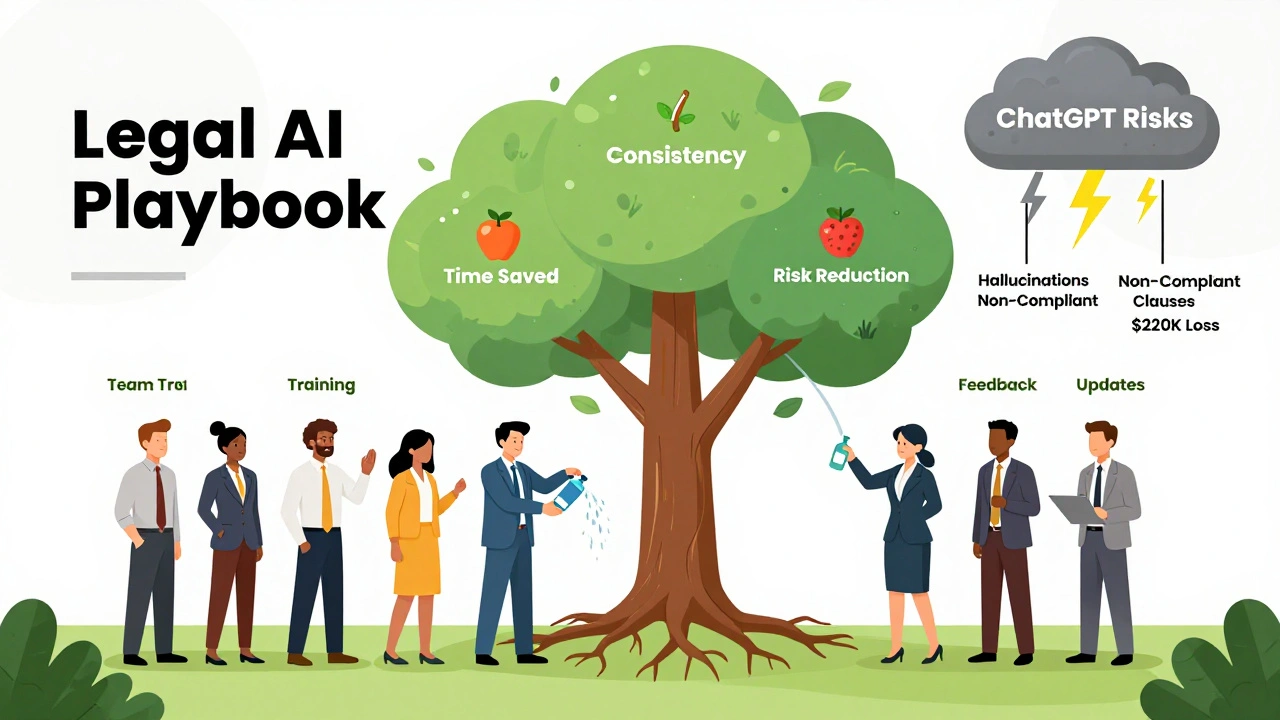

Why Legal Teams Can’t Afford to Ignore AI Playbooks Anymore

Every day, in-house lawyers spend nearly 4.5 hours just reviewing contracts. That’s more than half a workday spent on paperwork that rarely changes. NDAs, vendor agreements, employment terms: these are the same documents, signed over and over, with minor tweaks. Yet most legal teams still handle them manually. Why? Because they haven’t figured out how to automate the routine without losing control.

Generative AI isn’t coming for your job. It’s here to take over the boring parts so you can focus on what actually matters: strategy, risk, and judgment. But only if you build the right playbook.

Without a clear set of rules (what we call a legal counsel AI playbook), AI doesn’t help. It hurts. It hallucinates. It misses red flags. It flags harmless language as risky. And when that happens, you’re not saving time; you’re creating more work.

What Exactly Is a Legal Counsel AI Playbook?

A legal counsel AI playbook is not software. It’s not a tool. It’s a living set of rules that tells an AI how to think like your legal team.

Think of it like a training manual written in code. It includes:

- Which contract clauses are non-negotiable

- What language counts as acceptable risk

- How to handle indemnification, liability caps, and data privacy terms

- Which fallback positions to suggest during negotiations

- How to flag deviations based on your company’s past deals

These rules are fed into a fine-tuned language model trained on your own contracts, past negotiations, and internal legal memos. The result? An AI that doesn’t guess; it follows your standards.

For example: If your company always limits liability to the contract value and never accepts unlimited indemnity, the AI learns that instantly. When it sees a vendor trying to slip in a broader indemnity clause, it highlights it, suggests your standard language, and even cites past agreements where the same clause was rejected.
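To make the indemnity example concrete, here is a minimal sketch of how such a rule might be written down as data. It assumes a simple phrase-matching check rather than a fine-tuned model, and the `ClauseRule` type, the `PLAYBOOK` dictionary, the sample wording, and the forbidden phrases are all hypothetical illustrations, not any vendor’s format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClauseRule:
    """One playbook entry: preferred wording plus phrases that trigger a flag."""
    clause_type: str
    preferred: str
    forbidden_phrases: tuple


# Hypothetical playbook with a single indemnity rule (illustration only).
PLAYBOOK = {
    "indemnity": ClauseRule(
        clause_type="indemnity",
        preferred="Indemnity obligations are limited to the fees paid under this Agreement.",
        forbidden_phrases=("unlimited", "without limitation", "any and all claims"),
    ),
}


def review_clause(clause_type: str, text: str) -> dict:
    """Flag a clause that contains forbidden phrases and suggest the standard wording."""
    rule = PLAYBOOK.get(clause_type)
    if rule is None:
        return {"status": "no_rule", "hits": [], "suggestion": None}
    lowered = text.lower()
    hits = [phrase for phrase in rule.forbidden_phrases if phrase in lowered]
    if hits:
        return {"status": "flag", "hits": hits, "suggestion": rule.preferred}
    return {"status": "ok", "hits": [], "suggestion": None}


result = review_clause(
    "indemnity",
    "Vendor shall indemnify Customer for any and all claims, without limitation.",
)
print(result["status"], result["hits"])
```

A real system would match on meaning rather than exact phrases, but the shape is the same: a rule, a check, and a suggested fallback that a reviewer can accept or reject.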

This isn’t science fiction. According to Dioptra.ai’s 2025 data, teams using AI playbooks cut contract review time by up to 50% in six months. And 87% of users say consistency improved dramatically, whether the reviewer was a new hire or a 20-year veteran.

Three Priorities Before You Build Your Playbook

Too many legal teams jump straight into AI tools without asking: What are we trying to fix? Skip this step, and you’ll end up with a fancy tool that does the wrong thing.

Start with these three priorities:

- Identify your high-volume, low-complexity contracts - These are your low-hanging fruit. NDAs, standard vendor agreements, SaaS licenses, and employment offer letters. These make up 70% of your contract volume but require minimal legal judgment. Automate these first.

- Map your current standards - Grab 50-100 recent contracts. Highlight every clause that was negotiated. What did you insist on? What did you compromise on? What language did you reuse? This isn’t about what’s legally ideal; it’s about what your team actually accepts.

- Define your risk tolerance - Not every deviation is a dealbreaker. Is a 12-month data retention clause acceptable if the vendor is ISO 27001 certified? Does your company allow liability caps below the contract value? Write these down. AI needs clear thresholds, not vague principles.
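As a rough illustration of “clear thresholds, not vague principles,” the data retention question above could be written as an explicit rule. This is a sketch under stated assumptions: the 12-month limit for ISO 27001 certified vendors comes from the example above, while the 6-month default for uncertified vendors and the function name `retention_decision` are hypothetical placeholders your team would replace with its own numbers.

```python
def retention_decision(
    retention_months: int,
    iso27001_certified: bool,
    max_default_months: int = 6,     # hypothetical default limit
    max_certified_months: int = 12,  # limit from the example, certified vendors only
) -> str:
    """Return 'accept' or 'escalate' for a proposed data retention period."""
    if retention_months <= max_default_months:
        return "accept"
    if iso27001_certified and retention_months <= max_certified_months:
        return "accept"
    # Anything outside the written thresholds goes to a human reviewer.
    return "escalate"


print(retention_decision(12, iso27001_certified=True))
print(retention_decision(12, iso27001_certified=False))
```

Writing the threshold down this way forces the team to decide what the numbers are, which is the real work of this step.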

One Fortune 500 tech company spent three months mapping their NDA standards before touching an AI tool. The result? Their AI playbook reduced NDA review time from 90 minutes to 15, with zero errors in the first six months.

Your AI Playbook Implementation Checklist

Building a playbook isn’t a one-time project. It’s a process. Here’s how to do it right:

- Collect your contract corpus - Gather at least 12 months of executed contracts. Include versions with edits, redlines, and final signed copies. Don’t skip the rejected drafts-they show where your team drew the line.

- Tag key clauses - Use a spreadsheet or CLM system to label each clause by type: liability, confidentiality, termination, governing law, etc. This trains the AI to recognize patterns.

- Define acceptable language - For each clause, write out your preferred version and at least one acceptable alternative. Example: “Liability capped at contract value” is preferred. “Liability capped at $500,000” is acceptable if the contract value is under $1M.

- Train the model - Feed your tagged contracts into a legal-grade LLM. Use tools like Gavel, Dioptra, or Pramata that support fine-tuning on proprietary data. Avoid public models like ChatGPT; they don’t know your rules.

- Test with real contracts - Run 20-30 unseen contracts through the system. Compare AI suggestions to what your team would have done. Adjust rules until accuracy hits 90%+.

- Integrate with your tools - Make sure the AI works inside Word, Excel, or your CLM system. No one will use it if it requires switching platforms.

- Train your team - Attorneys need 8-12 hours. Paralegals and ops staff need 20-25. Focus on how to interpret AI flags, override suggestions, and update the playbook.

- Set up review cycles - Every quarter, review what the AI missed. Did it miss a new regulatory requirement? Did a vendor introduce a new clause? Update the playbook. It’s not static. It’s alive.
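Two of the steps above lend themselves to a short sketch: the liability cap example from “Define acceptable language” and the 90%+ target from “Test with real contracts.” The function names and labels below are hypothetical, and “accuracy” is assumed here to mean the share of clauses where the AI’s label matches the attorney’s; your team may define it differently.

```python
def classify_liability_cap(cap_usd: int, contract_value_usd: int) -> str:
    """Apply the example rule: cap at contract value is preferred,
    a $500,000 cap is acceptable when the contract is worth under $1M."""
    if cap_usd == contract_value_usd:
        return "preferred"
    if cap_usd == 500_000 and contract_value_usd < 1_000_000:
        return "acceptable"
    return "deviation"


def agreement_rate(ai_labels: list, attorney_labels: list) -> float:
    """Share of test clauses where the AI label matches the attorney label."""
    if not ai_labels or len(ai_labels) != len(attorney_labels):
        raise ValueError("label lists must be non-empty and the same length")
    matches = sum(ai == human for ai, human in zip(ai_labels, attorney_labels))
    return matches / len(ai_labels)


# Hypothetical test run on 20 unseen clauses.
ai = ["ok"] * 18 + ["flag"] * 2
attorneys = ["ok"] * 20
rate = agreement_rate(ai, attorneys)
print(f"agreement: {rate:.0%}, target met: {rate >= 0.90}")
```

Tracking this number across iterations gives the team a simple stopping rule for the testing step.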

One healthcare legal team spent four months and three iterations to codify their playbook. Their biggest hurdle? Turning 20 years of tribal knowledge into clear rules. But once they did, onboarding new lawyers dropped from 6 months to 2 weeks.

Training Your Team to Work With AI, Not Against It

AI doesn’t replace lawyers. It amplifies them. But only if the team knows how to use it.

Most resistance doesn’t come from fear of tech. It comes from fear of losing control. Senior attorneys worry their expertise will be reduced to a checklist. That’s a valid concern if you treat the playbook as a black box.

Fix this by making the AI transparent. Show your team why the AI flagged something. Did it compare the clause to 47 past NDAs? Did it detect a change in state law? Let them see the evidence.

Also, give them ownership. Let attorneys propose updates to the playbook. If a new clause becomes standard across your industry, they should be able to submit it for review. This turns AI from a top-down tool into a team-wide knowledge system.

Training should cover:

- How to interpret AI suggestions (is it a warning or a requirement?)

- When to override the AI (and how to document why)

- How to report false positives and false negatives

- How to update the playbook with new precedents

According to Gavel.io’s 2025 guide, teams that involve attorneys in playbook updates see 40% fewer errors over time. The AI learns from them. They learn from the AI. It becomes a feedback loop.
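One way to make that feedback loop measurable is to tally the reports attorneys file on AI flags. The sketch below assumes each report is labeled `true_positive`, `false_positive`, or `false_negative`; those labels and the `flag_metrics` helper are hypothetical, not part of any tool named in this article.

```python
from collections import Counter


def flag_metrics(reports: list) -> dict:
    """Summarize attorney feedback as precision and recall of AI flags."""
    counts = Counter(reports)
    tp = counts["true_positive"]
    fp = counts["false_positive"]   # AI flagged harmless language
    fn = counts["false_negative"]   # AI missed a real issue
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"precision": precision, "recall": recall}


# Hypothetical quarter of feedback reports.
reports = ["true_positive"] * 8 + ["false_positive"] * 2 + ["false_negative"] * 2
print(flag_metrics(reports))
```

Low precision suggests the playbook is too strict and is wasting reviewer time; low recall suggests it is missing real risks and needs new rules.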

Regulatory Risks You Can’t Ignore

Using AI in legal work isn’t unregulated. Under the EU AI Act, some legal uses of AI can be classified as “high-risk” when they affect rights or obligations. In the U.S., 28 states have introduced laws affecting AI use in contracts, disclosures, or hiring.

Here’s what you must address:

- Data residency - Did you train your AI on contracts stored in the EU? If so, you may need to comply with GDPR. IAPP found 73% of legal AI tools failed to properly handle data location.

- Transparency - If a contract is generated or modified by AI, do you need to disclose that to the counterparty? Some jurisdictions require it.

- Liability - If the AI misses a clause and you get sued, who’s responsible? Your team. The AI is a tool, not a shield.

- Recordkeeping - Keep logs of AI suggestions, overrides, and playbook updates. This is your audit trail.
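For the recordkeeping point, a minimal sketch of an audit log might look like the following. It assumes a plain JSON Lines file and a hypothetical `AuditEntry` record; the field names are illustrative, and a CLM system may already provide its own logging.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class AuditEntry:
    contract_id: str
    clause_type: str
    ai_suggestion: str
    reviewer: str
    action: str          # "accepted" or "overridden"
    reason: str = ""     # required when the reviewer overrides the AI
    timestamp: str = ""  # filled in automatically when left empty


def to_json_line(entry: AuditEntry) -> str:
    """Validate an entry and serialize it as one JSON line."""
    if entry.action not in ("accepted", "overridden"):
        raise ValueError("action must be 'accepted' or 'overridden'")
    if entry.action == "overridden" and not entry.reason.strip():
        raise ValueError("an override must record a reason")
    record = asdict(entry)
    if not record["timestamp"]:
        record["timestamp"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(record, sort_keys=True)


def append_to_log(path: str, entry: AuditEntry) -> None:
    """Append the entry to an append-only log file."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(to_json_line(entry) + "\n")
```

Rejecting overrides that have no stated reason keeps the human decision, and the thinking behind it, on the record.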

The ACC’s 2024 AI Toolkit includes 13 principles for AI governance. One of them: “Maintain human accountability at every decision point.” That’s your North Star.

What Happens When You Don’t Use a Playbook?

Some teams skip the playbook and just plug in ChatGPT or Copilot. They ask: “Review this NDA.”

What happens?

- The AI suggests a clause that was banned in your company three years ago.

- It misses a key jurisdictional requirement because it was trained on generic data.

- It overwrites your standard language with boilerplate from another industry.

- It doesn’t know your negotiation history-so it agrees to terms your team would never accept.

One legal director at a mid-sized SaaS company told us: “We used ChatGPT on 12 contracts. Three had dangerous clauses we didn’t catch until the vendor signed. We lost $220K in potential damages.”

That’s the cost of skipping the playbook.

Where the Market Is Headed, and What You Should Do Now

By 2027, Gartner predicts 85% of routine legal review tasks will be AI-assisted. The winners won’t be the ones using AI the most. They’ll be the ones using it the smartest.

Top performers are already:

- Connecting their AI playbooks to enterprise risk systems

- Using AI to predict contract disputes based on historical patterns

- Automating compliance checks for new regulations

Start small. Pick one contract type. Build your playbook. Train your team. Measure results. Then expand.

Don’t wait for perfection. Aim for progress.

Frequently Asked Questions

What’s the difference between a legal AI playbook and just using ChatGPT for contracts?

ChatGPT uses generic training data and doesn’t know your company’s policies. A legal AI playbook is fine-tuned on your own contracts, past negotiations, and internal standards. It doesn’t guess; it follows your rules. Using ChatGPT alone risks inconsistent, inaccurate, or non-compliant outputs. A playbook ensures every contract aligns with your organization’s actual practices.

How long does it take to build a legal AI playbook?

Most teams spend 3-6 months building their first playbook. The biggest time sink isn’t the tech; it’s codifying tribal knowledge. Gathering and tagging 100+ contracts, defining acceptable language, and testing iterations takes 80-120 hours from senior legal staff. But once built, the playbook scales instantly across your entire contract volume.

Can AI handle complex, high-stakes contracts like M&A deals?

No, not yet. AI playbooks excel at standardized contracts like NDAs, vendor agreements, and employment letters. For M&A, IPOs, or complex joint ventures, human judgment is still essential. Use AI to draft initial versions, flag risks, and summarize terms, but never rely on it to make strategic decisions. The AI is a research assistant, not a dealmaker.

Do we need a dedicated legal tech team to run this?

Not necessarily. Many teams use existing legal operations staff to manage the playbook. You’ll need someone to oversee training, update rules, and monitor outputs, but you don’t need engineers. Tools like Gavel and Dioptra are designed for legal teams, not IT departments. Focus on legal expertise, not technical skills.

How do we prevent AI from hallucinating legal advice?

Three ways: First, only use models trained on your own data, not public ones. Second, require all AI outputs to be reviewed by a human before use. Third, build in validation rules, like flagging any clause that contradicts your playbook. Deloitte’s 2024 report confirms that 82% of CLOs worry about hallucinations. The fix isn’t better AI; it’s better governance.

Is this only for big companies?

No. While 89% of Fortune 500 companies use AI playbooks, mid-sized firms (500-5,000 employees) are adopting them at a 67% rate. Even small legal teams of fewer than 10 people can start with one contract type, like NDAs. Pre-built templates for common agreements are available from vendors like Gavel.io. You don’t need a huge team to begin.

Next Steps: Start With One Contract Type

Don’t try to automate everything at once. Pick one contract you review every week. NDA? Vendor agreement? Employment offer? Gather 50 examples. Map your standards. Build a simple playbook. Train one attorney. Test it. Measure the time saved. Then expand.

The goal isn’t to replace lawyers. It’s to stop wasting their time on tasks that don’t require judgment. When you do that, you free them to do the work that actually moves the business forward.
