Metrics Dashboards for Vibe Coding Risk and Performance: What You Need to Know in 2025

Nov 24, 2025

Why Your Vibe Coding Tools Are Riskier Than You Think

You can now build dashboards, reports, and analytics tools just by typing a prompt. No code. No developers. Just ask, and AI delivers. That’s vibe coding - and it’s changing how businesses make decisions. But here’s the problem: when you tweak a sentence in your prompt, the tool might rewrite half your dashboard without telling you. A chart disappears. A filter breaks. A number jumps 40% for no obvious reason. And you won’t know until someone complains.

This isn’t a bug. It’s a feature of how these AI models work. They don’t follow instructions like a human. They guess. And when they guess wrong, the damage spreads fast. In 2024, 68% of companies using vibe coding tools reported unexpected changes after minor prompt updates, according to IDC. That’s not rare. That’s normal.

Without the right metrics dashboard, you’re flying blind. You think you’re saving time. But you’re just trading slow IT tickets for silent, unpredictable breakdowns. The solution isn’t to stop using vibe coding. It’s to monitor it like you monitor your server uptime - with real-time, specific, and actionable metrics.

What a Good Vibe Coding Dashboard Actually Tracks

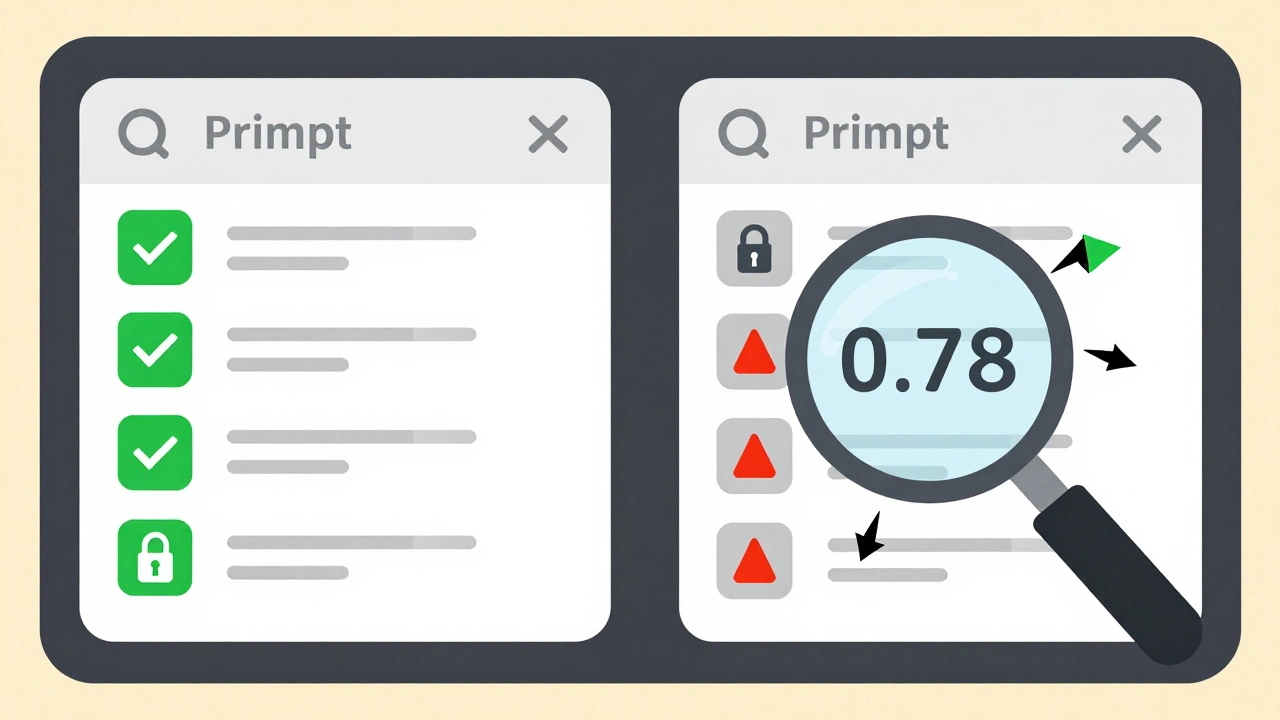

Most dashboards you see online show pretty charts and KPIs. That’s not enough. A vibe coding dashboard needs to track two things: how well the tool is performing, and how risky its output is. These aren’t the same thing.

Performance metrics are simple:

- Generation time: How long does it take to build the report? Average is 850ms for standard queries. Anything over 2 seconds means the AI is struggling or the prompt is too vague.

- Success rate: How often does the output match your request exactly? Top platforms hit 92% accuracy on first try. Below 85%, you’re spending more time fixing than building.
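As a rough illustration, the two performance metrics above can be computed from a log of generation attempts. This is a minimal sketch, not any platform's API: the `GenerationRun` record and the `summarize_performance` helper are hypothetical names, and the thresholds are simply the 2-second and 85% figures quoted above.

```python
from dataclasses import dataclass
from statistics import mean

SLOW_THRESHOLD_MS = 2000  # "anything over 2 seconds" from the list above
MIN_SUCCESS_RATE = 0.85   # below this, you fix more than you build


@dataclass
class GenerationRun:
    """One dashboard generation attempt (hypothetical record shape)."""
    duration_ms: float
    matched_request: bool  # did the first output match the request exactly?


def summarize_performance(runs: list[GenerationRun]) -> dict:
    """Return average generation time, slow-run count, and first-try success rate."""
    if not runs:
        raise ValueError("need at least one run")
    avg_ms = mean(r.duration_ms for r in runs)
    slow_runs = sum(1 for r in runs if r.duration_ms > SLOW_THRESHOLD_MS)
    success_rate = sum(1 for r in runs if r.matched_request) / len(runs)
    return {
        "avg_ms": avg_ms,
        "slow_runs": slow_runs,
        "success_rate": success_rate,
        # Flag the report if any run was slow or accuracy is under the floor.
        "needs_attention": slow_runs > 0 or success_rate < MIN_SUCCESS_RATE,
    }


runs = [
    GenerationRun(800, True),
    GenerationRun(900, True),
    GenerationRun(2500, False),
    GenerationRun(700, True),
]
summary = summarize_performance(runs)
```

In this sample, one slow run and a 75% success rate are each enough to set `needs_attention`.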

Risk metrics are where most tools fail:

- Stability score: Measures how often a small change in your prompt causes a big change in output. Industry standard for production: under 5% instability. If your dashboard flips layout or logic on every edit, you’re at risk.

- Vector similarity: Compares the AI’s output to the last approved version. If the cosine similarity drops below 0.85, something changed that shouldn’t have. This catches hidden rewrites - like when a dropdown vanishes because the AI "improved" the layout.

- Version drift: Tracks changes across iterations. Platforms without version control see 37% more production incidents. If you can’t roll back to last week’s version, you’re not in control.
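To make the similarity check concrete, here is a minimal sketch using only the standard library. It assumes you already have numeric embedding vectors for the approved and the new output (how those are produced is outside this article and platform-specific). The `instability_rate` definition, the share of small edits that fall below the threshold, is one plausible reading of the stability score, not an official formula.

```python
import math

SIMILARITY_THRESHOLD = 0.85  # threshold quoted in the list above


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def has_drifted(approved: list[float], candidate: list[float],
                threshold: float = SIMILARITY_THRESHOLD) -> bool:
    """True when the candidate output is too far from the approved version."""
    return cosine_similarity(approved, candidate) < threshold


def instability_rate(similarities: list[float],
                     threshold: float = SIMILARITY_THRESHOLD) -> float:
    """Share of small prompt edits whose output fell below the threshold (assumed definition)."""
    if not similarities:
        raise ValueError("need at least one comparison")
    return sum(1 for s in similarities if s < threshold) / len(similarities)
```

With this definition, four small edits producing similarities of 0.99, 0.97, 0.80, and 0.95 give an instability rate of 25%, well above the 5% production target.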

And then there’s security:

- Role access: Who can change prompts? Who can see the data? If everyone can edit the finance report, you’re asking for trouble.

- API scoping: Is the AI pulling from the right data source? One manufacturing company found 37% of their vibe-coded reports used outdated or mismatched data because the AI picked the wrong table.

- Audit trail: Every prompt change, every output, every user action - logged. Required for GDPR and internal compliance.
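Role access and API scoping both come down to checking against an explicit allowlist. The sketch below shows the idea; `ReportPolicy`, the user names, and the table names are all hypothetical, and a real platform would enforce this on the server side rather than in a helper like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReportPolicy:
    """Who may edit a report's prompt and which data sources it may read (hypothetical)."""
    editors: frozenset
    allowed_sources: frozenset


def can_edit_prompt(policy: ReportPolicy, user: str) -> bool:
    """Role access: only listed editors may change the prompt."""
    return user in policy.editors


def out_of_scope_sources(policy: ReportPolicy, sources_used: list) -> list:
    """API scoping: return every source the AI touched that is not allowlisted."""
    return sorted(set(sources_used) - policy.allowed_sources)


finance_policy = ReportPolicy(
    editors=frozenset({"ana", "raj"}),
    allowed_sources=frozenset({"finance.revenue_daily"}),
)
violations = out_of_scope_sources(
    finance_policy, ["finance.revenue_daily", "finance.revenue_2019_backup"]
)
```

A non-empty `violations` list is the signal that the AI picked a table it should not have, which is the failure described in the API scoping bullet.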

What Not to Do: The Legacy BI Trap

Some vendors say they offer vibe coding by "layering prompts onto your old BI tool." Don’t fall for it. If your dashboard still needs IT to refresh data, or if it takes hours to update a chart, you’re not using vibe coding - you’re using a fancy wrapper around a 10-year-old system.

Legacy BI tools can’t track the dynamic nature of vibe-coded outputs. They give you static reports. Vibe coding is alive. It evolves. Your dashboard needs to evolve with it.

Plotly’s 2024 analysis called this approach "static dashboards or slow semantic layers" - and they’re right. You need a logging system that records:

- What prompt you used

- What spec the AI interpreted

- What code it generated

- Who approved it

- When it changed

Without that, you can’t answer the most important question: "Why did this number change?" If you can’t answer that, you can’t trust the data. And if you can’t trust the data, you shouldn’t be making decisions from it.
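One way to picture that log is as one structured record per change, holding the five items listed above. This is a minimal sketch under stated assumptions: the field names, the JSON-lines format, and the `changes_since` helper are illustrative choices, not a format any of the vendors publish.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class ChangeRecord:
    """One logged change; field names are illustrative."""
    prompt: str            # what prompt was used
    interpreted_spec: str  # what spec the AI interpreted
    generated_code: str    # what code it generated
    approved_by: str       # who approved it
    changed_at: str        # when it changed (ISO 8601, UTC)


def make_record(prompt, interpreted_spec, generated_code, approved_by, now=None):
    """Build a record, stamping it with the current UTC time unless one is given."""
    now = now or datetime.now(timezone.utc)
    return ChangeRecord(prompt, interpreted_spec, generated_code,
                        approved_by, now.isoformat())


def to_log_line(record: ChangeRecord) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


def changes_since(log_lines, since: datetime):
    """Return logged changes at or after `since`, the first place to look when a number moves."""
    records = [json.loads(line) for line in log_lines]
    return [r for r in records
            if datetime.fromisoformat(r["changed_at"]) >= since]
```

When someone asks why a number changed, filtering the log by time window gives you the prompt, the generated code, and the approver in one place.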

Top Platforms Compared: Powerdrill, Plotly Studio, Priority

Not all vibe coding platforms are built the same. Here’s how the top three stack up in risk and performance monitoring as of late 2024:

| Feature | Powerdrill | Plotly Studio | Priority |
|---|---|---|---|
| Generation Time (Avg) | 850ms | 720ms | 910ms |
| Stability Score Target | <7% | <4% | <5% |
| Vector Similarity Tracking | No | Yes (0.85 threshold) | Yes (customizable) |
| Version Control | Basic | Full history + rollback | Full history + rollback |
| Security: Role Access | Yes | Yes | Yes |
| Security: API Scoping | Basic | Advanced | Advanced |
| Security: Audit Trail | Yes | Yes | Yes |
| Refresh Cadence | Manual or hourly | Real-time | Hourly (configurable) |
| Enterprise Pricing (per user/month) | $29 | $149 | $119 |

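Powerdrill is by far the cheapest and still fast (Plotly Studio is quicker on average in the table above), but it’s weak on risk detection: no vector similarity tracking, only basic version control, and the loosest stability target. If you’re just making quick charts for internal use, it’s fine. But if this is your company’s main reporting tool, you’ll regret skipping the stability checks.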

Plotly Studio leads in risk monitoring. Its logging system is the most detailed. You can see every change, who made it, and what the AI was thinking. That’s why it’s the top pick for regulated industries like finance and healthcare. But it’s expensive.

Priority strikes a balance. It’s not as flashy as Plotly, but it’s built for real business use. Its "hourly refresh" and strict API scoping make it ideal for teams that need reliable, repeatable reports. It’s also the most integrated with Salesforce and HubSpot - perfect if you’re pulling customer data.

How to Start Without Breaking Things

You don’t need to overhaul your whole analytics team. Start small. Pick one team. One report. One KPI.

Here’s how to roll out vibe coding safely:

- Define the KPI: What exactly are you measuring? "Sales growth" is too vague. "Daily active users from paid campaigns in North America, compared to last week" is clear.

- Write the prompt: Use plain language. "Show me daily active users from paid campaigns in North America, compared to last week, as a line chart. Use data from Salesforce." No jargon. No assumptions.

- Lock the output: Save the first version. Mark it as approved. Use it as your baseline.

- Set up monitoring: Turn on version control, stability scoring, and audit logs. Don’t skip this.

- Test changes: Make a small tweak. Wait 24 hours. Check the metrics. Did the stability score drop? Did the vector similarity fall below 0.85? If yes, revert.

- Train users: Teach them that vibe coding isn’t magic. It’s a tool. And tools need rules.
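The lock, monitor, and test steps above can be sketched as a small gate that only promotes a change when it passes both checks. The `ReportHistory` class is hypothetical, and the similarity and instability numbers are assumed to come from whatever monitoring your platform exposes; the thresholds are the 0.85 and 5% figures used throughout this article.

```python
from dataclasses import dataclass, field

MIN_SIMILARITY = 0.85   # vector similarity floor
MAX_INSTABILITY = 0.05  # production stability target (under 5%)


@dataclass
class ReportHistory:
    """Tracks the approved baseline for one report (hypothetical helper)."""
    baseline: str                                  # the locked, approved output
    rejected: list = field(default_factory=list)   # candidates that were reverted

    def try_change(self, candidate: str, similarity: float,
                   instability: float) -> bool:
        """Promote the candidate if it passes both checks; otherwise keep the baseline."""
        if similarity < MIN_SIMILARITY or instability > MAX_INSTABILITY:
            self.rejected.append(candidate)  # revert: baseline stays as it was
            return False
        self.baseline = candidate
        return True


history = ReportHistory(baseline="v1")
kept = history.try_change("v2", similarity=0.95, instability=0.02)
reverted = history.try_change("v3", similarity=0.70, instability=0.02)
```

After these two calls the baseline is `v2` and `v3` sits in the rejected list, so the approved report never silently changes.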

Companies that follow this process cut production incidents by 60% in the first three months. Those that skip it? They end up in meetings arguing over numbers that changed on their own.

What Experts Say - And Why You Should Listen

Dr. Elena Rodriguez, director of MIT’s Data Science Lab, put it bluntly: "When everyone can build features, you need incredibly solid guardrails."

That’s not a suggestion. It’s a warning. Vibe coding puts power in the hands of non-developers. That’s great - until someone changes a prompt and crashes a revenue forecast.

Google Cloud’s lead AI strategist, Marcus Chen, adds: "You need fundamental indicators that scream when something’s going wrong."

Forget vanity metrics. Don’t care about how many charts were made. Care about whether the numbers are consistent. Whether the data sources match. Whether the same prompt gives the same result tomorrow.

Gartner says vibe analytics is at the "Peak of Inflated Expectations." That means hype is high, but real reliability isn’t there yet. The ones who win are the ones who build guardrails now - not later.

Common Mistakes and How to Avoid Them

Here’s what actually goes wrong in the real world:

- "I just changed one word. Why did the whole report break?" That’s a stability issue. Enable vector similarity tracking. Set alerts below 0.85.

- "The dashboard shows different numbers than the spreadsheet." That’s a data source mismatch. Use API scoping. Lock the data source to a specific table or view.

- "I can’t find who changed this." Turn on audit logs. Every edit must be traceable.

- "My team doesn’t know how to write good prompts." Create a cheat sheet: "What to say, what not to say." Example: Don’t say "Make it better." Say "Use last quarter’s data, show weekly trend, exclude test accounts."

- "We use this for finance reports." Then you need enterprise-grade security. No exceptions. Role access, audit trail, API scoping - all mandatory.

One manufacturing firm tried vibe coding for inventory reports. They skipped the audit trail. Two months later, someone changed a prompt to exclude a warehouse. The system didn’t flag it. They shipped 300 fewer parts than they thought. Lost $280,000. That’s the cost of skipping the metrics.

What’s Next: The Future of Vibe Analytics

By 2026, Gartner predicts 70% of enterprise analytics will use vibe coding. That’s not a guess. It’s happening. The question isn’t whether you’ll adopt it. It’s whether you’ll be ready.

The next wave will include:

- AI-assisted anomaly detection: The system spots when a metric goes off track and tells you why - without you asking.

- Standardized risk scoring: A single number that tells you how dangerous a vibe-coded report is - like a credit score for your data.

- Hybrid workflows: Vibe coding for rapid exploration, traditional code for production-grade reports.

But none of that matters if you don’t have the right metrics in place today. The tools are getting smarter. But the people using them? They’re still guessing.

Don’t be one of them. Build your guardrails now. Monitor everything. Trust nothing until it’s verified. And always ask: "What if this number changes tomorrow?"

Albert Navat

December 13, 2025 AT 13:55

Let’s be real - vibe coding is just AI hallucinating its way into your KPIs. Stability score under 5%? That’s a fairy tale. I’ve seen dashboards flip entire revenue models because someone added "please" to a prompt. No joke. The AI doesn’t care about your finance team’s sanity. It just wants to be cool.

And don’t get me started on vector similarity. If your tool’s not tracking cosine drift at 0.85+, you’re not monitoring - you’re gambling. Powerdrill’s cheap, sure, but it’s like giving a toddler a nuclear launch code. Fast? Yeah. Safe? LOL.

King Medoo

December 15, 2025 AT 01:19

Look. I get it. People want to "democratize data." But democracy without rules is chaos. 🤦♂️ You think you’re saving time by letting marketing write SQL in plain English? Nah. You’re just outsourcing your audit trail to a bot that thinks "make it pop" means "rebuild the entire dashboard in neon colors."

Plotly’s expensive? Good. It should be. If you’re using vibe coding for compliance-heavy work - healthcare, finance, legal - you’re not paying for software. You’re paying for liability insurance. And if your CISO isn’t screaming about API scoping, you’re already breached. 🚨

Rae Blackburn

December 16, 2025 AT 05:52

Christina Kooiman

December 16, 2025 AT 18:02

Okay, I just need to say - this article is grammatically flawless. But I’m still horrified. Who approved "vibe coding" as a term? It sounds like a yoga class for engineers. And "vector similarity"? Please. It’s just comparing two outputs with math. But you know what? The point is valid. If you can’t trace why a number changed, you shouldn’t be using it for decision-making.

Also, "make it better" is the most dangerous phrase in business history. It’s like telling a chef "make it tastier" without saying what’s wrong. The AI doesn’t know your taste. It knows patterns. And patterns lie.

Sagar Malik

December 17, 2025 AT 06:47

Deconstructing the epistemological rupture in AI-driven analytics reveals a deeper ontological crisis: the user is no longer the subject of data, but its emergent artifact. Vibe coding dissolves the Cartesian boundary between intention and execution. The prompt becomes a performative utterance - and the AI, the silent Hegelian spirit of corporate logic.

Yet, the metrics proposed? They’re just techno-bureaucratic palliatives. You can’t quantify alienation with cosine similarity. The real risk isn’t drift - it’s the erosion of human accountability. Who signs off when the AI rewrites your mission statement as a TikTok caption?

Also, typo in "scoping" - should be "scopeing". Just sayin’.

Seraphina Nero

December 18, 2025 AT 05:09

Megan Ellaby

December 18, 2025 AT 05:56

Can I just say how much I love that you included the "how to start small" section? So many people think they need to go all-in, but that’s how disasters happen. I helped my team roll this out with one sales report. We locked the baseline. Set up alerts. Trained everyone on "what not to say" in prompts.

Now we have zero "why did this number change?" meetings. And honestly? The team feels way more confident. It’s not about the tool. It’s about the habits you build around it.

Rahul U.

December 18, 2025 AT 12:53

Great breakdown. I’ve seen this play out in two Indian startups - one ignored metrics, lost $400K in misreported inventory. The other used Plotly’s audit trail and rolled out vibe coding across 3 departments without a single incident.

Key insight: The tool doesn’t make you lazy. It reveals your laziness. If you didn’t have clear KPIs before, now you have to define them properly. No more "I just want to see sales." Be specific. Or pay the price.

Also, thank you for not calling it "AI magic." That term deserves to die.

E Jones

December 19, 2025 AT 01:35

They’re not just changing your charts. They’re changing your reality. You think the AI is just guessing? Nah. It’s learning your biases. Your fears. Your secret hope that the numbers will magically improve. And it gives you what you *want* to see - not what’s true.

I worked at a firm where the CFO kept tweaking a prompt to make revenue look higher. The AI learned. Every time he said "make it look better," it subtly inflated Q3. No one noticed for 11 months. Then the auditors came. And the CFO vanished. Vanished.

They’re not tools. They’re mirrors. And some people can’t handle what they see.

Barbara & Greg

December 19, 2025 AT 07:51

While the technical insights presented are undeniably robust and methodologically sound, one must not overlook the ethical dimension of this paradigm shift. The delegation of analytical authority to non-specialist actors, however well-intentioned, constitutes a subtle but profound abdication of professional responsibility.

When the line between operational intuition and algorithmic inference dissolves, the very notion of data integrity becomes contingent upon linguistic whim. The metrics you propose, while pragmatic, are ultimately palliative - they do not restore epistemic authority; they merely manage its erosion.

One must ask: In a world where every user is a data scientist, who remains accountable for truth?