Parameter-Efficient Fine-Tuning of Large Language Models with LoRA and Adapters

Jan 20, 2026

What if you could customize a 70-billion-parameter language model like Llama-2 on a single consumer GPU - without burning through $10,000 in cloud credits? That’s not science fiction. It’s happening right now, thanks to parameter-efficient fine-tuning (PEFT) techniques like LoRA and adapter modules. These methods let you adapt massive models to your specific needs while training fewer than 1% as many parameters as the original model contains. For most teams, full fine-tuning is out of reach. LoRA and adapters make it not just possible, but practical.

Why Full Fine-Tuning Doesn’t Work for Most People

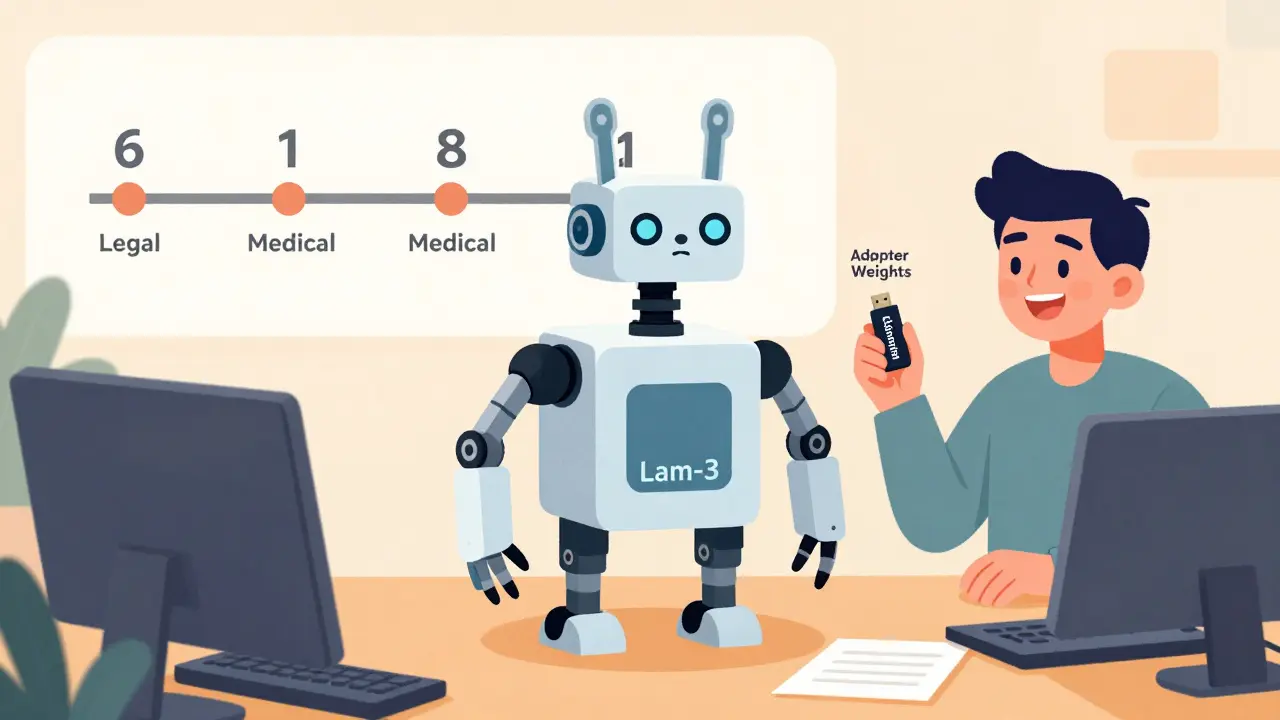

Full fine-tuning means updating every single weight in a large language model. For a 13B-parameter model, that’s over 13 billion numbers to retrain. Each update requires storing gradients, optimizer states, and activations - all in GPU memory. With Adam in mixed precision, weights, gradients, and optimizer states take roughly 16 bytes per parameter, so a 13B model needs on the order of 200GB before activations are even counted. That is far more than a single 80GB GPU holds, and most people don’t have even that. Cloud instances with several 80GB GPUs can cost $50 or more per hour. For startups, researchers, or even large companies running dozens of custom models, that’s unsustainable.

The real problem isn’t just cost. It’s scale. If you need 10 different versions of the same model - one for legal docs, one for customer support, one for medical summaries - you’d need to store and run 10 full copies. That’s 130 billion parameters. The memory, compute, and energy bills add up fast.

Enter parameter-efficient fine-tuning: the idea that you don’t need to change the whole model to make it work better for your task.

How LoRA Works (And Why It’s So Efficient)

Low-Rank Adaptation, or LoRA, was introduced by Microsoft researchers in 2021. The core insight? You don’t need to update all the weights. You only need to update them in a low-dimensional space.

Here’s how it works: LoRA is usually applied to the attention projection matrices of a transformer - most commonly the query (Q) and value (V) matrices. It doesn’t touch those original weights. Instead, it adds two small, trainable matrices, A and B, alongside them. The update looks like this: ΔW = A × B. Matrix A is sized d×r, and B is r×k. The rank r is tiny - usually between 8 and 256 - while d and k are in the thousands or tens of thousands.

For example, if you’re fine-tuning a 7B model with r=8 on the Q and V matrices, you’re training well under 1% of the original parameters. The adapter itself is only about 8MB, compared with roughly 14GB for a full 16-bit copy of the weights. The frozen base model still has to sit in memory, but it is shared, so you can fit dozens of adapters alongside it on a single GPU. And performance? Reported results show LoRA reaching 97-99% of full fine-tuning accuracy on benchmarks like GLUE. You lose very little, and each adapter is over 1,000 times smaller than the full model.

The key hyperparameters are rank (r) and alpha (α). Rank controls the size of the adaptation. Use r=8 for simple tasks like sentiment analysis. Use r=128-256 for complex ones like code generation or clinical note writing. Alpha scales the update by α/r; a ratio of 1.0 or 2.0 is a good starting point in most cases. Hugging Face’s PEFT library makes this easy - you set r=16 and lora_alpha=32 in a LoraConfig and let it handle the math.

Adapters: A Different Approach

Adapters work differently. Instead of low-rank matrices, they insert small neural networks - usually two linear layers with a bottleneck - into each transformer block. A typical adapter has 64-128 hidden units. These layers are trained while the main model stays frozen.

The upside? Adapters can train faster on some tasks. If your dataset is small and the changes needed are minimal, adapters converge 15-20% quicker than LoRA. They’re also modular. You can stack them - one for translation, one for summarization - and switch between them at inference time.

But there’s a catch: speed. Because adapters are inserted sequentially, they add 15-20% latency during inference. LoRA adds less than 1%. That’s because LoRA’s update can be merged into the original weight matrices, while adapter layers stay in the network as extra computation on every forward pass. For real-time apps like chatbots or voice assistants, that delay matters.

QLoRA: Taking LoRA to the Next Level

What if you want to fine-tune a 65B model like LLaMA-65B? Even with LoRA, that’s still too big for most GPUs. Enter QLoRA. QLoRA combines 4-bit quantization with LoRA. It reduces the base model’s precision from 16-bit to 4-bit, shrinking its memory footprint by 75%. Then it applies LoRA on top. The result? The QLoRA authors fine-tuned a 65B model on a single 48GB GPU, and smaller models fit on 24GB cards like the RTX 4090. That’s something no one thought possible before 2023.

The trade-off? Tiny accuracy loss - about 0.5-1% compared to full fine-tuning. But for most use cases, that’s worth it. QLoRA is now the default choice for models over 30B parameters. Enterprise teams using Llama-3 or Mixtral rely on it. And 4-bit loading is available in Hugging Face’s transformers library through its bitsandbytes integration: set load_in_4bit=True in a BitsAndBytesConfig.
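As a rough illustration of the setup described above, the sketch below first estimates weight memory at 16-bit versus 4-bit, then shows one way to load a 4-bit base model and attach LoRA using transformers, bitsandbytes, and PEFT. The target module names, the dropout value, and the default rank and alpha are placeholder assumptions rather than settings from any deployment mentioned here, and the loader function needs a CUDA GPU with bitsandbytes installed.

```python
def weight_memory_gb(n_params: float, bits: int) -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits / 8 / 1e9


# 65B parameters: 16-bit vs 4-bit weights (activations and LoRA state are extra).
FP16_GB = weight_memory_gb(65e9, 16)
NF4_GB = weight_memory_gb(65e9, 4)


def load_qlora_model(model_name: str, r: int = 16, lora_alpha: int = 32):
    """Load a causal LM in 4-bit and wrap it with trainable LoRA matrices.

    Heavy imports live inside the function so the arithmetic above can run
    on a machine without the GPU stack installed.
    """
    import torch
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig
    from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training

    bnb_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_use_double_quant=True,
        bnb_4bit_compute_dtype=torch.bfloat16,
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_name, quantization_config=bnb_config, device_map="auto"
    )
    # Prepares the quantized model for training (e.g. casts norm layers).
    model = prepare_model_for_kbit_training(model)

    lora_config = LoraConfig(
        r=r,
        lora_alpha=lora_alpha,
        target_modules=["q_proj", "v_proj"],  # assumed Llama-style layer names
        lora_dropout=0.05,                    # illustrative value
        task_type="CAUSAL_LM",
    )
    return get_peft_model(model, lora_config)
```

Calling `load_qlora_model(...)` with a Hub model ID would return a PEFT-wrapped model in which only the LoRA matrices receive gradients; the 4-bit base weights stay frozen. The two constants make the 75% reduction concrete for weights alone.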

Real-World Performance: Numbers That Matter

Let’s look at actual results from real deployments:

- A healthcare startup fine-tuned Llama-13B on medical QA using LoRA (r=64). Training cost dropped from $2,800 to $140 per run. Accuracy stayed at 96.2% of full fine-tuning.

- American Express used LoRAX to manage 237 task-specific adapters on a single Llama-2-70B model. Deployment time fell from 14 days to 8 hours. They saved $1.2 million annually in GPU costs.

- A financial services firm tried adapters for fraud detection. Accuracy was 78% of full fine-tuning - not enough. They switched to LoRA (r=128) and hit 95%.

- QLoRA enabled a research lab to fine-tune a 33B model on a single A100. They trained 12 variants in one week. With full fine-tuning, that would’ve taken 6 months.

LoRA vs. Adapters: Which Should You Use?

| Feature | LoRA | Adapter Modules |
|---|---|---|
| Memory usage (per adapter) | 5-15 MB | 10-30 MB |
| Inference latency increase | <1% | 15-20% |
| Training speed | Standard | Faster for small tasks |
| Accuracy vs. full fine-tuning | 97-99% | 95-98% |
| Best for | High-accuracy, low-latency apps | Multi-task, modular systems |
| Model size limit | Up to 70B+ with QLoRA | Best under 30B |

Tools and Libraries Making It Easy

You don’t need to build this from scratch. Hugging Face’s PEFT library is the gold standard. It supports LoRA and several related methods out of the box, and it works with 4-bit quantized base models for QLoRA. With just a few lines of code, you can wrap a Hugging Face model and start fine-tuning.

- LoRAX (by Predibase): Manages hundreds of adapters on one model. Used by American Express and JPMorgan.

- S-LoRA: Optimizes memory for serving many LoRA adapters at once.

- FlexLLM: Runs inference and fine-tuning in the same batch. Boosts GPU utilization from 58% to 89%.

- QLoRA: Supported through transformers with bitsandbytes. Load the base model with load_in_4bit=True, then attach a LoRA config through PEFT.

A typical workflow:

- Load a base model (e.g., meta-llama/Llama-2-7b-chat-hf)

- Wrap it with get_peft_model(model, lora_config)

- Train with Hugging Face Trainer - same code as full fine-tuning

- Save the adapter weights (not the whole model)

- Load them later with load_adapter()
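The steps above can be sketched roughly as follows. This is a minimal outline, assuming the transformers and peft packages are installed and that you supply your own tokenized `train_dataset` with labels. The output paths, target module names, dropout value, and training arguments are illustrative assumptions, not recommendations.

```python
BASE_MODEL = "meta-llama/Llama-2-7b-chat-hf"  # base model named in the steps above
ADAPTER_DIR = "adapters/my-task"              # hypothetical output path


def lora_settings() -> dict:
    """LoRA hyperparameters kept in one place so they can be logged or versioned."""
    return {
        "r": 16,
        "lora_alpha": 32,
        "lora_dropout": 0.05,                    # illustrative value
        "target_modules": ["q_proj", "v_proj"],  # assumed Llama-style names
        "task_type": "CAUSAL_LM",
    }


def train_adapter(train_dataset):
    """Load the base model, wrap it with LoRA, train, and save only the adapter."""
    from transformers import AutoModelForCausalLM, Trainer, TrainingArguments
    from peft import LoraConfig, get_peft_model

    model = AutoModelForCausalLM.from_pretrained(BASE_MODEL)
    model = get_peft_model(model, LoraConfig(**lora_settings()))
    model.print_trainable_parameters()  # reports trainable vs. total parameters

    args = TrainingArguments(output_dir="runs/my-task", num_train_epochs=1)
    Trainer(model=model, args=args, train_dataset=train_dataset).train()

    model.save_pretrained(ADAPTER_DIR)  # writes adapter weights and config only
    return model


def load_trained_adapter():
    """Reattach a saved adapter to a fresh copy of the base model."""
    from transformers import AutoModelForCausalLM
    from peft import PeftModel

    base = AutoModelForCausalLM.from_pretrained(BASE_MODEL)
    return PeftModel.from_pretrained(base, ADAPTER_DIR)
```

Once a model is wrapped this way, further adapters can be attached with `load_adapter(path, adapter_name=...)` and selected with `set_adapter(...)`, so one copy of the base weights can serve several tasks.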

Common Pitfalls and How to Avoid Them

Even with these tools, people run into issues:

- Merging adapters causes accuracy loss: When you merge LoRA weights into the base model, numerical precision errors can creep in, especially when the base weights are stored in 16-bit or quantized. Always test accuracy after merging. Use merge_and_unload() only if you’re deploying a single version.

- Adapter conflicts: Loading multiple adapters without a scheduler can drop performance by 12%. Make sure each request selects its adapter explicitly (in LoRAX, through the adapter_id and adapter_source request parameters) instead of relying on whichever adapter was loaded last.

- Choosing the wrong rank: 83% of users guess. Start with r=16. If accuracy is low, try r=32, then 64. Don’t go above 256 unless you have a huge dataset.

- Not quantizing big models: Trying to fine-tune a 30B+ model with standard LoRA? You’ll crash. Use QLoRA.
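To make the merging pitfall concrete, here is a small pure-Python sketch of what a merge does numerically. It uses the article's convention ΔW = A × B with scaling α/r, and compares the unmerged path (base output plus adapter output) against a single multiplication with the merged weight. The matrices are tiny made-up values and the helper names are hypothetical; a real check would compare model outputs or validation metrics before and after merge_and_unload().

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def add(X, Y, scale=1.0):
    """Return X + scale * Y, elementwise."""
    return [[x + scale * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def max_abs_diff(X, Y):
    """Largest elementwise absolute difference between two matrices."""
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))


# Frozen base weight W (d=3, k=2) and a rank-1 adapter: A is d x r, B is r x k.
W = [[0.5, -1.0], [2.0, 0.25], [-0.75, 1.5]]
A = [[0.1], [-0.2], [0.3]]
B = [[0.4, -0.6]]
r, alpha = 1, 2
scale = alpha / r

x = [[1.0, 2.0, 3.0]]  # one input row vector of length d

# Unmerged: base path and adapter path are computed separately, then summed.
unmerged = add(matmul(x, W), matmul(matmul(x, A), B), scale)

# Merged: fold the scaled update into the weight once, then do a single matmul.
W_merged = add(W, matmul(A, B), scale)
merged = matmul(x, W_merged)

drift = max_abs_diff(unmerged, merged)
print(f"max difference after merge: {drift:.2e}")
```

In exact arithmetic the two paths agree, and in this 64-bit toy the gap is at rounding level. With 16-bit or quantized base weights the gap can be larger, which is why re-running validation after a merge is worthwhile.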

What’s Next in 2026?

The field is moving fast. Microsoft released LoRA+ in January 2026 - it reduces adapter size by 35% without losing accuracy. Google’s Elastic Low-Rank Adapters cut parameters by 20%. NVIDIA’s Blackwell GPUs now have hardware optimized for LoRA operations - 2.3x faster inference. Hugging Face plans to standardize adapter formats by Q3 2026. That means you’ll be able to swap adapters between models without retraining. The EU AI Act now requires you to document every adapter change for high-risk applications - so version control is becoming mandatory.

The big picture? By 2027, Gartner predicts 85% of enterprise LLM deployments will use PEFT. The cost of fine-tuning will drop another 70%. We’re moving from a world where only big tech could customize LLMs - to one where every startup, researcher, and developer can.

Frequently Asked Questions

Can I use LoRA with any large language model?

Yes - as long as the model uses transformer attention layers (which most do). LoRA works with Llama, Mistral, GPT-2, BERT, and others. Hugging Face’s PEFT library ships default target layers for many common architectures; for others, you list the layers to adapt yourself through target_modules. Then wrap your model with get_peft_model() and it handles the rest.

Do I need to retrain the whole model every time I add a new task?

No. That’s the whole point. You train a small adapter for each task - around 10MB each. You save those adapter weights separately. To switch tasks, you just load the right adapter. No retraining needed. You can even stack them or switch between them at runtime.

Is LoRA better than full fine-tuning?

For most use cases, yes. LoRA matches full fine-tuning accuracy within 1-3%. But it uses 500x less memory and costs 10-100x less to train. The only time full fine-tuning wins is if you’re doing continued pretraining on massive datasets - like adding 100GB of new text. For task-specific tuning, LoRA is superior.

Can I run LoRA on my laptop?

Often, yes - if it has a capable GPU. With 4-bit quantization (QLoRA), a 7B model with r=8 can fit in under 10GB of VRAM; in 16-bit, the weights alone take about 14GB. Even a 13B model with QLoRA runs on a 24GB GPU like the RTX 4090. Many users fine-tune Llama-2-7B on a 3090 with no issues. You don’t need a data center.
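For a sense of scale, the adapter size for this kind of setup can be estimated by hand. The sketch below counts LoRA parameters when only the query and value projections are adapted, assuming a Llama-2-7B-like shape (32 layers, hidden size 4096) and 16-bit storage. Those dimensions, the rounded base parameter count, and the function name are assumptions made for the example.

```python
def lora_param_count(n_layers: int, d: int, k: int, r: int, matrices_per_layer: int) -> int:
    """Trainable parameters when each adapted d x k matrix gets A (d x r) and B (r x k)."""
    return n_layers * matrices_per_layer * (d * r + r * k)


N_LAYERS, HIDDEN = 32, 4096      # assumed Llama-2-7B-like dimensions
BASE_PARAMS = 7_000_000_000      # nominal parameter count, rounded

# Adapt the query and value projections in every layer with rank 8.
params = lora_param_count(N_LAYERS, HIDDEN, HIDDEN, r=8, matrices_per_layer=2)
adapter_mb = params * 2 / 1e6    # 2 bytes per parameter in 16-bit
fraction = params / BASE_PARAMS

print(f"trainable LoRA parameters: {params:,}")
print(f"adapter size at 16-bit:    {adapter_mb:.1f} MB")
print(f"share of base parameters:  {fraction:.3%}")
```

Under these assumptions the adapter comes to roughly 8 MB, which is why many adapters can share one copy of the base weights. The count grows linearly with r and with the number of matrices adapted, so r=64 on the same two matrices would be eight times larger.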

What’s the difference between LoRA and LoRA+?

LoRA+ is Microsoft’s 2026 upgrade. It uses dynamic rank adjustment - meaning it automatically reduces the size of the low-rank matrices during training if they’re not needed. This cuts adapter size by 35% without losing accuracy. It’s still LoRA, just smarter and more efficient.

Are there any downsides to using LoRA?

The main one is adapter fragmentation. If every team uses different ranks, alphas, or versions, it becomes hard to share or reproduce models. Standardization efforts are underway, but right now, you need to document your settings carefully. Also, LoRA doesn’t work well for continued pretraining - only task fine-tuning.

Next Steps for Practitioners

If you’re new to this:

- Install Hugging Face PEFT: pip install peft

- Load a base model from Hugging Face Hub

- Use LoraConfig to set r=16, lora_alpha=32

- Wrap your model with get_peft_model()

- Train for 1-3 epochs on your dataset

- Save only the adapter weights - not the full model

- Test accuracy on a validation set

- Use QLoRA for models over 30B

- Use LoRAX or FlexLLM to serve multiple adapters

- Track adapter versions with Git LFS or DVC

- Set up automated testing for accuracy drift

Lissa Veldhuis

January 21, 2026 AT 07:02

So you're telling me I can train a 70B model on my gaming rig with a 4090 and not go broke? I've been paying $200 a month for cloud credits just to test a 7B model and now you're saying I could've been doing this on my couch with a pizza and some energy drink? The future is here and it's wearing sweatpants and drinking cold brew

Also LoRA+? Sounds like Microsoft just renamed their coffee machine and called it AI innovation. Still... I'll take it.

Michael Jones

January 22, 2026 AT 07:30

Think about what this really means - we're not just optimizing parameters anymore, we're optimizing possibility. For the first time in history, the barrier to building a custom AI isn't hardware, it's imagination. A high schooler in Nebraska can now fine-tune a model to write Shakespearean tweets. A nurse in rural Kansas can train a bot to explain diabetes in plain language. This isn't a technical breakthrough - it's a democratization of intelligence. The real revolution isn't in the matrices, it's in the minds that now have access to them.

We used to ask how much it costs to train a model. Now we ask - what will you build with it?

allison berroteran

January 22, 2026 AT 20:07

I've been experimenting with QLoRA on a 13B model using a 24GB RTX 4090 and honestly it's been a game changer - I was skeptical at first because I thought quantization would ruin the nuance, but the model still handles subtle context shifts in medical documentation way better than I expected. I used r=64 and alpha=32, trained for 2.5 epochs on a 12k sample of discharge summaries, and the accuracy was within 1.2% of full fine-tuning. The real win? I didn't have to wait days for training cycles. I could iterate overnight. I'm now training five different adapters for different departments - one for ER triage, one for mental health intake, one for billing codes - and switching between them is like flipping channels. It's not magic, but it feels like it.

I just wish more people understood that the real bottleneck isn't compute, it's documentation. Everyone's dumping their LoRA configs into random folders and calling it 'production' - no version control, no testing pipeline, no metadata. You're not building AI, you're building a house of cards that'll collapse the second someone else tries to use it.

Gabby Love

January 24, 2026 AT 06:45

Small note: When you use merge_and_unload(), always test on a validation set first. I lost 4.7% accuracy on a legal contract parser because I assumed the merge was lossless. Turns out, floating-point rounding in 16-bit to 8-bit conversion doesn't play nice with legal jargon. Also - if you're using LoRAX, make sure your scheduler is set to 'sequential_load' not 'parallel' - otherwise you get adapter bleed. Been there, trained that.

Jen Kay

January 25, 2026 AT 21:39

It's funny how we call this 'parameter-efficient' when what we're really doing is outsourcing intelligence to tiny, disposable modules. We're treating models like LEGOs - snap on a legal adapter, snap on a customer service adapter, snap on a propaganda adapter. And we're proud of it?

Maybe we should ask not how cheaply we can fine-tune, but why we're fine-tuning so many versions of the same thing in the first place. Are we building tools - or just automating bias? I'm not saying stop using LoRA. I'm saying - who's auditing these adapters? And who's responsible when the medical bot misdiagnoses because someone used r=8 on a 500-sample dataset?

Michael Thomas

January 26, 2026 AT 04:01

USA built this. China's still trying to reverse engineer it. You think they got QLoRA? Nah. They're still renting A100s. This is American tech. Don't let anyone tell you otherwise. Also - stop using adapters. LoRA is the only real way. Everything else is garbage. End of discussion.

Eva Monhaut

January 27, 2026 AT 18:32

I've been using this stack to train a poetry generation model for a nonprofit that helps incarcerated writers. We're using QLoRA on Llama-3-8B with r=32 and alpha=16 - training on 3,000 lines of handwritten verse from inmates. The model doesn't just mimic style - it captures rhythm, silence, longing. One woman wrote back saying her poem felt like 'the voice I forgot I had.'

This isn't about cost savings or GPU specs. It's about giving people back their language. And yeah, it runs on a 3090 in my basement. No cloud. No corporate sponsor. Just a laptop, a Wi-Fi connection, and a whole lot of heart.

Thank you for making this possible - not just as engineers, but as humans who believe in access.

mark nine

January 28, 2026 AT 14:53

Just tried LoRA on a 7B Mistral model for my side project - 12 hours of training on a 3090, 8MB adapter file, 98% accuracy vs full fine-tune. I saved $400 and didn't even need to turn on my laptop fan. The future is weirdly quiet.

Also - Hugging Face PEFT is the MVP. No cap.