Prompting for Docs: How to Generate READMEs, ADRs, and Code Comments with AI

Feb 19, 2026

Ever spent hours writing a README just to realize it’s missing the most basic setup steps? Or tried to explain a complex decision in an Architecture Decision Record (ADR) and ended up with a vague paragraph that doesn’t help anyone? You’re not alone. Teams across the industry are turning to prompt engineering to generate documentation on demand - not as a replacement for human judgment, but as a force multiplier for clarity and consistency.

AI doesn’t write docs because it understands them. It writes them because you told it exactly how to. And when you get the prompt right, you save hours. When you don’t, you get dangerously wrong advice disguised as helpful guidance. This isn’t magic. It’s a skill. And it’s becoming as essential as writing clean code.

READMEs: Your Project’s First Impression

A README is the first thing a new developer sees. If it’s confusing, they walk away. If it’s clear, they start contributing. The best AI-generated READMEs don’t just list commands - they map out a journey.

Here’s what works: Start with the objective - "Generate a README for a Python web app built with FastAPI." Then add context: "The app uses PostgreSQL, Docker, and Redis. Target audience: junior developers with basic Python knowledge." Include instructions: "Outline installation in 5 steps. Show a working API call example. Add a section on how to run tests and contribute. Use a friendly but professional tone. Format in Markdown."
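If you want to reuse that structure across projects, it helps to keep the five parts as separate fields. Here is a minimal sketch in Python; the `DocPrompt` class and its field names are made up for illustration and do not come from any library.

```python
from dataclasses import dataclass


@dataclass
class DocPrompt:
    """Five prompt components (class and field names are illustrative)."""
    objective: str
    context: str
    instructions: list[str]
    tone: str
    output_format: str

    def render(self) -> str:
        # One labeled line per component, with instructions as a bullet list.
        steps = "\n".join(f"- {step}" for step in self.instructions)
        return (
            f"Objective: {self.objective}\n"
            f"Context: {self.context}\n"
            f"Instructions:\n{steps}\n"
            f"Tone: {self.tone}\n"
            f"Format: {self.output_format}"
        )


readme_prompt = DocPrompt(
    objective="Generate a README for a Python web app built with FastAPI.",
    context=(
        "The app uses PostgreSQL, Docker, and Redis. "
        "Target audience: junior developers with basic Python knowledge."
    ),
    instructions=[
        "Outline installation in 5 steps.",
        "Show a working API call example.",
        "Add a section on how to run tests and contribute.",
    ],
    tone="Friendly but professional.",
    output_format="Markdown.",
)

print(readme_prompt.render())
```

Keeping the parts separate means you can swap the context for a different project without touching the instructions or tone.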

GitHub’s own internal benchmarks show that prompts with these five components - objective, context, instructions, tone, and format - cut README creation time from over three hours to under 25 minutes. But here’s the catch: 52% of those AI-generated READMEs still had broken code samples or outdated dependency versions. Why? Because the AI doesn’t know your project. It only knows what you tell it.
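One cheap guard against broken samples is to pull the Python code blocks out of the generated README and check that they at least parse. The sketch below assumes the README uses standard triple-backtick fences with a `python` or `py` language tag; `find_broken_python_samples` is a hypothetical helper, and a syntax check will not catch outdated dependency versions or code that parses but fails at runtime.

```python
import re

# Built at runtime so this example can itself live inside a fenced block.
FENCE = "`" * 3

# Matches an opening fence with an optional language tag, the body,
# and a closing fence at the start of a line.
CODE_BLOCK = re.compile(
    rf"^{FENCE}(\w*)\n(.*?)^{FENCE}\s*$", re.DOTALL | re.MULTILINE
)


def find_broken_python_samples(markdown: str) -> list[str]:
    """Return one message for each Python block that fails to parse."""
    problems = []
    for lang, body in CODE_BLOCK.findall(markdown):
        if lang.lower() not in {"python", "py"}:
            continue  # only Python samples can be checked this way
        try:
            compile(body, "<readme sample>", "exec")
        except SyntaxError as exc:
            problems.append(f"line {exc.lineno}: {exc.msg}")
    return problems


draft = "\n".join([
    "# Demo",
    FENCE + "python",
    "def broken(:",
    "    pass",
    FENCE,
])
print(find_broken_python_samples(draft))
```

Treat an empty result as "nothing obviously broken", not as proof the README is correct. A human still needs to run the setup steps.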

That’s why few-shot prompting matters. Include one or two real examples of READMEs from similar projects. Don’t just say "make it like this" - paste the actual text. A study from ScoutOS found that developers who used just two well-written examples improved accuracy by 48%. It’s not about quantity. It’s about quality of reference.
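In practice, "paste the actual text" just means putting the full example documents ahead of the task. Here is a minimal sketch; the `build_few_shot_prompt` helper and the `### Example README` labels are illustrative choices, not a required format.

```python
def build_few_shot_prompt(
    task: str, examples: list[str], max_examples: int = 2
) -> str:
    """Put a small number of full reference READMEs ahead of the task."""
    if not examples:
        raise ValueError("few-shot prompting needs at least one example")
    parts = []
    # Cap the number of examples: a couple of good ones beat many weak ones.
    for number, example in enumerate(examples[:max_examples], start=1):
        parts.append(f"### Example README {number}\n{example.strip()}")
    parts.append(f"### Task\n{task.strip()}")
    return "\n\n".join(parts)


prompt = build_few_shot_prompt(
    task="Generate a README for a Python web app built with FastAPI.",
    examples=[
        "# Service A\nSetup steps and a working API call go here.",
        "# Service B\nSetup steps and a working API call go here.",
    ],
)
print(prompt)
```

The placeholder example strings above stand in for real READMEs from your own projects.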

ADRs: Capturing Decisions That Matter

ADRs are where teams make architecture decisions stick. They’re not just notes. They’re the lasting record of why you chose Kafka over RabbitMQ, or why you moved from a monolith to microservices. Get this wrong, and someone five years from now will waste weeks undoing a bad call.

Most AI-generated ADRs fail because they’re superficial. "We chose Option A because it’s faster." That’s not an ADR. That’s a guess.

The winning prompt structure follows Microsoft’s ADR template: Context - what problem are you solving? Alternatives - list at least three options you considered. Decision - what did you pick? Consequences - what’s the impact on performance, cost, or maintainability?

But here’s the real trick: force the AI to reason. Add this line: "Explain your reasoning step-by-step for each alternative." MIT Sloan’s 2023 study found that prompts with this phrase improved ADR quality by 37%. Why? Because it makes the AI simulate deliberation - not just regurgitate facts.
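Put together, the four sections and the reasoning line can be assembled like this. It is a sketch only: `build_adr_prompt` is a hypothetical helper, and the problem statement, third alternative, and constraint in the example are invented for illustration.

```python
ADR_SECTIONS = ("Context", "Alternatives", "Decision", "Consequences")
REASONING_LINE = "Explain your reasoning step-by-step for each alternative."


def build_adr_prompt(
    problem: str, alternatives: list[str], constraints: list[str]
) -> str:
    """Assemble an ADR prompt that compares at least three options."""
    if len(alternatives) < 3:
        raise ValueError("an ADR prompt should list at least three alternatives")
    lines = [
        "Write an Architecture Decision Record with these sections: "
        + ", ".join(ADR_SECTIONS) + ".",
        f"Problem: {problem}",
        "Alternatives considered:",
        *[f"- {option}" for option in alternatives],
        "Known constraints:",
        *[f"- {item}" for item in constraints],
        REASONING_LINE,  # the line that asks the model to deliberate
    ]
    return "\n".join(lines)


# Hypothetical example values, not taken from a real project.
adr_prompt = build_adr_prompt(
    problem="Choose a message broker for order events.",
    alternatives=["Kafka", "RabbitMQ", "A managed cloud queue"],
    constraints=["The team has no Kafka operations experience."],
)
print(adr_prompt)
```

Refusing to build the prompt with fewer than three alternatives is a deliberate choice: it stops the "Option A because it's faster" shortcut before the AI ever sees it.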

One team at Shopify used this method to document their switch from Redis to PostgreSQL for session storage. The AI, prompted with their exact trade-offs, listed latency, backup complexity, and scaling costs. It even flagged a hidden risk: their monitoring tool didn’t support PostgreSQL metrics. They hadn’t thought of that. The AI did - because they gave it enough context to think.

Still, 63% of negative feedback on developer forums mentions "hallucinated" ADRs - where the AI invents technical details that don’t exist. A Google Cloud study showed that without explicit domain context (like "this is a blockchain payment system using Ethereum smart contracts"), inaccuracies jumped to 41%. That’s not a bug. It’s a risk.

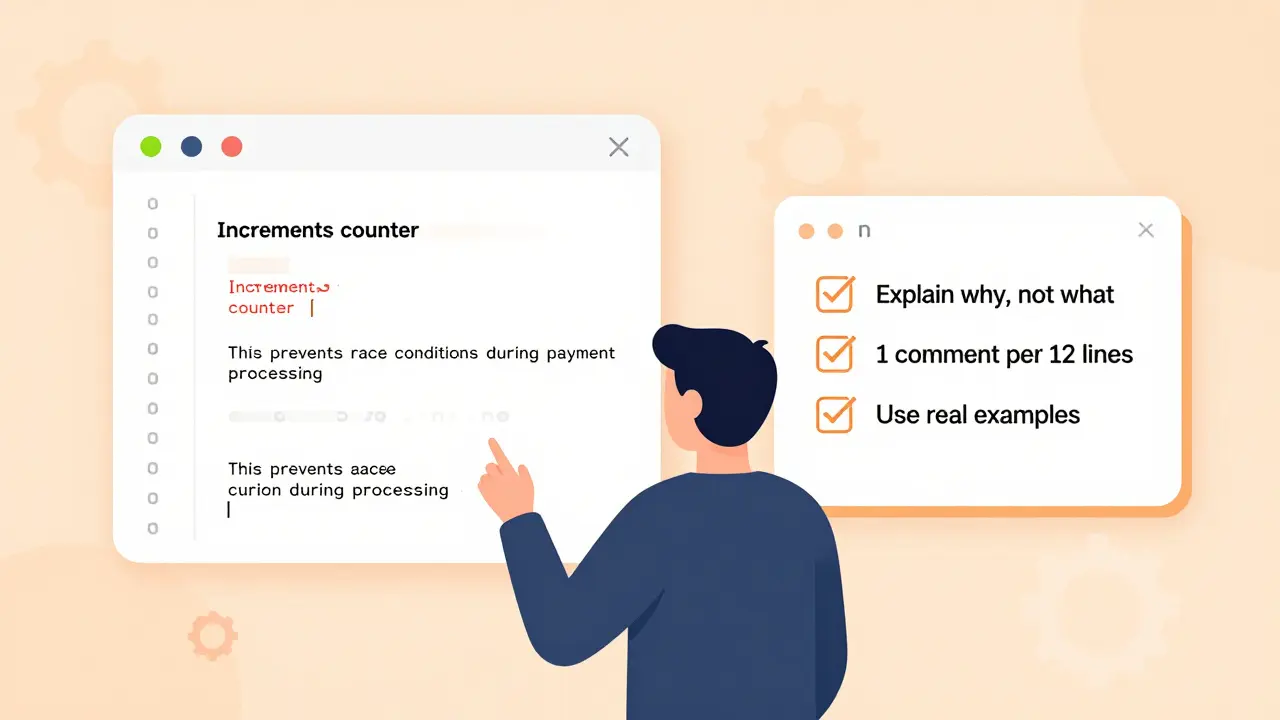

Code Comments: Explain the Why, Not the What

Everyone knows code should be commented. But most comments are useless. "Increments counter." Really? I can see that. What’s the *purpose*?

Effective comment prompts focus on why. The prompt should say: "Add comments to explain the business logic behind this function. Assume the reader knows Python syntax but not our domain. Focus on edge cases and hidden assumptions. Add roughly one comment per 10-15 lines of complex logic. Do not repeat what the code says."

ScoutOS’ March 2024 whitepaper found that comments generated with this "why over what" rule were 51% more useful to new team members. A developer at Cloudflare reported that after implementing this across 80 repos, onboarding time dropped by 30%.

Again, few-shot prompting helps. Include a before-and-after example: show a poorly commented function, then show the same function with high-quality comments. The AI learns the pattern. It doesn’t memorize. It generalizes.
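Here is the kind of before-and-after pair you might paste into the prompt. The late-fee rule, the constants, and the reasons given in the comments are all invented for illustration; use a function from your own codebase instead.

```python
GRACE_PERIOD_DAYS = 5   # hypothetical business rule
LATE_FEE_CENTS = 1_500  # hypothetical business rule


# Before: comments that only restate the code.
def balance_with_what_comments(balance_cents: int, days_overdue: int) -> int:
    # Check if days overdue is greater than grace period
    if days_overdue > GRACE_PERIOD_DAYS:
        # Add late fee to balance
        return balance_cents + LATE_FEE_CENTS
    # Return balance
    return balance_cents


# After: same logic, but the comments carry the reasoning.
def balance_with_why_comments(balance_cents: int, days_overdue: int) -> int:
    # Payments can take several days to clear, so customers are not
    # penalized until the grace period has passed. The fee is flat rather
    # than a percentage so that small balances are not rounded down to zero.
    if days_overdue > GRACE_PERIOD_DAYS:
        return balance_cents + LATE_FEE_CENTS
    # Edge case: exactly GRACE_PERIOD_DAYS overdue still counts as on time.
    return balance_cents
```

Both functions behave identically. The only difference is whether a new team member learns anything from reading them.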

But here’s the trap: over-commenting. Some teams ask for comments on every line. That’s noise. The sweet spot? 1 comment per 10-15 lines of complex logic. Anything more becomes a maintenance burden. The AI will follow your rule - if you give it one.
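If you want to check whether the output actually respects your rule, you can measure it. This sketch uses Python's standard `tokenize` module to count code lines per comment. `lines_per_comment` is a hypothetical helper, and it counts every comment line separately, so a three-line comment block counts as three comments.

```python
import io
import tokenize

# Token types that carry layout only, not code or comments.
LAYOUT_TOKENS = {
    tokenize.NL,
    tokenize.NEWLINE,
    tokenize.INDENT,
    tokenize.DEDENT,
    tokenize.ENDMARKER,
}


def lines_per_comment(source: str) -> float:
    """Return code lines per comment, or infinity if there are no comments."""
    comment_count = 0
    code_lines = set()
    for token in tokenize.generate_tokens(io.StringIO(source).readline):
        if token.type == tokenize.COMMENT:
            comment_count += 1
        elif token.type not in LAYOUT_TOKENS:
            # Record the line on which each code token starts.
            code_lines.add(token.start[0])
    if comment_count == 0:
        return float("inf")
    return len(code_lines) / comment_count


sample = "x = 1\n# why: the counter resets nightly\ny = 2\nz = x + y\n"
print(lines_per_comment(sample))
```

A result well below 10 suggests the AI is over-commenting. A very high number suggests it skipped the complex parts.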

Why This Works Better Than Tools Like Sphinx or JSDoc

Tools like Sphinx and JSDoc are documentation generators. They build docs from code structure, docstrings, and annotations. But they can’t explain why you chose a specific algorithm. They don’t know your team’s culture. They can’t adapt tone for beginners vs. experts.

Prompt-based documentation is different. It’s customizable. You can say: "Write this like a senior engineer explaining to a new hire." Or: "Use bullet points. No paragraphs. Include a diagram in Mermaid syntax."

Microsoft’s Azure team tested both approaches. Prompt-engineered READMEs were 43% faster to generate. But the real win? Consistency. Teams using prompts reported 71% fewer style variations across repositories. That’s huge when you’re managing 50+ microservices.

Traditional tools give you structure. Prompt engineering gives you voice.

The Hidden Cost: Prompt Engineering Takes Time

You can’t just type "Write a README" and call it a day. Crafting good prompts takes practice. MIT Sloan’s data shows developers need 8-12 hours of hands-on work to reliably produce accurate documentation prompts. ADRs take the longest - an average of 47 minutes per prompt to get right.

That’s why teams are building prompt libraries. GitHub’s public repository for documentation prompts has over 14,800 stars. It’s full of templates: "ADR for database migration," "README for React Native app," "comment style for legacy Java."

Start there. Don’t build from scratch. Find a prompt that matches your project type. Test it. Then tweak it. Save it. Share it. Turn your best prompts into team standards.

What’s Next? Integration and Automation

The next wave is automation. GitLab’s 2024 release now regenerates documentation every time a prompt file changes. JetBrains is embedding AI doc generation into IntelliJ IDEA. Google Cloud’s Document AI offers pre-built templates for READMEs and ADRs.

But automation doesn’t remove the need for skill. It just shifts it. Instead of writing docs, you’re now managing prompts. You’re curating examples. You’re reviewing outputs. You’re still the gatekeeper.

The teams winning here aren’t the ones using AI the most. They’re the ones who understand it the best. They know when to trust the AI. And when to say: "No. That’s wrong. Let’s fix the prompt."

Can AI-generated READMEs replace human-written ones completely?

No. AI can generate a solid draft in minutes, but it can’t replace human judgment. It doesn’t know your team’s culture, your legacy constraints, or your unspoken assumptions. Always review AI-generated READMEs for accuracy, especially around setup steps, dependencies, and contribution guidelines. The best practice is to use AI for the first draft, then edit it like you would any important document.

Why are ADRs so hard for AI to get right?

ADRs require reasoning, trade-offs, and context - not just facts. AI can list options, but it can’t truly understand the real-world consequences of choosing one technology over another. For example, it might not know that your team has no experience with Kubernetes, or that your compliance rules forbid certain cloud services. Without explicit, detailed context, AI often generates plausible-sounding but dangerously incomplete decisions. Always validate ADRs with senior engineers before approving.

How do I avoid hallucinated technical details in AI-generated docs?

Provide specific context. Don’t say "a Python app" - say "a Python 3.12 app using FastAPI 0.110, Docker Compose v2, and PostgreSQL 15, deployed on AWS ECS." Include exact versions, tools, and constraints. The more precise you are, the less the AI has to guess. Also, use few-shot prompting: include real examples from your own codebase. This anchors the AI in reality, not imagination.
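One way to make that precision a habit is to build the context sentence from pinned versions and refuse to build it when a version is missing. This is a minimal sketch; `describe_stack` is a hypothetical helper, not part of any tool.

```python
def describe_stack(
    language: str, components: dict[str, str], deployment: str
) -> str:
    """Build a precise context sentence; reject components without versions."""
    if not components:
        raise ValueError("list at least one component")
    missing = [name for name, version in components.items() if not version.strip()]
    if missing:
        raise ValueError(f"missing versions for: {', '.join(missing)}")
    listed = [f"{name} {version}" for name, version in components.items()]
    if len(listed) > 1:
        joined = ", ".join(listed[:-1]) + f", and {listed[-1]}"
    else:
        joined = listed[0]
    return f"a {language} app using {joined}, deployed on {deployment}"


context = describe_stack(
    language="Python 3.12",
    components={"FastAPI": "0.110", "Docker Compose": "v2", "PostgreSQL": "15"},
    deployment="AWS ECS",
)
print(context)
```

The error on a blank version is the useful part: it forces you to look the version up instead of letting the AI guess it.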

Should I use zero-shot or few-shot prompting for documentation?

Use few-shot prompting for ADRs and code comments. Zero-shot (no examples) works okay for simple READMEs, but it fails on anything requiring nuance. Few-shot - where you give the AI 1-3 real examples of good output - improves accuracy by 40-50%. Yes, it takes longer to set up. But the quality gain is worth it. Start with one example from a project you’re proud of.

Is this just a trend, or is it here to stay?

It’s here to stay. Gartner predicts 87% of teams will use AI-generated READMEs by 2026. The real question isn’t whether - it’s how well you’ll do it. Teams that treat prompt engineering as a skill - not a shortcut - will outperform those that treat it as magic. The future belongs to developers who can write clear instructions, not just clean code.

Next Steps: Start Small, Scale Smart

Don’t try to automate everything at once. Pick one README from a new project. Generate it with a well-crafted prompt. Edit it. Save the prompt. Use it again next time.

Then try one ADR. Use the "explain your reasoning step-by-step" trick. Compare the output to what you’d write yourself. Notice where the AI missed things. Adjust your prompt.

Finally, build a library. Create a folder in your repo called "/prompts/docs". Save your best prompts. Label them: "README - Python API", "ADR - Database Migration", "Comments - Legacy Java". Share them. Use them. Improve them.
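A prompt library can be as simple as one text file per prompt. The sketch below assumes plain `.txt` files and a label-to-filename convention invented for this example; `save_prompt` and `load_prompts` are hypothetical helpers. The demo writes to a temporary directory so it is safe to run anywhere.

```python
import tempfile
from pathlib import Path


def save_prompt(library: Path, label: str, text: str) -> Path:
    """Store one prompt as a text file named after its label."""
    library.mkdir(parents=True, exist_ok=True)
    # "README - Python API" becomes "readme-python-api.txt"
    filename = label.lower().replace(" - ", "-").replace(" ", "-") + ".txt"
    path = library / filename
    path.write_text(text, encoding="utf-8")
    return path


def load_prompts(library: Path) -> dict[str, str]:
    """Return every saved prompt, keyed by file name without the extension."""
    return {
        path.stem: path.read_text(encoding="utf-8")
        for path in sorted(library.glob("*.txt"))
    }


# Demo in a temporary directory; in a real repo, point this at prompts/docs.
with tempfile.TemporaryDirectory() as repo_root:
    library = Path(repo_root) / "prompts" / "docs"
    save_prompt(library, "README - Python API", "Generate a README for ...")
    save_prompt(library, "ADR - Database Migration", "Write an ADR for ...")
    loaded = load_prompts(library)

print(sorted(loaded))
```

Because the prompts are plain files in the repo, they go through the same review and version history as the code they describe.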

This isn’t about replacing humans. It’s about giving them back time. Time to think. Time to design. Time to fix the real problems - not write boilerplate.