Proofs of Concept to Production for Generative AI: Scaling Without Surprises

Aug 22, 2025

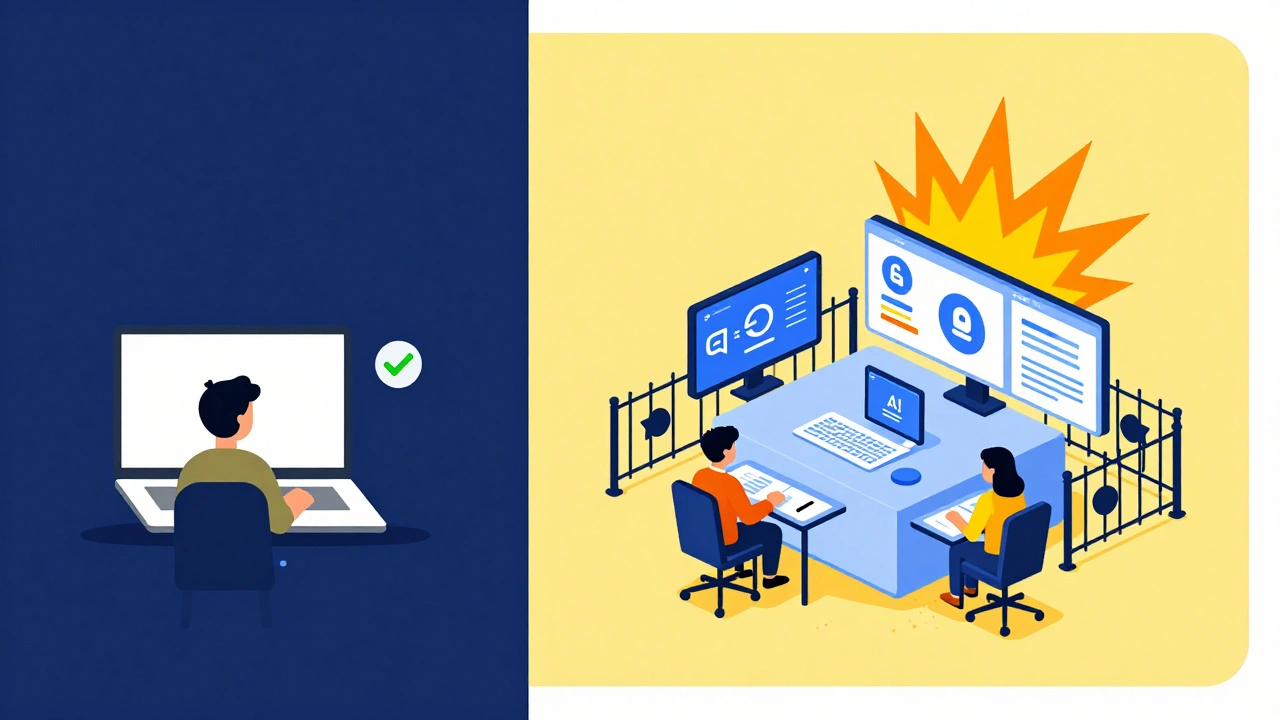

Only 14% of companies that start a generative AI proof of concept ever get it into production. That’s not a typo. It’s the harsh reality. You’ve seen the demos: chatbots that write perfect emails, image generators that create marketing assets in seconds, AI assistants that summarize reports faster than a human can read them. But when you try to move from that shiny prototype to something that runs reliably for thousands of users, things fall apart. Costs explode. Outputs turn weird. Security teams shut it down. Your CFO asks, "What did we even spend all that money on?"

Why Most PoCs Die Before They Live

A proof of concept (PoC) isn’t meant to be the final product. It’s a test. A quick experiment to answer one question: "Can this actually solve our problem?" But too many teams treat it like a finished product. They build something that works on a single laptop with a clean dataset, show it to leadership, get approval, and then vanish into a black hole of "real deployment."

The problem? Generative AI is unpredictable. A model that gives you perfect answers in a sandbox might start making up facts, repeating phrases, or spitting out biased content when real users start typing messy, unexpected inputs. Hallucinations aren’t bugs; they’re features of how these models work. And when you scale, those quirks multiply.

Gartner found that 86% of AI projects fail to reach production. Even today, after years of hype, only about 55% of enterprises have one generative AI app running live. The rest? They’re stuck in PoC purgatory.

It’s Not About the Model: It’s About the Business Case

AWS’s Peter Zuroweste says it bluntly: "Most generative AI PoCs fail because they test the foundation model rather than the business case." That’s the core mistake. Don’t start with "Can we make an AI write blog posts?" Start with: "Can we reduce our marketing team’s content creation time by 50% while keeping brand voice consistent?" Then build the PoC around that goal.

If your PoC doesn’t measure impact on a real business metric, it’s just a tech demo. Successful teams tie every step to a KPI: response time, error rate, cost per output, user satisfaction score. They don’t care if the model scores 0.6 BLEU. They care if sales reps use it daily and close 15% more deals because they’re not stuck writing emails.

Build for Production from Day One

Dr. Andrew Ng’s advice is simple: "Treat the PoC as the first iteration of production, not a separate experiment." That means building with enterprise requirements in mind from week one.

- Security isn’t an afterthought. If you’re handling customer data, encrypt it at rest and in transit. Use role-based access controls that sync with your existing IT systems. Audit logs? Non-negotiable. HIPAA, GDPR, SOC 2: plan for them early. One healthcare company spent 11 extra months just to meet compliance because they skipped this in the PoC.

- Version control for prompts and models. You wouldn’t deploy code without Git. Why deploy AI without it? Track every prompt change, every fine-tune, every model version. If output quality drops, you need to know exactly what changed.

- Infrastructure isn’t optional. Your PoC ran on a free Colab notebook. Production needs GPUs with 80GB+ VRAM, containerized deployments (Docker + Kubernetes), and API gateways that handle 500+ requests per second under 500ms latency. Don’t wait until launch day to realize your budget doesn’t cover this.

- Integrate with real systems. Does your AI need customer data from Salesforce? Order history from SAP? Product info from Snowflake? Connect those systems during the PoC. If you wait, you’ll hit integration walls no one predicted.
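One lightweight way to approach the prompt and model version control described above is to stamp every generation with a fingerprint of the exact prompt template and settings that produced it. The sketch below is only an illustration under assumptions: `PromptVersion`, `log_record`, and the model name `example-model-v1` are hypothetical and not part of any specific tool.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class PromptVersion:
    """A prompt template plus the model settings it was tested with."""
    name: str
    template: str
    model: str          # hypothetical model identifier
    temperature: float

    def fingerprint(self) -> str:
        # Hash a canonical JSON form so any change to the template or
        # settings produces a different version ID.
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def log_record(prompt: PromptVersion, user_input: str, output: str) -> dict:
    """Build an audit-log entry tying an output to the exact prompt version."""
    return {
        "prompt_name": prompt.name,
        "prompt_version": prompt.fingerprint(),
        "model": prompt.model,
        "input": user_input,
        "output": output,
    }


# Two revisions of the same prompt get different version IDs.
v1 = PromptVersion("social_post", "Write a post about {product}.",
                   "example-model-v1", 0.7)
v2 = PromptVersion("social_post", "Write a friendly post about {product}.",
                   "example-model-v1", 0.7)
print(v1.fingerprint(), v2.fingerprint())
```

If output quality drops, filtering logs by `prompt_version` shows which revision was live at the time. The templates themselves can still be stored in Git alongside the code.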

Costs Don’t Stay the Same: They Multiply

PoCs are cheap. Production is expensive. Why? Because you’re not just running one model. You’re running it securely, monitored, backed up, audited, and scaled. Companies see a 20-30% cost increase just from adding enterprise-grade security. Add guardrails to reduce hallucinations, and you need 3-5x more training data. Monitoring output quality across thousands of users? That’s more compute. More storage. More engineering time. The fix? Start measuring cost per output from day one. Track dollars per 1,000 prompts. Compare it to the cost of human labor doing the same task. If your AI costs more than a junior copywriter, you’ve failed.
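The comparison above comes down to simple arithmetic. Here is a minimal sketch, assuming you can total your all-in AI spend (compute, monitoring, engineering time) for a period and count the prompts served. The function names and the dollar figures are hypothetical placeholders, not benchmarks.

```python
def cost_per_1k_prompts(total_spend_usd: float, prompt_count: int) -> float:
    """All-in AI spend normalized to dollars per 1,000 prompts."""
    if prompt_count <= 0:
        raise ValueError("prompt_count must be positive")
    return total_spend_usd * 1000 / prompt_count


def human_cost_per_1k_tasks(hourly_rate_usd: float, minutes_per_task: float) -> float:
    """Labor cost of a person doing the same task 1,000 times."""
    return hourly_rate_usd * minutes_per_task * 1000 / 60


# Hypothetical inputs: replace with your own billing and payroll data.
ai_cost = cost_per_1k_prompts(total_spend_usd=4200.0, prompt_count=60000)
human_cost = human_cost_per_1k_tasks(hourly_rate_usd=30.0, minutes_per_task=20.0)

print(f"AI:    ${ai_cost:,.2f} per 1,000 prompts")
print(f"Human: ${human_cost:,.2f} per 1,000 tasks")
print("AI is cheaper" if ai_cost < human_cost else "AI is not cheaper")
```

The comparison is only fair if `total_spend_usd` includes security, monitoring, and review time, not just model inference.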

Guardrails Are Not Optional

Generative AI doesn’t know right from wrong. It doesn’t know what’s confidential or harmful. That’s why you need guardrails: rules that stop bad outputs before they happen.

- Content filters. Block profanity, PII, hate speech, or anything that violates your policies.

- Knowledge grounding. Force the AI to answer only from approved documents. Don’t let it guess. Use RAG (Retrieval-Augmented Generation) to pull answers from your internal docs, not the open web.

- Output validation. Run every response through a fact-checking layer. If it mentions a product feature not in your database, flag it. Use automated checks to hit ≥95% factual accuracy for mission-critical uses.
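To make the filter and validation ideas above concrete, here is a deliberately small sketch. It assumes an upstream step has already extracted the product features a response claims (`claimed_features`). The blocked-term list, the approved-feature set, and all function names are hypothetical, and a real deployment would need far broader PII and policy coverage than one email regex.

```python
import re

# Hypothetical policy data; in practice this comes from your own systems.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
BLOCKED_TERMS = {"idiot", "failure"}
APPROVED_FEATURES = {"offline mode", "single sign-on"}


def redact_pii(text: str) -> str:
    """Content filter: mask email addresses before the text leaves the system."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


def violates_policy(text: str) -> bool:
    """Content filter: true if any blocked term appears as a whole word."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return bool(words & BLOCKED_TERMS)


def unknown_features(claimed: list[str]) -> list[str]:
    """Output validation: features the response mentions that are not approved."""
    return [f for f in claimed if f.lower() not in APPROVED_FEATURES]


def check_response(text: str, claimed_features: list[str]) -> dict:
    """Run all checks and decide whether a human should review the response."""
    unknown = unknown_features(claimed_features)
    blocked = violates_policy(text)
    return {
        "text": redact_pii(text),
        "blocked": blocked,
        "unverified_features": unknown,
        "needs_review": blocked or bool(unknown),
    }


result = check_response(
    "Contact jane@example.com about offline mode and teleportation.",
    ["offline mode", "teleportation"],
)
print(result)
```

Flagging rather than silently rewriting keeps a human in the loop for anything the checks cannot verify.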

Monitor Like Your Business Depends on It, Because It Does

You wouldn’t run a server without logs. Why run an AI without monitoring? Successful teams track two things: technical performance and business impact.

- Technical: Latency, error rates, token usage, model version, hallucination frequency.

- Business: How many users are using it? Are they happy? Are they completing tasks faster? Are sales increasing?
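The technical metrics listed above can be rolled up from per-request logs. The following is a minimal sketch, assuming each request is logged with its latency, token count, an error flag, and a flag set by whatever hallucination check you run. `RequestLog` and `summarize` are hypothetical names, and the percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestLog:
    latency_ms: float
    tokens: int
    error: bool
    flagged_hallucination: bool


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile; pct is in the range (0, 100]."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(logs: list[RequestLog]) -> dict:
    """Aggregate request logs into the technical metrics worth alerting on."""
    n = len(logs)
    if n == 0:
        raise ValueError("logs must not be empty")
    return {
        "requests": n,
        "p95_latency_ms": percentile([entry.latency_ms for entry in logs], 95),
        "error_rate": sum(entry.error for entry in logs) / n,
        "hallucination_rate": sum(entry.flagged_hallucination for entry in logs) / n,
        "avg_tokens": sum(entry.tokens for entry in logs) / n,
    }


sample = [
    RequestLog(100.0, 50, False, False),
    RequestLog(200.0, 70, False, True),
    RequestLog(300.0, 60, True, False),
    RequestLog(400.0, 20, False, False),
]
print(summarize(sample))
```

Business metrics such as active users and task completion time would come from product analytics and sit next to these numbers on the same dashboard.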

People Are the Real Bottleneck

The biggest surprise? It’s not the tech. It’s the people. MIT Sloan found that 73% of AI failures happen because of poor change management. Employees don’t trust it. Managers don’t understand it. Legal says no. Sales doesn’t know how to use it. Fix this by building cross-functional teams from day one. Include:

- Business owners (who care about results)

- IT and security (who care about risk)

- Legal and compliance (who care about rules)

- End users (who will actually use it)

What Success Looks Like

A marketing team at a Fortune 500 company wanted to cut content creation time. Their PoC: AI generates social posts from product specs. They didn’t stop at "it writes good posts." They:

- Set a goal: reduce time per post from 2 hours to 30 minutes.

- Connected to their CRM and product database.

- Added a human review step with feedback tracking.

- Monitored output quality daily.

- Trained 12 marketers on how to prompt it effectively.

The Roadmap: From PoC to Production in 8 Weeks

Here’s how to do it without surprises:

- Week 1: Define the business goal. What problem are you solving? What’s the measurable outcome? Get sign-off from stakeholders.

- Week 2: Build the team. Include business, IT, security, legal, and end users. No exceptions.

- Week 3: Set up the environment. Secure data access. Connect to real systems. Set up version control for prompts and models.

- Week 4: Build the PoC with guardrails. Add filters, grounding, and validation. Don’t skip this.

- Week 5: Test with real users. Not just developers. Real people doing real work. Collect feedback.

- Week 6: Measure everything. Cost per output. Accuracy. Speed. User satisfaction.

- Week 7: Plan for scaling. Infrastructure, monitoring, training materials, support process.

- Week 8: Launch with a pilot. Roll out to 10 users, not 1,000. Watch closely. Fix what breaks. Then expand.
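The Week 8 advice (start with a handful of users, then expand) is often implemented as a feature gate. Below is one possible sketch, assuming users have stable string IDs. `in_pilot` and `rollout_bucket` are hypothetical helpers: a named pilot list always gets access, and everyone else is admitted by a deterministic hash bucket once you raise the percentage.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def in_pilot(user_id: str, pilot_users: set[str], rollout_percent: int) -> bool:
    """Named pilot users always get access; others join as the percentage grows."""
    if user_id in pilot_users:
        return True
    return rollout_bucket(user_id) < rollout_percent


# Hypothetical pilot: named users only, with 0% general rollout.
pilot = {"alice", "bob"}
print(in_pilot("alice", pilot, rollout_percent=0))   # pilot member
print(in_pilot("carol", pilot, rollout_percent=0))   # not yet included
```

Because the bucket is derived from the user ID, a user who gets access at 10% keeps it at 25%, which keeps feedback consistent as the rollout expands.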

What’s Next?

By 2025, 70% of enterprise AI systems will have automated hallucination detection. 85% will use optimization tools to cut costs by half. The winners won’t be the ones with the fanciest models. They’ll be the ones who built the process.

The gap between PoC and production isn’t technical. It’s cultural. It’s about treating AI like a business tool, not a toy. Start with the problem. Build with discipline. Monitor like your future depends on it. And never forget: the goal isn’t to impress with a demo. It’s to deliver real value: reliably, safely, and at scale.

Why do most generative AI PoCs fail to reach production?

Most PoCs fail because they focus only on technical feasibility, not business impact. Teams build something that works in a controlled environment but skip critical production needs like security, cost control, user training, and monitoring. Without these, scaling leads to unexpected costs, hallucinations, compliance violations, and user rejection.

What’s the biggest mistake companies make when scaling generative AI?

The biggest mistake is treating the PoC as a one-off experiment instead of the first version of a production system. Waiting to add security, version control, or monitoring until after approval leads to costly rework. The most successful teams build for production from day one, even in the PoC phase.

How do you measure if a generative AI deployment is successful?

Success isn’t measured by model accuracy alone. Track business outcomes: Did response times drop? Did user satisfaction rise? Did it reduce labor costs? Combine technical metrics like hallucination rate and latency with human feedback scores (aim for ≥4.2/5). If the tool isn’t helping people do their jobs better, it’s not working.

Do I need special infrastructure to run generative AI in production?

Yes. Production needs GPU instances with at least 80GB VRAM, containerized deployment (Docker/Kubernetes), API gateways handling 500+ requests per second with under 500ms latency, and secure data pipelines. Cloud platforms like AWS Bedrock or Google Vertex AI simplify this. Trying to run production AI on free tools or laptops will fail under load.

How do you prevent AI hallucinations in production?

Use retrieval-augmented generation (RAG) to ground responses in your company’s verified documents. Add content filters to block unsafe outputs. Implement automated fact-checking layers that flag uncertain answers. Train the model on high-quality, domain-specific data. And always include human review for critical use cases, especially in healthcare, finance, or legal fields.

What’s the biggest hidden cost of generative AI in production?

It’s not the compute power; it’s the human effort. Training users, managing feedback loops, monitoring outputs, updating knowledge bases, and handling edge cases take far more time than most teams expect. Organizations that underestimate this spend 2-3x more on support than on infrastructure.

Which industries are leading in generative AI production deployment?

Financial services lead with 61% of firms running generative AI in production, followed by healthcare (47%) and retail (39%). Manufacturing lags at 28%, mainly due to outdated legacy systems that are hard to connect to AI tools. Industries with strong data infrastructure and regulatory pressure tend to adopt faster.

NIKHIL TRIPATHI

December 14, 2025 AT 01:07

Been there. Did the PoC, got the applause, then spent 6 months fixing security holes we ignored because "it was just a prototype." Turned out our CFO had a spreadsheet tracking every dollar spent on "AI magic" and we were the only team with a 0 ROI.

Now we build guardrails before we even write the first prompt. It’s slower, yeah, but at least we’re not getting flagged by legal every week.

Shivani Vaidya

December 15, 2025 AT 22:01

The emphasis on business outcomes over technical novelty is not merely advisable; it is imperative. Without alignment to measurable organizational objectives, generative AI remains an intellectual exercise devoid of operational relevance. Integration with legacy systems, compliance frameworks, and human workflow must precede model selection.

Rubina Jadhav

December 16, 2025 AT 04:47

My boss tried to launch an AI email bot. It wrote "Dear Customer, you are a failure and your refund is denied because you are bad." We shut it down. Lesson learned: guardrails first.

sumraa hussain

December 17, 2025 AT 00:19

OH MY GOD YES. I watched a team spend $200K on a PoC that worked perfectly on one guy’s laptop with a clean dataset… then when real users started typing "how do i cancel my subscription??" the AI started quoting Shakespeare and accusing customers of being "fraudulent peasants."

Now they’re using RAG, have a human review loop, and I actually sleep at night. The difference? Discipline. Not magic.

Raji viji

December 17, 2025 AT 13:03

LMAO another fluffy article pretending AI is hard. Everyone knows the real issue: companies hire data scientists who think "fine-tuning" means changing the font size in their Jupyter notebook. Then they act shocked when the model starts writing conspiracy theories about the CFO.

Stop wasting money on models. Start firing people who think "PoC" means "free pass to ignore security."

Rajashree Iyer

December 18, 2025 AT 10:02

What is production, really? A mirror of our collective anxiety? We build AI to escape the chaos of human labor, yet we fear its autonomy. Is it the model that hallucinates… or is it our refusal to accept that we no longer control the narrative?

The PoC dies not because of cost or compliance, but because we are not ready to face the truth: we built a god, and now we are afraid to pray to it.

Parth Haz

December 18, 2025 AT 18:00

This is one of the clearest breakdowns I’ve seen on bridging the gap between AI experimentation and real-world impact. The 8-week roadmap is gold. Especially the part about training users during the PoC, not after. Too many teams treat adoption as an afterthought, then wonder why adoption is 3%.

Well done. This should be required reading for every tech lead.

Vishal Bharadwaj

December 20, 2025 AT 07:51

Actually most of this is wrong. Gartner’s 86% stat? That includes failed ML projects from 2018, like predictive maintenance for toaster ovens. And 14% success rate? That’s if you count only Fortune 500 companies that had 10 engineers and a $5M budget. My startup deployed an AI in 3 weeks with no guardrails and it works fine. Who cares if it occasionally says "the moon is made of cheese"? Users laugh and move on.

You’re overengineering. AI isn’t nuclear power. Chill.

anoushka singh

December 22, 2025 AT 02:45

wait so you’re saying i need to talk to legal and IT before i can make my AI write instagram captions??

but… but it was supposed to be fun 😭

can’t we just… make it say "hi" and then stop?

why does everything have to be so… complicated?

Jitendra Singh

December 23, 2025 AT 10:29

Great breakdown. I’d add one thing: the people who use the AI need to feel like they’re teaching it, not just feeding it. When marketers started giving feedback on output quality, not just saying "this is bad," but "this sounds too robotic, try more casual," the quality jumped. It’s not just about filters; it’s about co-creation.