Security Telemetry and Alerting for AI-Generated Applications: What You Need to Know

Jul, 29 2025

AI-generated applications don’t behave like traditional software. They don’t follow fixed rules. They learn, adapt, and sometimes make decisions that even their creators can’t fully explain. That’s why security telemetry and alerting for AI apps isn’t just an upgrade; it’s a complete rewrite of how we think about security.

What Makes AI Telemetry Different?

Traditional security tools watch for known bad patterns: unusual logins, strange file accesses, suspicious network traffic. But AI applications generate outputs based on probabilities, not fixed code paths. A model might output a harmful response not because it was hacked, but because its training data had a subtle bias. Or it might suddenly start generating garbage text, not because it’s compromised, but because its confidence has fallen below a usable level. Security telemetry for AI apps has to track both the what and the how. That means monitoring:

- Model confidence scores during inference

- Prompt injection attempts (like feeding malicious inputs to trick the AI)

- Model drift (when the AI’s behavior changes over time)

- Data poisoning signals (when training data gets corrupted)

- Model inversion attacks (where attackers reverse-engineer sensitive training data from outputs)

Standard SIEM systems can’t do this. You need telemetry that understands machine learning behavior. Companies like Splunk and IBM now offer modules that ingest model logs, API request patterns, and inference metrics alongside traditional firewall and EDR data.
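A SIEM can only analyze model behavior if each inference is logged as a structured event next to the usual firewall and EDR data. The sketch below shows one possible shape for such an event, emitted as a JSON line. The `InferenceEvent` class and its field names are hypothetical and are not a Splunk or IBM schema. It records the prompt length rather than the raw prompt text. It also keeps the confidence score numeric so that downstream baselines and range queries work.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class InferenceEvent:
    """One model inference, flattened into fields a SIEM can index.

    Hypothetical schema for illustration; not a Splunk or IBM format.
    """
    model_id: str
    model_version: str
    client_id: str
    prompt_chars: int          # prompt length only; raw text may contain sensitive data
    confidence: float          # keep numeric so baselines and range queries work
    latency_ms: float
    flagged_injection: bool = False
    timestamp: float = field(default_factory=time.time)

    def to_json(self) -> str:
        # One JSON object per line ("JSON Lines") is easy for log shippers to ingest.
        return json.dumps(asdict(self), sort_keys=True)


event = InferenceEvent(
    model_id="support-bot",
    model_version="2025-07-01",
    client_id="tenant-42",
    prompt_chars=318,
    confidence=0.87,
    latency_ms=412.5,
)
print(event.to_json())
```

In a real pipeline this line would go to whatever log shipper already feeds the SIEM, not to stdout.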

The Data You Can’t Ignore

AI applications generate 3 to 5 times more telemetry data than traditional apps. Why? Because every prediction, every input, every confidence level is a data point. A customer service AI might process 50,000 prompts a day. Each one needs to be logged, analyzed, and compared against baseline behavior. Here’s what your telemetry pipeline should capture:

- Input anomalies: Are users sending unusual prompts? Are they trying to bypass filters with obfuscated text?

- Output consistency: Is the AI suddenly generating responses that are far less accurate or more aggressive than before?

- API usage spikes: Is someone making thousands of requests in minutes? That could be an automated attack trying to extract training data.

- Model retraining triggers: When the model updates, does the new version behave differently? Did someone sneak in bad data during retraining?

- Edge device telemetry: If the AI runs on a mobile app or IoT device, you need local monitoring. Latency matters: processing data on-device reduces blind spots.
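The API-usage-spike check is the simplest of these to sketch. A minimal version assumes you can attribute each request to a client ID. It keeps a sliding time window of request timestamps per client and flags any client that exceeds a limit. The `RateSpikeDetector` name, the 60-second window and the request limit are illustrative assumptions. In practice the limit should come from each application's observed baseline.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque


class RateSpikeDetector:
    """Flags clients whose request count inside a sliding time window exceeds a limit."""

    def __init__(self, window_seconds: float = 60.0, max_requests: int = 300):
        self.window = window_seconds
        self.max_requests = max_requests
        # client_id -> timestamps of that client's requests still inside the window
        self._hits: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def record(self, client_id: str, ts: float) -> bool:
        """Record one request at time `ts` (seconds); return True if the client is over the limit."""
        q = self._hits[client_id]
        q.append(ts)
        # Evict timestamps that have slid out of the window.
        while q and q[0] <= ts - self.window:
            q.popleft()
        return len(q) > self.max_requests


detector = RateSpikeDetector(window_seconds=60.0, max_requests=100)
# Simulate a scraper sending 5 requests per second for 30 seconds.
alerts = [detector.record("scraper", i * 0.2) for i in range(150)]
print(alerts.index(True))                    # 100 (the 101st request)
print(detector.record("normal-user", 10.0))  # False
```

A fixed per-client limit is deliberately crude. It catches the bulk-extraction pattern described above, but it says nothing about whether a given volume is unusual for a particular model.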

NetScout’s AI Sensor and Arctic Wolf’s MDR platform both use Deep Packet Inspection and real-time behavioral analysis to catch these patterns. One fintech company saw a 65% drop in false positives after six months of tuning their telemetry to understand normal AI variance.

Alerting Isn’t Just About Thresholds Anymore

You can’t set a rule like “alert if error rate > 5%” and expect it to work. AI models naturally have fluctuating error rates. A 7% error rate might be normal for one model and catastrophic for another. Effective alerting for AI apps needs:

- Behavioral baselines: The system learns what “normal” looks like for each model over time, before any alerts are set.

- Context-aware triggers: For example, an alert for a prompt injection only fires if it’s followed by a spike in API calls and a drop in output quality.

- Correlation engines: Linking a suspicious API call to a model drift event and a change in user feedback scores. That’s how Arctic Wolf stopped a ransomware attack: not because of a single alert, but because of the pattern across three unrelated data streams.
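The context-aware trigger described above can be written as a small correlation rule. In it, a prompt-injection flag only becomes an alert when both an API spike and an output-quality drop follow. This is a minimal sketch that assumes upstream detectors already emit labeled signals per client. The `Signal` type, the signal names and the 10-minute window are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Signal:
    kind: str    # hypothetical labels: "prompt_injection", "api_spike", "quality_drop"
    source: str  # client or model the signal is attributed to
    ts: float    # seconds since epoch


def correlated_alerts(signals: Iterable[Signal], window: float = 600.0) -> List[str]:
    """Return sources where a prompt-injection signal is followed, within `window`
    seconds, by BOTH an API spike and a quality drop. No single signal alerts alone."""
    by_source: Dict[str, List[Signal]] = {}
    for sig in sorted(signals, key=lambda sig: sig.ts):
        by_source.setdefault(sig.source, []).append(sig)

    alerts = []
    for source, events in by_source.items():
        for i, event in enumerate(events):
            if event.kind != "prompt_injection":
                continue
            # Kinds of signals that arrive after the injection flag, inside the window.
            followers = {f.kind for f in events[i + 1:] if f.ts - event.ts <= window}
            if {"api_spike", "quality_drop"} <= followers:
                alerts.append(source)
                break  # one alert per source is enough
    return alerts


signals = [
    Signal("prompt_injection", "tenant-7", 100.0),
    Signal("api_spike", "tenant-7", 160.0),
    Signal("quality_drop", "tenant-7", 400.0),
    Signal("prompt_injection", "tenant-9", 100.0),  # injection flag alone
    Signal("api_spike", "tenant-3", 50.0),          # spike alone
]
print(correlated_alerts(signals))  # ['tenant-7']
```

Requiring all three signals trades some sensitivity for far fewer pages. Tightening `window` makes the rule stricter still: with `window=200.0`, tenant-7's quality drop arrives too late and no alert fires.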

Early adopters report 45% faster threat detection, but also a 30% higher false positive rate in the first few months. That’s normal. It takes time to train the system to tell the difference between a model glitch and a real attack.

The Black Box Problem

Here’s the biggest headache: most AI models are black boxes. You can’t see how they arrived at a decision. So how do you know whether an odd output is a security issue or just how the model works? MITRE’s 2023 ATT&CK for AI framework found that current telemetry systems capture less than 40% of the real attack surface. Why? Because they can’t see the internal logic.

That’s why the industry is pushing for “transparent telemetry”: systems that combine anomaly detection with explainability tools. Microsoft’s Azure AI Security Benchmark and Google’s Vertex AI Model Security Dashboard now include built-in explainability features. They don’t just say “this output is risky.” They say “this output was triggered by input X, which had a 92% similarity to known adversarial examples.” That’s a game-changer. It turns a vague alert into an actionable insight.

Who’s Doing This Right?

Financial services lead adoption: 42% of firms now require AI telemetry in their RFPs. Why? Because fraud and compliance risks are too high. One bank caught a data poisoning attempt during model retraining. The attacker had injected fake loan applications into the training set to make the model approve risky loans. The telemetry flagged it because the model’s output distribution suddenly shifted, and the training data provenance logs showed an unauthorized upload.

Healthcare companies use it to detect biased outputs in diagnostic AI. A hospital’s AI was recommending lower treatment intensity for elderly patients. Telemetry showed the model was over-relying on age as a proxy for comorbidities. That wasn’t a hack; it was bias. But without telemetry tracking output fairness metrics, they’d never have known.

Startups like Robust Intelligence and Arthur AI specialize in this. They don’t just monitor; they help you understand what the AI is doing. Their platforms map attack vectors directly to the AI lifecycle: training, validation, deployment, inference.
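The bank's catch depended on noticing that the model's output distribution shifted after retraining. One common way to quantify that kind of shift is the Population Stability Index (PSI), computed over binned model outputs before and after retraining. It is not necessarily what this bank used. The bucket shares below are made-up illustrative numbers. The 0.25 cutoff is a widely used rule of thumb from credit-risk modeling, not a standard.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Each argument holds the share of outputs falling into each bin (summing to ~1).
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


# Illustrative share of loan applications per approval-score bucket (low -> high).
baseline = [0.30, 0.40, 0.20, 0.10]   # model before retraining
candidate = [0.10, 0.25, 0.30, 0.35]  # model after retraining, skewed toward approval
score = psi(baseline, candidate)
print(f"PSI = {score:.3f}")  # PSI = 0.644
if score > 0.25:  # rule-of-thumb cutoff for a significant shift
    print("Output distribution shifted: hold the release and review training-data provenance.")
```

Running this check as a gate in the retraining pipeline means a poisoned model is caught before deployment rather than after it starts approving loans.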

Where It Falls Short

Despite progress, three big gaps remain:

- Skills shortage: Only 22% of cybersecurity pros have machine learning experience. You need people who understand both SIEMs and neural networks.

- Tool complexity: Splunk’s AI modules score 4.2/5 on G2, but users say setup takes 3 to 6 months, twice as long as for traditional tools.

- No standards: There’s no universal metric for “secure AI behavior.” NIST’s AI Risk Management Framework says you need continuous monitoring but doesn’t say how.

One Reddit user from a tech startup said they spent four months building custom telemetry pipelines just to distinguish between normal model variance and real attacks. That’s not scalable.

What You Should Do Now

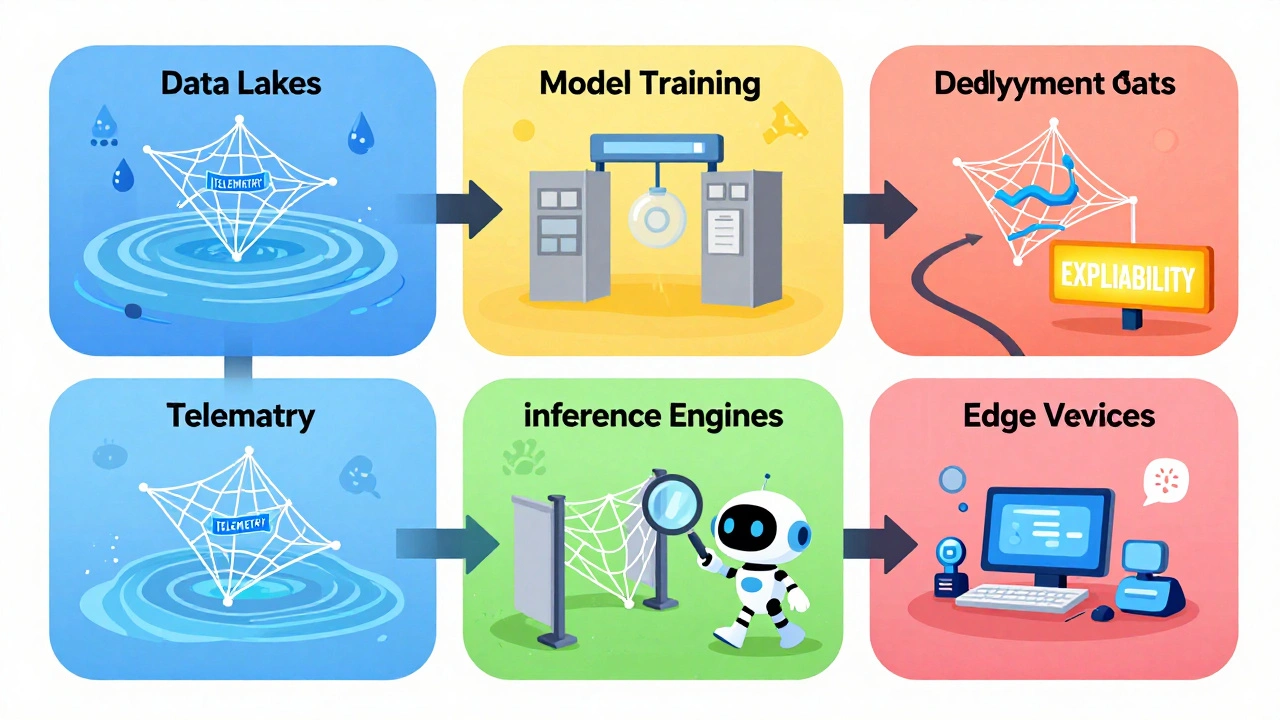

If you’re deploying AI-generated apps, here’s your checklist:

- Start with your AI lifecycle: Map where the AI is trained, validated, deployed, and used. Telemetry must cover every stage.

- Integrate with MLOps: Your CI/CD pipeline for AI should feed telemetry data into your security platform. Don’t wait until deployment.

- Choose tools with explainability: Avoid black-box monitoring. Demand tools that tell you why something is flagged.

- Build baselines before alerting: Let the system learn normal behavior for at least 30 days before turning on alerts.

- Train your SOC: Bring in ML engineers to help interpret AI-specific alerts. This isn’t a job for traditional analysts.
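The "build baselines before alerting" step can be sketched as a per-model baseline that stays silent during a warm-up period. This sketch assumes one sample per day of a health metric, such as a daily error rate, so `min_samples=30` stands in for the 30-day guidance. The class name, the z-score threshold of 3 and the choice to keep flagged values out of the baseline are all assumptions.

```python
import math


class WarmupBaseline:
    """Per-model baseline for one metric that only alerts after a warm-up period.

    Mean and variance are tracked with Welford's online algorithm.
    Hypothetical helper for illustration, not a vendor API.
    """

    def __init__(self, min_samples: int = 30, z_threshold: float = 3.0):
        self.min_samples = max(min_samples, 2)  # need 2+ samples for a variance
        self.z_threshold = z_threshold
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def observe(self, x: float) -> bool:
        """Return True if x is an outlier against a completed baseline."""
        if self.n >= self.min_samples:
            std = math.sqrt(self._m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                return True  # flagged values are NOT folded into the baseline
        # Welford update: fold x into the running mean and variance.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)
        return False


# A model whose daily error rate normally hovers around 7%.
baseline = WarmupBaseline(min_samples=30)
warmup = [baseline.observe(0.08 if day % 2 == 0 else 0.06) for day in range(30)]
print(any(warmup))             # False: no alerts during warm-up
print(baseline.observe(0.07))  # False: within normal variance
print(baseline.observe(0.20))  # True: far outside the learned baseline
```

Keeping flagged values out stops a sustained attack from quietly becoming the new normal. The cost is that a legitimate model update needs a deliberate re-baseline.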

Don’t wait for a breach to realize your AI app is vulnerable. The attacks are already happening. In 2023, IBM’s X-Force reported a 52% faster incident response time for teams using AI-specific telemetry. That’s not a luxury; it’s a survival tool.

What’s Coming Next

By 2026, Gartner predicts 70% of security telemetry tools will use causal AI, meaning they’ll stop just noticing patterns and start figuring out what caused them. That is expected to cut false positives by up to 60%.

We’re also seeing the rise of self-adapting telemetry: systems that automatically adjust their monitoring rules based on how the AI behaves. If a model starts generating more responses with low confidence, the telemetry will automatically increase scrutiny on its inputs. No human needed.

The future isn’t just better alerts. It’s smarter context: telemetry that doesn’t just say “something’s wrong” but explains it in terms you can act on.

What’s the difference between traditional security telemetry and AI-specific telemetry?

Traditional telemetry watches for known malicious patterns, like unusual logins or file changes. AI-specific telemetry tracks how the model behaves: its confidence scores, input/output consistency, drift over time, and susceptibility to adversarial prompts. It’s not just about what the app does, but how it thinks.

Can I use my existing SIEM for AI applications?

You can, but you’ll miss most AI-specific threats. Standard SIEMs don’t understand model confidence, prompt injection, or data poisoning. You need add-ons or specialized platforms that ingest AI model logs, API metrics, and inference data. Tools like Splunk AI modules or IBM QRadar with AI extensions are designed for this.

Why are false positives so high with AI telemetry?

AI models naturally produce variable outputs. A drop in confidence or a slight shift in response style isn’t always an attack; it could be normal model variance. Early telemetry systems treat all deviations as threats. It takes months of tuning and behavioral baselining to reduce false positives. Start with monitoring only, then slowly enable alerts.

Do I need machine learning experts on my security team?

Yes, at least one. Only 22% of cybersecurity professionals have ML experience. You need someone who can interpret model logs, understand training data flows, and tell the difference between a bias issue and a real attack. If you can’t hire one, partner with an AI security vendor that provides managed services.

What’s the biggest risk if I don’t implement AI telemetry?

You won’t know when your AI is being manipulated. Attackers can poison training data, inject harmful prompts, or extract sensitive information through model queries, all without triggering traditional alerts. Without telemetry, you’re flying blind. In regulated industries like finance or healthcare, that could mean compliance violations, lawsuits, or reputational damage.

Lissa Veldhuis

December 12, 2025 AT 18:17

AI telemetry is just corporate buzzword bingo now

allison berroteran

December 13, 2025 AT 01:04

I've been watching AI models in production for over two years now and honestly the biggest issue isn't the attacks; it's how little we understand what 'normal' even looks like. Every model behaves differently, even if they're trained on the same data. One day your customer service bot is chill and helpful, the next it's generating poetic rants about capitalism because some intern accidentally fed it a Reddit thread from 2018. You can't just set thresholds; you have to build a living, breathing understanding of each model's personality. It's less like monitoring a server and more like raising a very smart, very unpredictable teenager.

Michael Jones

December 14, 2025 AT 23:16

we're building castles on sand here

the real problem isn't telemetry it's that we're letting algorithms make decisions we don't understand and then pretending we can secure them with more data

what if the answer isn't more monitoring but less automation

what if we stopped trying to make ai perfect and started designing systems that don't rely on it for critical decisions

we're so obsessed with the tech we forgot to ask if we should even be using it

Gabby Love

December 15, 2025 AT 13:51

Just a quick note: if you're using Splunk's AI modules, make sure you're ingesting the inference logs with the right schema. We had false positives for weeks because the confidence score field was being parsed as a string instead of a float. Simple fix, huge difference.

Jen Kay

December 16, 2025 AT 23:31

It's funny how we treat AI like it's some mysterious alien force when really it's just code trained on human garbage. The 'black box' problem? We built it that way. The drift? We fed it toxic data and called it 'real-world training.' The attacks? We didn't even patch the API endpoints. Telemetry isn't the solution; it's the Band-Aid on a bullet wound. We need to stop outsourcing our ethics to algorithms and start taking responsibility for what we put into them.

Michael Thomas

December 17, 2025 AT 16:56

USA leads in AI security. Rest of the world is still playing catch-up. Get with it.

Abert Canada

December 18, 2025 AT 21:31

As someone from Canada who's worked with both US and EU teams on this, the real gap isn't tech; it's culture. Americans want to monitor everything, Europeans want to shut it down, and no one talks to the engineers who actually built the model. We need more cross-team huddles, not more dashboards. Also, if your SOC team doesn't know what a transformer is, you're already behind.

David Smith

December 20, 2025 AT 03:28

So let me get this straight: we're spending millions to monitor AI because we're too lazy to write proper code? This is why startups fail. You don't fix broken systems with more telemetry. You fix them by not building them in the first place. Also, I'm pretty sure this entire post was written by an LLM. Am I right?