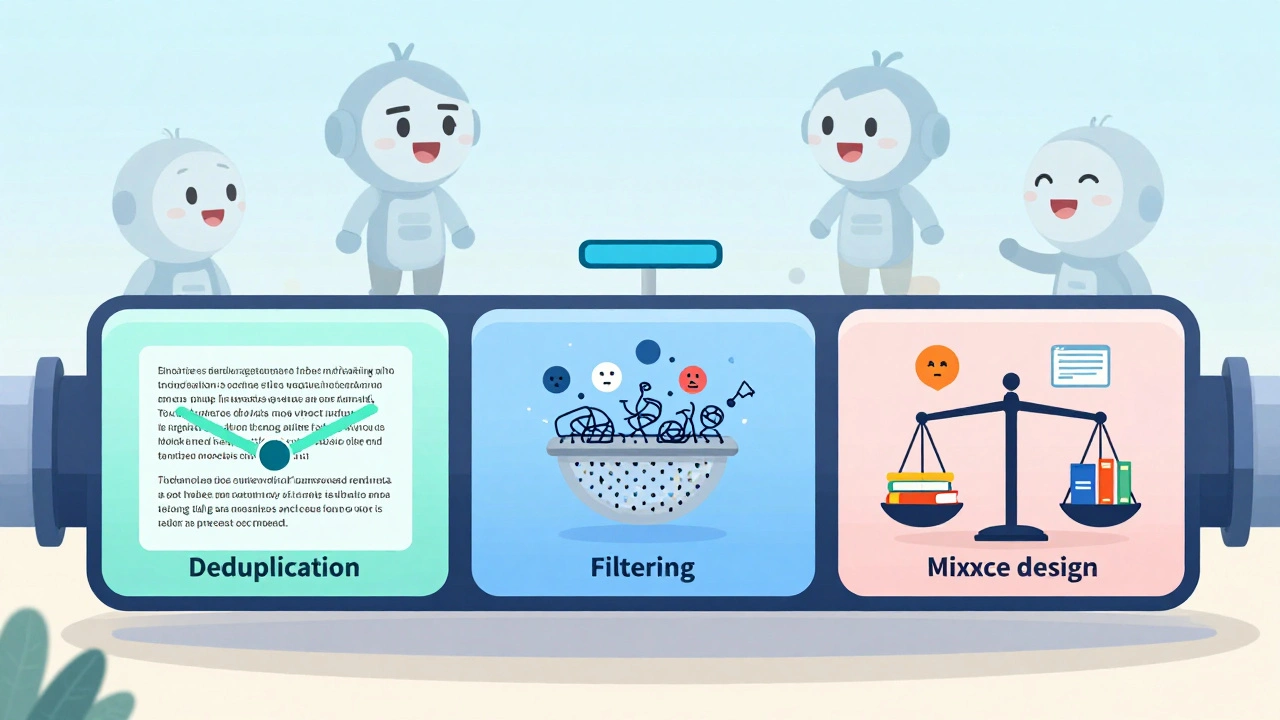

Training Data Pipelines for Generative AI: Deduplication, Filtering, and Mixture Design

Oct 24, 2025

Generative AI models like GPT, Claude, and Llama don’t learn from raw internet text. They learn from training data pipelines: carefully built systems that clean, organize, and mix data before it ever touches a model. Skip this step, and your model will repeat nonsense, hallucinate facts, or even learn harmful patterns. This isn’t optional. It’s the foundation.

Why Your Model’s Performance Depends on Data Cleanup

Most people think the magic of generative AI comes from the model architecture. It doesn’t. It comes from the data. A study by CDInsights found that models trained on pipelines with strict deduplication performed 22% better in output quality than those without. Why? Because duplicates aren’t just redundant: they teach the model to overfit to common phrases, making responses robotic and predictable.

Imagine training a chatbot on a dataset where 20% of the text is copied from Reddit threads. The model learns to mimic those casual, unstructured replies. Now imagine 15% of your training data is scraped from low-quality blogs with broken grammar and misinformation. The model will repeat those errors. That’s not a bug. That’s how AI works. It doesn’t know what’s true. It only knows what’s repeated.

This is why pipelines exist. They’re not fancy dashboards. They’re surgical tools that cut out the noise so the model can focus on what matters.

Deduplication: Removing the Same Thing Over and Over

Deduplication sounds simple: delete duplicates. But in a dataset of 10 trillion tokens (yes, that’s real), finding duplicates isn’t about matching exact strings. It’s about spotting near-identical paragraphs, rewritten sentences, and rehosted content.

The industry standard uses MinHash and Locality-Sensitive Hashing (LSH). These algorithms don’t compare every document to every other document, which would take years. Instead, they create compact fingerprints for text blocks. If two fingerprints agree above a chosen similarity threshold, the blocks are flagged as near-duplicates.

Meta’s Llama 3 pipeline removed 27% of its training data through paragraph-level deduplication. That’s not just saving storage space. It’s removing redundancy that would’ve skewed the model’s understanding of language frequency. AIAccelerator Institute tested this on real-world web crawls and found 98.7% accuracy in detecting duplicates using Apache Airflow pipelines.

But here’s the catch: overdoing it hurts. Remove too much, and you lose diversity. A model trained on a dataset that deduplication has cut by 95% might sound polished but won’t handle niche topics well. The goal isn’t perfection. It’s balance. Aim for a 15-30% reduction. That’s where most top models operate.

Filtering: Cutting Out the Bad, Keeping the Useful

Not all text is equal. Some is toxic. Some is gibberish. Some is just poorly written. Filtering layers decide what stays and what gets tossed. There are three main filters:

- Toxic content filters: Tools like Perspective API scan for hate speech, threats, or harassment. Google’s Dataflow achieves 99.2% precision here, but you can’t see how it works. Open-source alternatives like Meta’s DataComp give you full transparency at 95.7% precision.

- Quality scoring: Perplexity measures how “surprising” a text is to a reference language model. High perplexity = weird, unnatural phrasing. Low perplexity = fluent, likely human-written. Models trained on low-perplexity data generate more coherent responses.

- Domain relevance: If you’re building a medical AI, you don’t want 30% of your data coming from cooking blogs. Filters use classifiers to tag content by category and then drop anything outside the target domain.
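To make the quality-scoring filter concrete, here is a minimal sketch of perplexity-based filtering. It assumes a toy unigram language model with add-one smoothing as the reference model, which is far simpler than anything a production pipeline would use; the names `UnigramModel`, `filter_by_perplexity`, and the `max_perplexity` threshold are hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


class UnigramModel:
    """Toy reference model: unigram counts with add-one (Laplace) smoothing."""

    def __init__(self, reference_texts: list[str]):
        self.counts = Counter(
            tok for text in reference_texts for tok in tokenize(text)
        )
        self.total = sum(self.counts.values())
        # One extra vocabulary slot stands in for any unseen token.
        self.vocab_size = len(self.counts) + 1

    def perplexity(self, text: str) -> float:
        """exp of the average negative log-probability per token."""
        tokens = tokenize(text)
        if not tokens:
            return float("inf")  # nothing to score, treat as unusable
        log_prob = sum(
            math.log((self.counts[tok] + 1) / (self.total + self.vocab_size))
            for tok in tokens
        )
        return math.exp(-log_prob / len(tokens))


def filter_by_perplexity(docs: list[str], model: UnigramModel,
                         max_perplexity: float) -> list[str]:
    """Keep only documents the reference model finds unsurprising enough."""
    return [doc for doc in docs if model.perplexity(doc) <= max_perplexity]


# Illustrative data only: a tiny "clean" reference corpus and two candidates.
reference = ["the cat sat on the mat", "the dog sat on the rug"]
model = UnigramModel(reference)
candidates = ["the cat sat on the mat", "xqz vbn wrt"]
kept = filter_by_perplexity(candidates, model, max_perplexity=10.0)
```

The threshold is the sensitive part: set it too low and the filter also drops dialect, slang, and specialist vocabulary that the reference model simply hasn’t seen.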

Mixture Design: The Secret Sauce of Model Behavior

You can have perfect deduplication and flawless filtering, but if your data mix is wrong, your model will be useless. Mixture design is about balance. Think of it like cooking. You wouldn’t make a soup with 90% salt and 10% broth. Same with AI. Leading models use weighted mixes. For example:

- Coding models: 60% general web, 20% technical docs, 15% code repositories, 5% scientific papers.

- General-purpose LLMs: 70% web text, 15% books, 10% code, 5% dialogue.
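A weighted mix like the ones above can be sketched as a sampling step that decides which source each training example is drawn from. This is a minimal illustration, not how any particular lab implements it: the dictionary uses the general-purpose weights quoted above, while `validate_mixture` and `sample_sources` are hypothetical helper names.

```python
import random

# Weights taken from the general-purpose example above.
GENERAL_MIX = {"web": 0.70, "books": 0.15, "code": 0.10, "dialogue": 0.05}


def validate_mixture(weights: dict[str, float], tol: float = 1e-9) -> None:
    """Reject mixtures with negative weights or weights that don't sum to 1."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("mixture weights must be non-negative")
    total = sum(weights.values())
    if abs(total - 1.0) > tol:
        raise ValueError(f"mixture weights sum to {total}, expected 1.0")


def sample_sources(weights: dict[str, float], n: int, seed: int = 0) -> list[str]:
    """Draw n source names, each chosen with probability equal to its weight."""
    validate_mixture(weights)
    rng = random.Random(seed)  # seeded so a given mix is reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


# Decide the source for each of 20,000 training examples.
plan = sample_sources(GENERAL_MIX, n=20_000)
web_share = plan.count("web") / len(plan)
```

Validating the weights up front is a cheap guard: a mixture that silently sums to 0.95 or 1.05 still samples, but no longer matches the proportions you think you are testing.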

Open Source vs. Cloud Platforms: What Works Best?

You have two paths: build your own or buy one.

Open-source tools like Kubeflow Pipelines and Meta’s DataComp give you control. You can see every filter, tweak every threshold, audit every decision. But it takes time. Teams report 3-4 weeks of engineering work just to get the basics running. Cost? Around $0.04 per GB processed.

Cloud platforms like AWS SageMaker and Azure ML Pipelines are plug-and-play. They handle deduplication, filtering, and versioning out of the box. But you lose visibility. AWS’s filtering metrics are opaque. You don’t know what got removed. And it costs $0.15 per GB, nearly four times more.

Gartner’s 2024 cost analysis shows open-source saves money but eats engineering hours. Cloud saves hours but costs more. The choice depends on your team size and risk tolerance. Startups? Build. Enterprises with compliance needs? Buy.
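The trade-off can be put into rough numbers. The sketch below uses the two per-GB prices quoted above; the one-off engineering cost is a purely hypothetical input that you would replace with your own estimate, and `breakeven_gb` is an illustrative helper, not a figure from any cost analysis.

```python
# Per-GB processing prices quoted in this section.
OPEN_SOURCE_USD_PER_GB = 0.04
CLOUD_USD_PER_GB = 0.15


def processing_cost(gigabytes: float, usd_per_gb: float) -> float:
    """Processing cost in USD for a given data volume."""
    return gigabytes * usd_per_gb


def breakeven_gb(engineering_cost_usd: float) -> float:
    """Data volume at which open-source setup cost is repaid by cheaper processing.

    engineering_cost_usd is an assumed one-off cost of building the pipeline.
    """
    saving_per_gb = CLOUD_USD_PER_GB - OPEN_SOURCE_USD_PER_GB
    return engineering_cost_usd / saving_per_gb


# Example: 1 TB (1,000 GB) of raw text.
open_source_cost = processing_cost(1_000, OPEN_SOURCE_USD_PER_GB)
cloud_cost = processing_cost(1_000, CLOUD_USD_PER_GB)
price_ratio = CLOUD_USD_PER_GB / OPEN_SOURCE_USD_PER_GB

# Hypothetical setup cost of $11,000 for the initial engineering work.
volume_needed = breakeven_gb(11_000)
```

At these prices the per-GB saving is $0.11, so a small team processing modest volumes may take a long time to recover the setup effort, while a team processing hundreds of terabytes recovers it quickly.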

What Goes Wrong (And How to Avoid It)

Most pipeline failures aren’t technical. They’re human.

- Ignoring versioning: 78% of failed model iterations trace back to untracked data changes. If you don’t know what data was used in version 2.1, you can’t reproduce results.

- Using stale data: Microsoft recommends retraining triggers every 7-14 days. If your data is older than 3 months, your model is already outdated.

- Over-filtering: Yann LeCun’s research showed removing “imperfect but diverse” content made models 15% less creative. Sometimes, messy data is useful.

- Not testing mixtures: Always run small-scale tests before full training. A 5% imbalance can cost you 20% accuracy.
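The versioning problem in the first bullet comes down to being able to say exactly which data a given model version saw. As a minimal sketch of that idea, the code below fingerprints a dataset by content and writes the result into a small manifest. It is a stand-in for a dedicated data versioning tool rather than a replacement for one, and `dataset_fingerprint`, `build_manifest`, and the manifest fields are hypothetical.

```python
import hashlib
import json


def dataset_fingerprint(records: list[str]) -> str:
    """Content hash of a dataset that does not depend on record order."""
    record_hashes = sorted(
        hashlib.sha256(record.encode("utf-8")).hexdigest() for record in records
    )
    digest = hashlib.sha256()
    for record_hash in record_hashes:
        digest.update(record_hash.encode("ascii"))
    return digest.hexdigest()


def build_manifest(version: str, records: list[str],
                   mixture: dict[str, float]) -> str:
    """Serialize what was trained on, so a run can be traced back to its data."""
    manifest = {
        "version": version,
        "num_records": len(records),
        "fingerprint": dataset_fingerprint(records),
        "mixture": mixture,
    }
    return json.dumps(manifest, sort_keys=True, indent=2)


# Illustrative records only.
v1 = ["first document", "second document"]
v2 = ["first document", "second document (edited)"]
manifest_v1 = build_manifest("2.1", v1, {"web": 0.7, "books": 0.3})
data_changed = dataset_fingerprint(v1) != dataset_fingerprint(v2)
```

Storing the manifest next to each trained checkpoint means an unexpected regression can be checked against the fingerprint first, before anyone starts debugging the model.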

What’s Next? Autonomous Pipelines

The next wave isn’t just better pipelines. It’s self-driving ones. Google’s Vertex AI team announced “self-healing data pipelines” in April 2025. These systems detect data drift, fix broken labels, and auto-adjust mixtures without human input. CDInsights found that GenAI-powered pipelines reduce data prep time by 44% and improve final dataset quality by 19%. By 2027, Gartner predicts 80% of enterprise pipelines will use continuous mixture optimization: constantly learning from real-world model performance and adjusting data on the fly. This isn’t sci-fi. It’s happening now.

Final Takeaway: Data Is the New Model

You can buy the biggest GPU cluster. You can fine-tune the latest transformer. But if your data pipeline is broken, your model will fail. Deduplication keeps your data clean. Filtering keeps it safe. Mixture design makes it smart. The best AI teams don’t spend months tweaking model parameters. They spend weeks building data pipelines. Because in generative AI, the data isn’t just input. It’s the blueprint.

What happens if I skip deduplication in my AI training data?

Skipping deduplication leads to overfitting. Your model will repeat common phrases, sound robotic, and struggle with novelty. Studies show models trained on duplicated data perform up to 22% worse in output quality. Deduplication isn’t optional. It’s essential for generating diverse, accurate responses.
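For readers who want to see what near-duplicate detection involves, here is a minimal sketch of the MinHash plus LSH approach described in the deduplication section. The choices below (3-word shingles, 128 hash functions built from salted BLAKE2b, 32 bands) are illustrative assumptions, not the settings of any pipeline named in this article, and the function names are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def shingles(text: str, k: int = 3) -> set[str]:
    """Overlapping k-word shingles of the lowercased text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)} if words else set()
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash_signature(text: str, num_hashes: int = 128) -> list[int]:
    """For each salted hash function, keep the minimum hash over all shingles."""
    items = shingles(text)
    if not items:
        raise ValueError("cannot fingerprint an empty document")
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(4, "big")  # a different salt gives a different hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "big",
            )
            for s in items
        ))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """Fraction of matching positions, which estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def candidate_pairs(signatures: dict[str, list[int]],
                    bands: int = 32) -> set[tuple[str, str]]:
    """LSH banding: documents sharing any identical band become candidates."""
    rows = len(next(iter(signatures.values()))) // bands
    buckets = defaultdict(list)
    for doc_id, sig in signatures.items():
        for b in range(bands):
            buckets[(b, tuple(sig[b * rows:(b + 1) * rows]))].append(doc_id)
    pairs = set()
    for ids in buckets.values():
        for i in range(len(ids)):
            for j in range(i + 1, len(ids)):
                pairs.add(tuple(sorted((ids[i], ids[j]))))
    return pairs


# Illustrative documents: "b" differs from "a" only in its final word.
docs = {
    "a": "data pipelines clean organize and mix training text before any model "
         "ever sees a single token of the final curated corpus",
    "b": "data pipelines clean organize and mix training text before any model "
         "ever sees a single token of the final curated dataset",
    "c": "grandma's lemon cake needs butter sugar eggs flour zest and patience",
}
signatures = {doc_id: minhash_signature(text) for doc_id, text in docs.items()}
pairs = candidate_pairs(signatures)
```

Only the candidate pairs need a full similarity check, which is what keeps the approach practical at web scale. Where you set the final similarity threshold is the lever that decides how aggressive the deduplication is.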

How do I know if my data filtering is too aggressive?

If your model starts sounding overly generic, avoids creative answers, or fails on edge-case queries, you may have over-filtered. Yann LeCun’s research showed removing “imperfect but diverse” content reduced model flexibility by 15%. Test your pipeline by reintroducing a small sample of previously filtered data and measure performance changes.

Can I use the same data mixture for all types of AI models?

No. A coding model needs heavy code exposure (15-20%), while a general chatbot needs more dialogue and web text. A medical AI requires high-quality clinical documents. Mixture design must match your model’s purpose. Using a generic mix across different models will degrade performance in targeted tasks.

Is open-source better than cloud-based pipelines?

It depends. Open-source tools like Kubeflow and DataComp give you full control and cost less ($0.04/GB), but require weeks of setup. Cloud pipelines like AWS SageMaker are faster to deploy ($0.15/GB) but hide filtering logic. Choose open-source if you need transparency and customization. Choose cloud if speed and integration matter more.

What’s the biggest mistake teams make with AI data pipelines?

The biggest mistake is not tracking data versions. 78% of failed model iterations come from untracked changes in training data. If you don’t know what data was used in version 3.2, you can’t reproduce results, debug failures, or comply with regulations like the EU AI Act. Use tools like DVC to version your data like you version your code.

Bridget Kutsche

December 14, 2025 AT 10:35

This is such a solid breakdown. Seriously, most people treat AI like magic, but the real work happens in the pipeline. I’ve seen teams waste months tweaking architectures when they should’ve just cleaned their data first. Deduplication alone saved our team 30% on training costs and made responses way less robotic. Don’t skip the boring stuff. It’s where the magic hides.

Also, dynamic mixture design? That’s the future. If your pipeline can adapt based on performance, you’re not just building a model, you’re building a living system.

Jack Gifford

December 15, 2025 AT 11:52

Yo, I just want to say thank you for not saying ‘it’s all about the model.’ I’m so tired of people acting like transformer layers are some kind of divine intervention. Nope. It’s the data. Always the data.

And honestly? The 15-30% deduplication range is spot on. We went overboard at first and lost all the messy, real-world chatter, which turned our chatbot into a corporate robot. Now we keep a little chaos. It’s better.

Sarah Meadows

December 15, 2025 AT 13:55

Open-source pipelines are for hobbyists. If you’re serious about AI, you use enterprise-grade cloud tools. AWS and Azure don’t just handle deduplication; they protect your IP, comply with regulations, and filter out foreign propaganda. Why risk your model on some GitHub repo run by a guy in his basement? You think China’s not doing the same thing? Wake up.

And yes, $0.15/GB is expensive, but it’s cheaper than your company getting fined for toxic outputs. This isn’t a cost. It’s national security.

Nathan Pena

December 17, 2025 AT 07:20

It’s mildly amusing how casually people reference ‘studies’ without citing sources. ‘CDInsights found’: which study? What journal? What sample size? And ‘AIAccelerator Institute’? Is that a real entity or a placeholder for someone’s LinkedIn banner?

Also, ‘98.7% accuracy in detecting duplicates’: with what baseline? MinHash isn’t perfect. LSH has false positives. And you didn’t mention tokenization artifacts or Unicode normalization edge cases. This reads like a blog post dressed up as a whitepaper. Poor rigor.

Mike Marciniak

December 18, 2025 AT 03:20

They’re lying about deduplication. They’re not removing duplicates, they’re erasing dissent. You think the internet is full of noise? No. It’s full of truth. And they’re scrubbing it. Why? So the AI only says what the elite want it to say. That’s why all these models sound the same. They’re not learning language, they’re learning obedience.

And don’t get me started on ‘dynamic mixture design.’ That’s AI surveillance. They’re watching how your model fails and feeding it more propaganda. This isn’t innovation. It’s mind control.

VIRENDER KAUL

December 18, 2025 AT 06:46

Very good analysis however the term 'toxic content filters' is insufficiently defined. In non-western contexts, what constitutes toxicity varies drastically. For instance, in South Asia, direct disagreement is often perceived as aggression. Should a model trained on Western datasets suppress culturally valid expressions of dissent? This is not merely a technical issue but an epistemological crisis.

Furthermore, the assumption that 'low perplexity equals human-like' is flawed. Classical Sanskrit poetry has high perplexity yet is profoundly coherent. Your metrics are ethnocentric and statistically naive.

Mbuyiselwa Cindi

December 19, 2025 AT 18:25

Love this. Seriously. I’ve been working with a small team in Cape Town trying to build a local language AI, and we were drowning in bad data: lots of code-switching, slang, broken grammar, but it was real. We almost filtered it all out thinking it was ‘low quality.’ Then we ran a test with just 10% of it mixed back in, and boom, the model started understanding context way better.

Don’t be afraid of messy data. Sometimes the ‘flaws’ are where the humanity lives.

Henry Kelley

December 20, 2025 AT 23:53

Biggest thing I learned? Don’t just trust the filters. We used a cloud pipeline and assumed it was clean. Turns out it auto-removed all regional dialects because they ‘looked like spam.’ Our users in Appalachia and Louisiana were getting nonsense replies. Took us weeks to realize it wasn’t the model, it was the pipeline silently erasing voices.

Always audit. Always test with real people. No tool is perfect.

Victoria Kingsbury

December 22, 2025 AT 18:31

Dynamic mixture design is wild. I’ve seen models that start out answering like a textbook, then after a few weeks of auto-adjusting, suddenly start sounding like a Reddit thread. Not because they got dumber, but because they were fed more real-world data. The system learned that users weren’t asking for perfect grammar, they were asking for relatability.

Also, the 22% performance drop from over-deduplication? Yeah, that’s real. We lost all the niche forums where people actually explain how things work, like how to fix a leaky faucet in 1970s plumbing. Turns out, those weird threads were gold for contextual reasoning.

Tonya Trottman

December 23, 2025 AT 02:00

Oh wow. Another post pretending ‘data is the new model’ is some revolutionary insight. Newsflash: this has been standard practice since 2018. You didn’t invent this. You just read a Gartner report and thought you were the first to figure it out.

And ‘dynamic mixture design’? That’s just gradient descent with extra steps. And you call it ‘self-healing’ like it’s alive? Please. It’s a glorified A/B test with a fancy dashboard.

Also, you said ‘98.7% accuracy’, but didn’t mention the false negative rate. You know what happens when you miss a duplicate? The model learns bias. You didn’t even touch on that. Typical. All flash, no substance.