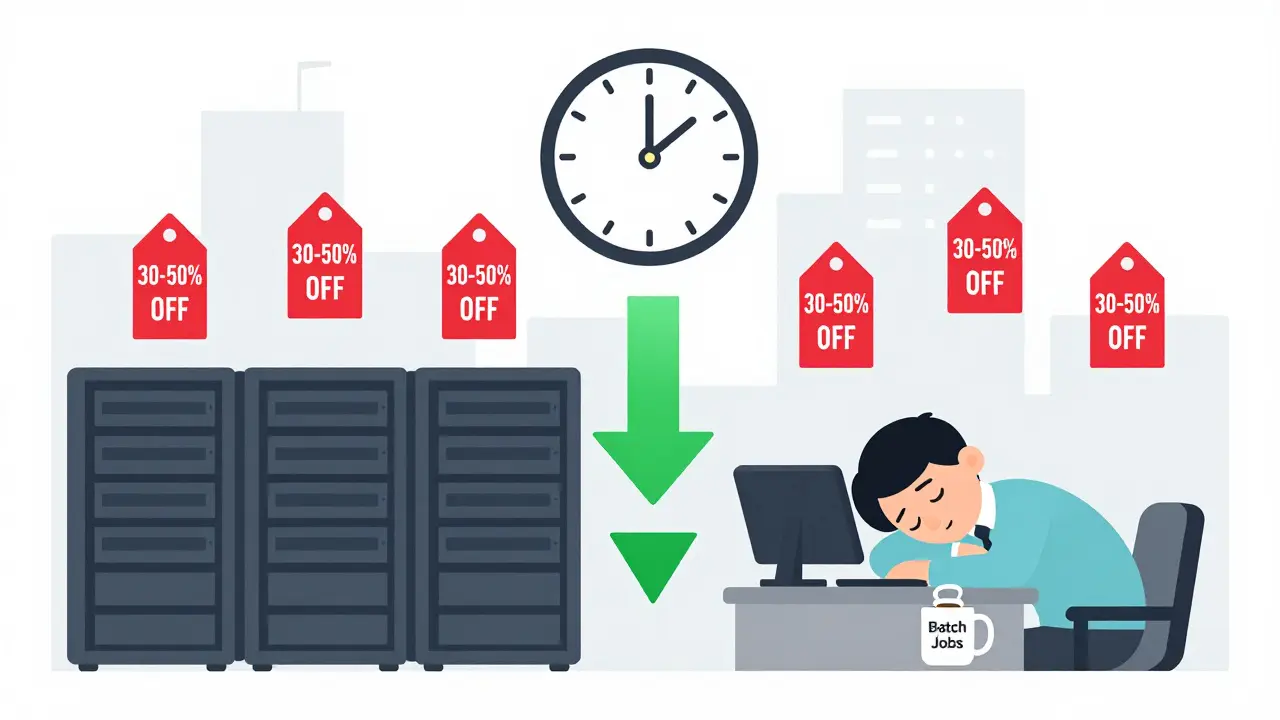

Cloud Cost Optimization for Generative AI: Scheduling, Autoscaling, and Spot

Learn how to slash generative AI cloud costs by 50-75% using scheduling, autoscaling, and spot instances. Real-world strategies that work in 2026.

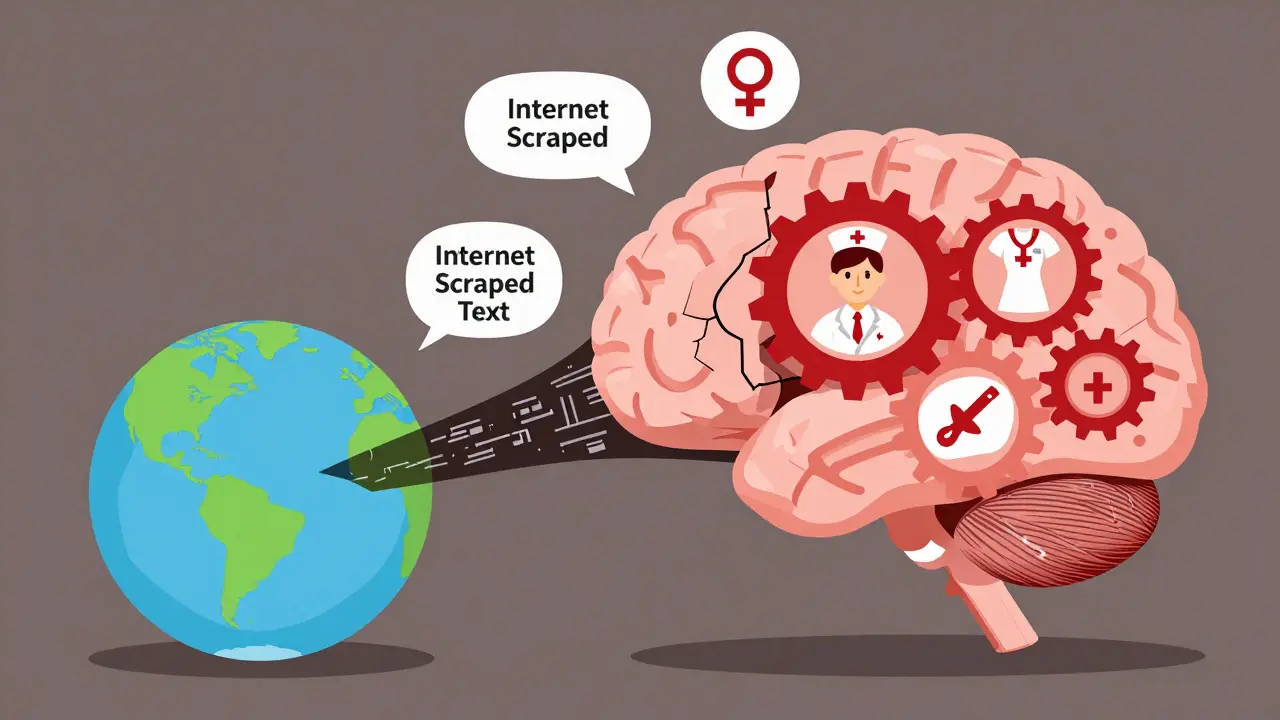

Large language models inherit bias from training data, leading to harmful stereotypes in hiring, healthcare, and daily use. Learn where bias comes from, how it manifests, and what’s being done to fix it.

Coding-specialist large language models like GitHub Copilot and CodeLlama are transforming how teams write software by enabling AI-powered pair programming at scale. Learn how they boost productivity, where they fall short, and how to use them safely.

HR Knowledgebots use large language models to answer employee policy questions from internal handbooks, cutting HR workload by 30-50% and providing 24/7 instant answers grounded in real company documents.

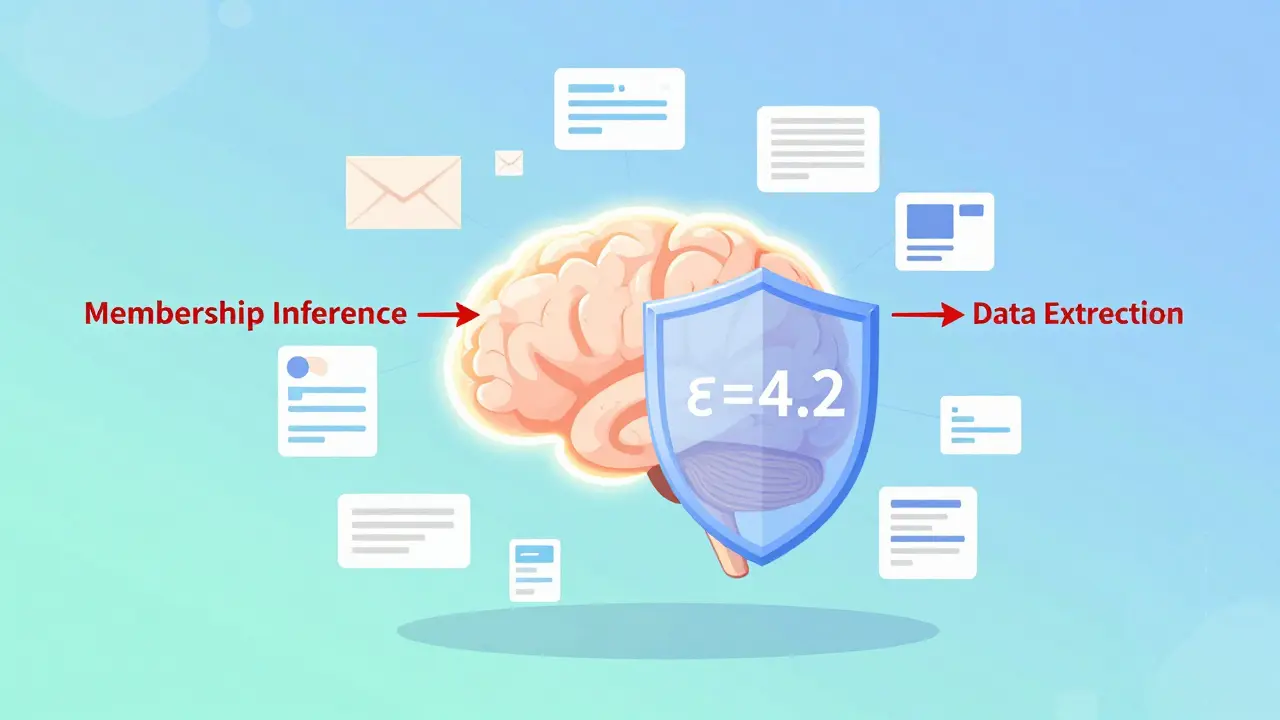

Differential privacy adds mathematical noise to LLM training to prevent memorization of sensitive data. It offers provable privacy guarantees but reduces accuracy, slows training, and increases memory use. Learn how it works, where it's used, and whether it's right for your project.

Tokenizer design choices (BPE, WordPiece, Unigram) directly impact LLM accuracy, speed, and memory use. Learn how vocabulary size and tokenization methods affect performance in real-world applications.

AI coding assistants don't automatically make developers faster. Learn how to measure true productivity by balancing throughput and code quality, and avoid the common traps that sabotage ROI.

LoRA and adapter modules let you fine-tune massive language models using 99% less memory than full fine-tuning. Learn how these techniques work, when to use them, and how to deploy them on consumer hardware.

Vibe coding with AI tools like GitHub Copilot breaks traditional compliance. Learn how SOC 2, ISO 27001, and NIST controls must adapt to audit AI-generated code, prevent breaches, and avoid failed audits.

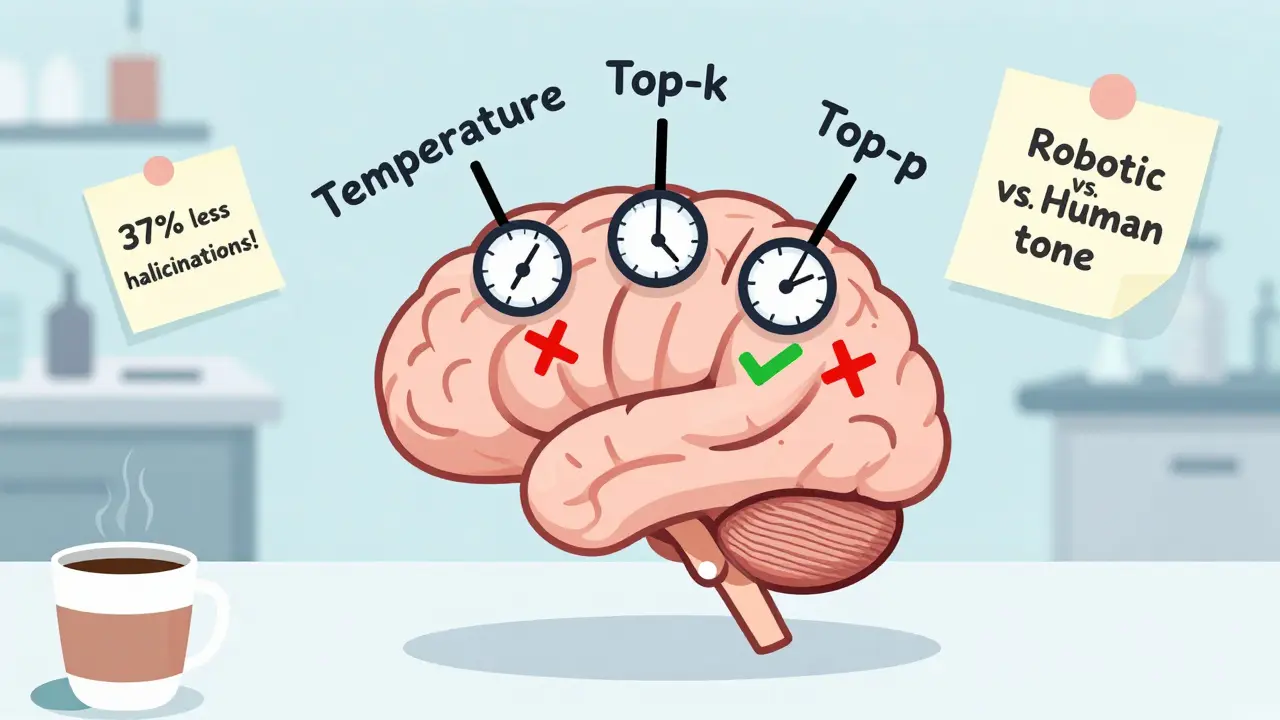

Learn how temperature, top-k, and nucleus sampling directly affect LLM hallucinations. Discover the best settings for factual accuracy, creative tasks, and real-world applications, backed by 2025 research and industry data.

Shadow testing lets you evaluate new large language models using real production traffic without affecting users. It's now a standard practice for enterprises to catch safety, cost, and performance issues before they reach customers.

Learn how to pick the right vibe coding platform for your team based on skill level, security needs, and project type. Compare Replit, Windsurf, Noca, and Builder.io with real data from 2026.